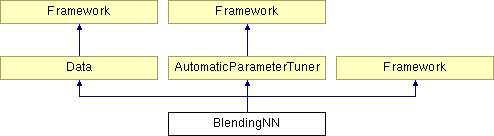

BlendingNN Class Reference

#include <BlendingNN.h>

Public Member Functions

| Return type | Member |
| --- | --- |
| | BlendingNN () |
| | ~BlendingNN () |
| void | readSpecificMaps () |
| void | init () |
| void | train () |
| double | calcRMSEonProbe () |
| double | calcRMSEonBlend () |
| void | saveBestPrediction () |
| void | loadWeights () |
| void | predictEnsembleOutput (REAL **predictions, REAL *output) |

Private Attributes

| Type | Name |
| --- | --- |
| NN ** | m_nn |
| REAL ** | m_fullPredictionsReal |
| REAL ** | m_inputsTrain |
| REAL ** | m_inputsProbe |
| REAL * | m_meanBlend |
| REAL * | m_stdBlend |
| REAL * | m_predictionCache |
| int | m_nPredictors |
| vector< string > | m_usedFiles |
| int | m_epochs |
| int | m_epochsBest |
| int | m_minTuninigEpochs |
| int | m_maxTuninigEpochs |
| int | m_nrLayer |
| int | m_batchSize |
| double | m_offsetOutputs |
| double | m_scaleOutputs |
| double | m_initWeightFactor |
| double | m_learnrate |
| double | m_learnrateMinimum |
| double | m_learnrateSubtractionValueAfterEverySample |
| double | m_learnrateSubtractionValueAfterEveryEpoch |
| double | m_momentum |
| double | m_weightDecay |
| double | m_minUpdateErrorBound |
| double | m_etaPosRPROP |
| double | m_etaNegRPROP |
| double | m_minUpdateRPROP |
| double | m_maxUpdateRPROP |
| bool | m_enableRPROP |
| bool | m_useBLASforTraining |
| string | m_neuronsPerLayer |
| string | m_weightFile |

Detailed Description

Trains a neural network with k-fold cross-validation; after training, the network blends the existing full predictions. Full predictions are the existing predictions of the models on the training set. This class can be used in place of the linear blender and may deliver better results in terms of RMSE.
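The k-fold split that this class relies on can be sketched as follows. This is a minimal illustration, not the framework's code: how `m_slotBoundaries` is actually computed is not shown on this page, so the even split below and the helper names `slotBoundaries` and `inProbeSlot` are assumptions.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: boundaries of nCross contiguous slots over nTrain
// shuffled samples. Slot i covers positions [b[i], b[i+1]).
std::vector<int> slotBoundaries(int nTrain, int nCross)
{
    assert(nTrain >= 0 && nCross > 0);
    std::vector<int> b(nCross + 1);
    for (int i = 0; i <= nCross; ++i)
        b[i] = static_cast<int>(static_cast<long long>(nTrain) * i / nCross);
    return b;
}

// Hypothetical helper: position j belongs to the probe set of the given fold
// if it lies inside that fold's slot; otherwise it belongs to the train set.
bool inProbeSlot(const std::vector<int>& b, int fold, int j)
{
    return j >= b[fold] && j < b[fold + 1];
}
```

With 10 samples and 3 folds this yields the boundaries 0, 3, 6, 10, so every sample is a probe sample in exactly one fold and a train sample in the others.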

Definition at line 21 of file BlendingNN.h.

Constructor & Destructor Documentation

BlendingNN::BlendingNN ()

Constructor

Definition at line 8 of file BlendingNN.cpp.

    BlendingNN::BlendingNN()
    {
        cout<<"BlendingNN"<<endl;

        // init member vars
        m_nn = 0;
        m_fullPredictionsReal = 0;
        m_inputsTrain = 0;
        m_inputsProbe = 0;
        m_meanBlend = 0;
        m_stdBlend = 0;
        m_predictionCache = 0;
        m_nPredictors = 0;
        m_epochs = 0;
        m_epochsBest = 0;
        m_minTuninigEpochs = 0;
        m_maxTuninigEpochs = 0;
        m_nrLayer = 0;
        m_batchSize = 0;
        m_offsetOutputs = 0;
        m_scaleOutputs = 0;
        m_initWeightFactor = 0;
        m_learnrate = 0;
        m_learnrateMinimum = 0;
        m_learnrateSubtractionValueAfterEverySample = 0;
        m_learnrateSubtractionValueAfterEveryEpoch = 0;
        m_momentum = 0;
        m_weightDecay = 0;
        m_minUpdateErrorBound = 0;
        m_etaPosRPROP = 0;
        m_etaNegRPROP = 0;
        m_minUpdateRPROP = 0;
        m_maxUpdateRPROP = 0;
        m_enableRPROP = 0;
        m_useBLASforTraining = 0;
    }

BlendingNN::~BlendingNN ()

Destructor

Definition at line 48 of file BlendingNN.cpp.

    BlendingNN::~BlendingNN()
    {
        cout<<"descructor BlendingNN"<<endl;

        // free the full predictions (one buffer per used prediction file)
        for ( int i=0;i<m_usedFiles.size();i++ )
            delete[] m_fullPredictionsReal[i];
        delete[] m_fullPredictionsReal;

        // free the per-fold train and probe inputs
        for ( int i=0;i<m_nCross;i++ )
        {
            if ( m_inputsTrain )
            {
                if ( m_inputsTrain[i] )
                    delete[] m_inputsTrain[i];
                m_inputsTrain[i] = 0;
            }
            if ( m_inputsProbe )
            {
                if ( m_inputsProbe[i] )
                    delete[] m_inputsProbe[i];
                m_inputsProbe[i] = 0;
            }
        }
        if ( m_inputsTrain )
            delete[] m_inputsTrain;
        if ( m_inputsProbe )
            delete[] m_inputsProbe;
        if ( m_meanBlend )
            delete[] m_meanBlend;
        if ( m_stdBlend )
            delete[] m_stdBlend;

        // free the networks; check m_nn before indexing it, because it
        // stays 0 when neither init() nor loadWeights() was called
        if ( m_nn )
        {
            for ( int i=0;i<m_nCross+1;i++ )
                if ( m_nn[i] )
                    delete m_nn[i];
            delete[] m_nn;
        }
        if ( m_predictionCache )
            delete[] m_predictionCache;
    }

Member Function Documentation

double BlendingNN::calcRMSEonBlend () [virtual]

Reimplementation of the virtual method. Not needed here.

Returns: 0.0

Implements AutomaticParameterTuner.

Definition at line 438 of file BlendingNN.cpp.

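double BlendingNN::calcRMSEonProbe () [virtual]

Calculate the current error: train each cross-validation network for one epoch, predict the probe samples of its fold into the prediction cache, and compute the RMSE of the cached predictions over the whole training set. For classification datasets, the classification error is also printed.

Returns: the RMSE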

Implements AutomaticParameterTuner.

Definition at line 359 of file BlendingNN.cpp.

    double BlendingNN::calcRMSEonProbe()
    {
        for ( int i=0;i<m_nCross;i++ )
        {
            cout<<"."<<flush;

            // one gradient descent step (one epoch)
            m_nn[i]->trainOneEpoch();

            // predict the probe samples
            for ( int j=0;j<m_probeSize[i];j++ )
                m_nn[i]->predictSingleInput ( m_inputsProbe[i] + j*m_nPredictors*m_nClass*m_nDomain, m_predictionCache + m_probeIndex[i][j]*m_nClass*m_nDomain );
        }
        /*REAL* tmp = new REAL[m_nPredictors*m_nClass];
        for(int i=0;i<m_nTrain;i++)
        {
            for(int j=0;j<m_nPredictors;j++)
                for(int k=0;k<m_nClass;k++)
                    tmp[j*m_nClass+k] = m_fullPredictionsReal[j][i*m_nClass + k];
            m_nn[m_nCross]->predictSingleInput(tmp, m_predictionCache+i*m_nClass);
        }
        delete[] tmp;*/

        // calc RMSE
        int* wrongLabelCnt = new int[m_nDomain];
        for ( int d=0;d<m_nDomain;d++ )
            wrongLabelCnt[d] = 0;
        double rmse = 0.0, err;
        for ( int i=0;i<m_nTrain;i++ )
        {
            for ( int j=0;j<m_nClass*m_nDomain;j++ )
            {
                REAL v = m_predictionCache[i*m_nClass*m_nDomain+j];
                if ( m_enablePostBlendClipping )
                    v = NumericalTools::clipValue ( m_predictionCache[i*m_nClass*m_nDomain+j], m_negativeTarget, m_positiveTarget );
                err = v - m_trainTargetOrig[i*m_nClass*m_nDomain+j];
                // this second assignment overwrites the clipped error above,
                // so the returned RMSE is based on the unclipped prediction
                err = m_predictionCache[i*m_nClass*m_nDomain+j] - m_trainTargetOrig[i*m_nClass*m_nDomain+j];
                rmse += err * err;
            }
        }
        if ( Framework::getDatasetType() ==true )
        {
            // classification error: the predicted label is the argmax over the classes
            for ( int d=0;d<m_nDomain;d++ )
            {
                for ( int i=0;i<m_nTrain;i++ )
                {
                    REAL max = -1e10;
                    int ind = -1;
                    for ( int j=0;j<m_nClass;j++ )
                        if ( m_predictionCache[d*m_nClass+i*m_nDomain*m_nClass+j] > max )
                        {
                            max = m_predictionCache[d*m_nClass+i*m_nDomain*m_nClass+j];
                            ind = j;
                        }
                    if ( ind != m_trainLabelOrig[d + i*m_nDomain] )
                        wrongLabelCnt[d]++;
                }
            }
            int nWrong = 0;
            for ( int d=0;d<m_nDomain;d++ )
            {
                nWrong += wrongLabelCnt[d];
                if ( m_nDomain > 1 )
                    cout<<"["<< ( double ) wrongLabelCnt[d]/ ( double ) m_nTrain<<"] ";
            }
            cout<<" (classError:"<<100.0* ( double ) nWrong/ ( ( double ) m_nTrain* ( double ) m_nDomain ) <<"%)";
        }
        delete[] wrongLabelCnt;
        rmse = sqrt ( rmse/ ( double ) ( m_nTrain*m_nClass*m_nDomain ) );

        return rmse;
    }
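The error measure computed by this method can be sketched in isolation. The function below is a minimal illustration with an optional clipping step applied before the error is taken; the name `rmseWithClipping`, the use of `float` for `REAL`, and the use of `std::min`/`std::max` in place of `NumericalTools::clipValue` are assumptions for the sake of a self-contained example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Root mean squared error between predictions and targets.
// If clip is true, each prediction is first limited to [lo, hi].
double rmseWithClipping(const std::vector<float>& pred,
                        const std::vector<float>& target,
                        bool clip, float lo, float hi)
{
    assert(pred.size() == target.size());
    if (pred.empty())
        return 0.0;  // avoid dividing by zero on empty input

    double sum = 0.0;
    for (std::size_t i = 0; i < pred.size(); ++i)
    {
        float v = pred[i];
        if (clip)
            v = std::min(std::max(v, lo), hi);
        const double err = static_cast<double>(v) - static_cast<double>(target[i]);
        sum += err * err;
    }
    return std::sqrt(sum / static_cast<double>(pred.size()));
}
```

Clipping only changes the result when a prediction falls outside the target range: a prediction of 2 against a target of 1 contributes an error of 1 unclipped, and an error of 0 when clipped to [-1, 1].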

void BlendingNN::init ()

Initialize the blend networks

Definition at line 125 of file BlendingNN.cpp.

    void BlendingNN::init()
    {
        cout<<"Init BlendingNN"<<endl<<endl;

        // read full predictions in directory
        string directory = m_datasetPath + "/" + m_fullPredPath + "/";
        vector<string> files = m_algorithmNameList;  //Data::getDirectoryFileList(directory);
        m_nPredictors = 0;
        for ( int i=0;i<files.size();i++ )
        {
            if ( files[i].at ( files[i].size()-1 ) != '.' && files[i].find ( ".dat" ) == files[i].length()-4 )
            {
                //cout<<endl<<"File: "<<files[i]<<endl<<endl;
                m_usedFiles.push_back ( files[i] );
                m_nPredictors++;
            }
        }

        // allocate memory for fullPredictions
        m_fullPredictionsReal = new REAL*[m_nPredictors];

        // allocate memory for cross train sets
        m_inputsTrain = new REAL*[m_nCross+1];
        m_inputsProbe = new REAL*[m_nCross];
        for ( int i=0;i<m_nCross;i++ )
        {
            m_inputsTrain[i] = new REAL[m_trainSize[i]*m_nPredictors*m_nClass*m_nDomain];
            m_inputsProbe[i] = new REAL[m_probeSize[i]*m_nPredictors*m_nClass*m_nDomain];
        }

        // load the fullPredictors
        cout<<"Prediction files:"<<endl;
        for ( int i=0;i<m_usedFiles.size();i++ )
        {
            // allocate and read
            m_fullPredictionsReal[i] = new REAL[m_nTrain*m_nClass*m_nDomain];
            fstream f ( m_usedFiles[i].c_str(), ios::in );
            if ( f.is_open() == false )
                assert ( false );
            f.read ( ( char* ) m_fullPredictionsReal[i], sizeof ( REAL ) *m_nTrain*m_nClass*m_nDomain );
            f.close();

            // calc RMSE and classification error
            int* wrongLabelCnt = new int[m_nDomain];
            for ( int d=0;d<m_nDomain;d++ )
                wrongLabelCnt[d] = 0;
            double rmse = 0.0, err;
            for ( int k=0;k<m_nTrain;k++ )
            {
                for ( int j=0;j<m_nClass*m_nDomain;j++ )
                {
                    err = m_fullPredictionsReal[i][k*m_nClass*m_nDomain + j] - m_trainTargetOrig[k*m_nClass*m_nDomain + j];
                    rmse += err * err;
                }
            }
            if ( Framework::getDatasetType() ==true )
            {
                for ( int d=0;d<m_nDomain;d++ )
                {
                    for ( int k=0;k<m_nTrain;k++ )
                    {
                        REAL max = -1e10;
                        int ind = -1;
                        for ( int j=0;j<m_nClass;j++ )
                            if ( m_fullPredictionsReal[i][d*m_nClass+k*m_nClass*m_nDomain + j] > max )
                            {
                                max = m_fullPredictionsReal[i][d*m_nClass+k*m_nClass*m_nDomain + j];
                                ind = j;
                            }

                        if ( m_trainLabelOrig[k*m_nDomain + d] != ind )
                            wrongLabelCnt[d]++;
                    }
                }

                int nWrong = 0;
                for ( int d=0;d<m_nDomain;d++ )
                {
                    nWrong += wrongLabelCnt[d];
                    if ( m_nDomain > 1 )
                        cout<<"["<< ( double ) wrongLabelCnt[d]/ ( double ) m_nTrain<<"] ";
                }
                cout<<" (classErr:"<<100.0* ( double ) nWrong/ ( ( double ) m_nTrain* ( double ) m_nDomain ) <<"%)";
            }
            delete[] wrongLabelCnt;
            cout<<"File:"<<m_usedFiles[i]<<" RMSE:"<<sqrt ( rmse/ ( double ) ( m_nClass*m_nDomain*m_nTrain ) );
            cout<<endl;
        }
        cout<<endl;

        // mean and std
        m_meanBlend = new REAL[m_nPredictors*m_nClass*m_nDomain];
        m_stdBlend = new REAL[m_nPredictors*m_nClass*m_nDomain];
        for ( int i=0;i<m_nPredictors*m_nClass*m_nDomain;i++ )
        {
            m_meanBlend[i] = 0.0;
            m_stdBlend[i] = 0.0;
        }
        for ( int i=0;i<m_nTrain;i++ )
            for ( int j=0;j<m_nPredictors;j++ )
                for ( int k=0;k<m_nClass*m_nDomain;k++ )
                    m_meanBlend[j*m_nClass*m_nDomain+k] += m_fullPredictionsReal[j][i*m_nClass*m_nDomain+k];
        for ( int i=0;i<m_nPredictors*m_nClass*m_nDomain;i++ )
            m_meanBlend[i] /= REAL ( m_nTrain );
        for ( int i=0;i<m_nTrain;i++ )
            for ( int j=0;j<m_nPredictors;j++ )
                for ( int k=0;k<m_nClass*m_nDomain;k++ )
                {
                    REAL v = m_fullPredictionsReal[j][i*m_nClass*m_nDomain+k] - m_meanBlend[j*m_nClass*m_nDomain+k];
                    m_stdBlend[j*m_nClass*m_nDomain+k] += v * v;
                }
        for ( int i=0;i<m_nPredictors*m_nClass*m_nDomain;i++ )
            m_stdBlend[i] = sqrt ( m_stdBlend[i]/REAL ( m_nTrain ) );

        REAL meanMin=1e10,meanMax=-1e10,stdMin=1e10,stdMax=-1e10;
        for ( int i=0;i<m_nPredictors*m_nClass*m_nDomain;i++ )
        {
            if ( m_meanBlend[i]<meanMin )
                meanMin = m_meanBlend[i];
            if ( m_meanBlend[i]>meanMax )
                meanMax = m_meanBlend[i];
            if ( m_stdBlend[i]<stdMin )
                stdMin = m_stdBlend[i];
            if ( m_stdBlend[i]>stdMax )
                stdMax = m_stdBlend[i];
        }
        cout<<"meanMin:"<<meanMin<<" meanMax:"<<meanMax<<" stdMin:"<<stdMin<<" stdMax:"<<stdMax<<endl;

        // apply the normalization to the read fullpredictions
        for ( int i=0;i<m_nPredictors;i++ )
        {
            for ( int j=0;j<m_nTrain;j++ )
                for ( int k=0;k<m_nClass*m_nDomain;k++ )
                    m_fullPredictionsReal[i][j*m_nClass*m_nDomain+k] = ( m_fullPredictionsReal[i][j*m_nClass*m_nDomain+k]-m_meanBlend[i*m_nClass*m_nDomain+k] ) /m_stdBlend[i*m_nClass*m_nDomain+k];
        }


        // copy data to cross-validation slots
        // the only difference here is new input data
        for ( int i=0;i<m_nCross;i++ )
        {
            // slot of probeset
            int begin = m_slotBoundaries[i];
            int end = m_slotBoundaries[i+1];

            int probeCnt = 0, trainCnt = 0;

            // go through whole trainOrig set
            for ( int j=0;j<m_nTrain;j++ )
            {
                int index = m_mixList[j];

                // probe set
                if ( j>=begin && j <end )
                {
                    for ( int k=0;k<m_nPredictors;k++ )
                        for ( int l=0;l<m_nClass*m_nDomain;l++ )
                            m_inputsProbe[i][probeCnt* ( m_nPredictors*m_nClass*m_nDomain ) +k*m_nClass*m_nDomain+l] = m_fullPredictionsReal[k][index*m_nClass*m_nDomain+l];
                    probeCnt++;
                }
                else  // train set
                {
                    for ( int k=0;k<m_nPredictors;k++ )
                        for ( int l=0;l<m_nClass*m_nDomain;l++ )
                            m_inputsTrain[i][trainCnt* ( m_nPredictors*m_nClass*m_nDomain ) +k*m_nClass*m_nDomain+l] = m_fullPredictionsReal[k][index*m_nClass*m_nDomain+l];
                    trainCnt++;
                }
            }
            if ( probeCnt != m_probeSize[i] || trainCnt != m_trainSize[i] ) // safety check
                assert ( false );
        }
        m_inputsTrain[m_nCross] = new REAL[m_nTrain*m_nPredictors*m_nClass*m_nDomain];
        for ( int i=0;i<m_nTrain;i++ )
            for ( int j=0;j<m_nPredictors;j++ )
                for ( int k=0;k<m_nClass*m_nDomain;k++ )
                    m_inputsTrain[m_nCross][i* ( m_nPredictors*m_nClass*m_nDomain ) +j*m_nClass*m_nDomain+k] = m_fullPredictionsReal[j][i*m_nClass*m_nDomain+k];


        // construct neural networks as blender (cross fold validation)
        m_nn = new NN*[m_nCross+1];
        for ( int i=0;i<m_nCross+1;i++ )
            m_nn[i] = new NN();

        // configure the NNs (each one is allocated once, in the loop above)
        for ( int i=0;i<m_nCross+1;i++ )
        {
            cout<<"Create a Neural Network ("<<i+1<<"/"<<m_nCross+1<<")"<<endl;
            m_nn[i]->setNrTargets ( m_nClass*m_nDomain );
            m_nn[i]->setNrInputs ( m_nPredictors*m_nClass*m_nDomain );
            m_nn[i]->setNrExamplesTrain ( i<m_nCross?m_trainSize[i]:m_nTrain );
            m_nn[i]->setNrExamplesProbe ( i<m_nCross?m_probeSize[i]:0 );
            m_nn[i]->setTrainInputs ( m_inputsTrain[i] );
            m_nn[i]->setTrainTargets ( i<m_nCross?m_trainTarget[i]:m_trainTargetOrig );
            m_nn[i]->setProbeInputs ( i<m_nCross?m_inputsProbe[i]:0 );
            m_nn[i]->setProbeTargets ( i<m_nCross?m_probeTarget[i]:0 );

            // learn parameters
            m_nn[i]->setInitWeightFactor ( m_initWeightFactor );
            m_nn[i]->setLearnrate ( m_learnrate );
            m_nn[i]->setLearnrateMinimum ( m_learnrateMinimum );
            m_nn[i]->setLearnrateSubtractionValueAfterEverySample ( m_learnrateSubtractionValueAfterEverySample );
            m_nn[i]->setLearnrateSubtractionValueAfterEveryEpoch ( m_learnrateSubtractionValueAfterEveryEpoch );
            m_nn[i]->setMomentum ( m_momentum );
            m_nn[i]->setWeightDecay ( m_weightDecay );
            m_nn[i]->setMinUpdateErrorBound ( m_minUpdateErrorBound );
            m_nn[i]->setBatchSize ( m_batchSize );
            m_nn[i]->setMaxEpochs ( m_maxTuninigEpochs );

            // set net inner structure
            int nrLayer = m_nrLayer;
            int* neuronsPerLayer = Data::splitStringToIntegerList ( m_neuronsPerLayer, ',' );
            m_nn[i]->enableRPROP ( m_enableRPROP );
            m_nn[i]->setNNStructure ( nrLayer, neuronsPerLayer );
            m_nn[i]->setScaleOffset ( m_scaleOutputs, m_offsetOutputs );
            m_nn[i]->setRPROPPosNeg ( m_etaPosRPROP, m_etaNegRPROP );
            m_nn[i]->setRPROPMinMaxUpdate ( m_minUpdateRPROP, m_maxUpdateRPROP );
            m_nn[i]->setNormalTrainStopping ( true );
            m_nn[i]->useBLASforTraining ( m_useBLASforTraining );
            m_nn[i]->initNNWeights ( m_randSeed );
            delete[] neuronsPerLayer;  // array delete, as in loadWeights()

            cout<<endl<<endl;
        }

        // prediction of train set
        m_predictionCache = new REAL[m_nTrain*m_nClass*m_nDomain];
    }
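The "mean and std" step of `init()` standardizes every blender input column to zero mean and unit standard deviation (using the population standard deviation, dividing by the number of samples). A minimal sketch of that technique follows. The names `ColumnStats`, `computeStats` and `standardize` are hypothetical, and the guard against a zero standard deviation is an addition of this sketch: the listing above divides by `m_stdBlend` directly, which would produce non-finite values for a predictor column that is constant over the training set.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct ColumnStats
{
    std::vector<double> mean;
    std::vector<double> stddev;  // population standard deviation (divide by n)
};

// Per-column mean and standard deviation of a row-major sample matrix.
ColumnStats computeStats(const std::vector<std::vector<double>>& rows)
{
    assert(!rows.empty());
    const std::size_t nCols = rows[0].size();
    const double n = static_cast<double>(rows.size());

    ColumnStats s;
    s.mean.assign(nCols, 0.0);
    s.stddev.assign(nCols, 0.0);

    for (const std::vector<double>& r : rows)
        for (std::size_t c = 0; c < nCols; ++c)
            s.mean[c] += r[c];
    for (std::size_t c = 0; c < nCols; ++c)
        s.mean[c] /= n;

    for (const std::vector<double>& r : rows)
        for (std::size_t c = 0; c < nCols; ++c)
        {
            const double d = r[c] - s.mean[c];
            s.stddev[c] += d * d;
        }
    for (std::size_t c = 0; c < nCols; ++c)
        s.stddev[c] = std::sqrt(s.stddev[c] / n);
    return s;
}

// In-place standardization: x -> (x - mean) / stddev.
// Assumption of this sketch: a zero stddev is treated as 1 to avoid dividing by zero.
void standardize(std::vector<std::vector<double>>& rows, const ColumnStats& s)
{
    for (std::vector<double>& r : rows)
        for (std::size_t c = 0; c < r.size(); ++c)
        {
            const double sd = s.stddev[c] > 0.0 ? s.stddev[c] : 1.0;
            r[c] = (r[c] - s.mean[c]) / sd;
        }
}
```

The same statistics must be kept and applied to new inputs at prediction time, which is why the class stores them in `m_meanBlend` and `m_stdBlend` and writes them to the weight file.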

void BlendingNN::loadWeights ()

Load the saved weights and switch to ready-to-predict mode. Only the last network (index m_nCross) is created and used.

Definition at line 493 of file BlendingNN.cpp.

    void BlendingNN::loadWeights()
    {
        cout<<"Load weights"<<endl;

        // load weights
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile;
        cout<<"Load:"<<name<<endl;
        int n = 0;

        // open
        fstream f ( name.c_str(), ios::in );
        if ( f.is_open() == false )
            assert ( false );

        // read #predictions
        f.read ( ( char* ) &m_nPredictors, sizeof ( int ) );

        // read #class
        f.read ( ( char* ) &m_nClass, sizeof ( int ) );

        // read #domain
        f.read ( ( char* ) &m_nDomain, sizeof ( int ) );

        // set up NNs (only the last one is used)
        m_nn = new NN*[m_nCross+1];
        for ( int i=0;i<m_nCross+1;i++ )
            m_nn[i] = 0;
        m_nn[m_nCross] = new NN();
        m_nn[m_nCross]->setNrTargets ( m_nClass*m_nDomain );
        m_nn[m_nCross]->setNrInputs ( m_nPredictors*m_nClass*m_nDomain );
        m_nn[m_nCross]->setNrExamplesTrain ( 0 );
        m_nn[m_nCross]->setNrExamplesProbe ( 0 );
        m_nn[m_nCross]->setTrainInputs ( 0 );
        m_nn[m_nCross]->setTrainTargets ( 0 );
        m_nn[m_nCross]->setProbeInputs ( 0 );
        m_nn[m_nCross]->setProbeTargets ( 0 );

        // learn parameters
        m_nn[m_nCross]->setInitWeightFactor ( m_initWeightFactor );
        m_nn[m_nCross]->setLearnrate ( m_learnrate );
        m_nn[m_nCross]->setLearnrateMinimum ( m_learnrateMinimum );
        m_nn[m_nCross]->setLearnrateSubtractionValueAfterEverySample ( m_learnrateSubtractionValueAfterEverySample );
        m_nn[m_nCross]->setLearnrateSubtractionValueAfterEveryEpoch ( m_learnrateSubtractionValueAfterEveryEpoch );
        m_nn[m_nCross]->setMomentum ( m_momentum );
        m_nn[m_nCross]->setWeightDecay ( m_weightDecay );
        m_nn[m_nCross]->setMinUpdateErrorBound ( m_minUpdateErrorBound );
        m_nn[m_nCross]->setBatchSize ( m_batchSize );
        m_nn[m_nCross]->setMaxEpochs ( m_maxTuninigEpochs );

        // set net inner structure
        int nrLayer = m_nrLayer;
        int* neuronsPerLayer = Data::splitStringToIntegerList ( m_neuronsPerLayer, ',' );
        m_nn[m_nCross]->setNNStructure ( nrLayer, neuronsPerLayer );

        m_nn[m_nCross]->setRPROPPosNeg ( m_etaPosRPROP, m_etaNegRPROP );
        m_nn[m_nCross]->setRPROPMinMaxUpdate ( m_minUpdateRPROP, m_maxUpdateRPROP );
        m_nn[m_nCross]->setScaleOffset ( m_scaleOutputs, m_offsetOutputs );
        m_nn[m_nCross]->setNormalTrainStopping ( true );
        m_nn[m_nCross]->enableRPROP ( m_enableRPROP );
        m_nn[m_nCross]->useBLASforTraining ( m_useBLASforTraining );
        m_nn[m_nCross]->initNNWeights ( m_randSeed );
        delete[] neuronsPerLayer;

        // #weights
        f.read ( ( char* ) &n, sizeof ( int ) );

        REAL* w = new REAL[n];

        // read weights
        f.read ( ( char* ) w, sizeof ( REAL ) *n );

        // read mean, std
        m_meanBlend = new REAL[m_nPredictors*m_nClass*m_nDomain];
        m_stdBlend = new REAL[m_nPredictors*m_nClass*m_nDomain];
        f.read ( ( char* ) m_meanBlend, sizeof ( REAL ) *m_nPredictors*m_nClass*m_nDomain );
        f.read ( ( char* ) m_stdBlend, sizeof ( REAL ) *m_nPredictors*m_nClass*m_nDomain );

        f.close();

        // init the NN weights
        m_nn[m_nCross]->setWeights ( w );

        if ( w )
            delete[] w;
        w = 0;
    }

void BlendingNN::predictEnsembleOutput (REAL **predictions, REAL *output)

Make predictions based on the current blending neural net (including normalization).

Parameters:

- predictions: pointer to pointers of predictions; the first entry is a constant predictor and is skipped
- output: the output (return value)

Definition at line 586 of file BlendingNN.cpp.

    void BlendingNN::predictEnsembleOutput ( REAL** predictions, REAL* output )
    {
        REAL* tmp = new REAL[m_nPredictors*m_nClass*m_nDomain];
        for ( int i=0;i<m_nPredictors;i++ )
            for ( int j=0;j<m_nClass*m_nDomain;j++ )
                tmp[i*m_nClass*m_nDomain+j] = ( predictions[i+1][j] - m_meanBlend[i*m_nClass*m_nDomain+j] ) / m_stdBlend[i*m_nClass*m_nDomain+j];  // +1 because the first is constant
        m_nn[m_nCross]->predictSingleInput ( tmp, output );
        if ( m_enablePostBlendClipping )
            for ( int i=0;i<m_nClass*m_nDomain;i++ )
                output[i] = NumericalTools::clipValue ( output[i], m_negativeTarget, m_positiveTarget );
        if ( tmp )
            delete[] tmp;
        tmp = 0;
    }
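The input layout used here is easy to get wrong, so it may help to see it in isolation: the network input is the concatenation of all predictor outputs, predictor by predictor, and slot 0 of `predictions` is skipped. The sketch below reproduces only that flattening and normalization step. The function name `buildBlendInput`, the use of `std::vector`, and `float` standing in for `REAL` are assumptions of this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Build the normalized, flattened network input from per-predictor outputs.
// predictions[0] is the constant predictor and is ignored; predictions[i+1]
// holds the nOut outputs of predictor i. mean and stddev are laid out as
// [predictor][output], matching the flattened input.
std::vector<float> buildBlendInput(const std::vector<std::vector<float>>& predictions,
                                   const std::vector<float>& mean,
                                   const std::vector<float>& stddev,
                                   std::size_t nOut)
{
    assert(!predictions.empty());
    const std::size_t nPredictors = predictions.size() - 1;
    assert(mean.size() == nPredictors * nOut);
    assert(stddev.size() == mean.size());

    std::vector<float> input(nPredictors * nOut);
    for (std::size_t i = 0; i < nPredictors; ++i)
        for (std::size_t j = 0; j < nOut; ++j)
        {
            const std::size_t k = i * nOut + j;
            input[k] = (predictions[i + 1][j] - mean[k]) / stddev[k];
        }
    return input;
}
```

Here `nOut` corresponds to `m_nClass*m_nDomain`, and the returned vector has the `m_nPredictors*m_nClass*m_nDomain` entries that the network expects as input.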

void BlendingNN::readSpecificMaps ()

Read the algorithm-specific values from the description file.

Definition at line 93 of file BlendingNN.cpp.

    void BlendingNN::readSpecificMaps()
    {
        cout<<"Read specific maps"<<endl;

        // read dsc vars
        m_minTuninigEpochs = m_intMap["minTuninigEpochs"];
        m_maxTuninigEpochs = m_intMap["maxTuninigEpochs"];
        m_nrLayer = m_intMap["nrLayer"];
        m_batchSize = m_intMap["batchSize"];
        m_offsetOutputs = m_doubleMap["offsetOutputs"];
        m_scaleOutputs = m_doubleMap["scaleOutputs"];
        m_initWeightFactor = m_doubleMap["initWeightFactor"];
        m_learnrate = m_doubleMap["learnrate"];
        m_learnrateMinimum = m_doubleMap["learnrateMinimum"];
        m_learnrateSubtractionValueAfterEverySample = m_doubleMap["learnrateSubtractionValueAfterEverySample"];
        m_learnrateSubtractionValueAfterEveryEpoch = m_doubleMap["learnrateSubtractionValueAfterEveryEpoch"];
        m_momentum = m_doubleMap["momentum"];
        m_weightDecay = m_doubleMap["weightDecay"];
        m_minUpdateErrorBound = m_doubleMap["minUpdateErrorBound"];
        m_etaPosRPROP = m_doubleMap["etaPosRPROP"];
        m_etaNegRPROP = m_doubleMap["etaNegRPROP"];
        m_minUpdateRPROP = m_doubleMap["minUpdateRPROP"];
        m_maxUpdateRPROP = m_doubleMap["maxUpdateRPROP"];
        m_enableRPROP = m_boolMap["enableRPROP"];
        m_useBLASforTraining = m_boolMap["useBLASforTraining"];
        m_neuronsPerLayer = m_stringMap["neuronsPerLayer"];
        m_weightFile = m_stringMap["weightFile"];
    }
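If the parameter maps are `std::map` instances (an assumption; their declarations are not shown on this page), then `operator[]` silently inserts a value-initialized entry for a missing key, so a misspelled or missing description entry would read as 0, false or an empty string. A stricter lookup could be sketched as follows; `requireKey` is a hypothetical helper, not part of the framework.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

// Look up a key without inserting it; throw if the key is missing.
template <typename T>
const T& requireKey(const std::map<std::string, T>& m, const std::string& key)
{
    typename std::map<std::string, T>::const_iterator it = m.find(key);
    if (it == m.end())
        throw std::runtime_error("missing description key: " + key);
    return it->second;
}
```

A call such as `requireKey(intMap, "nrLayer")` returns the stored value, while a missing key raises an exception instead of yielding a default.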

void BlendingNN::saveBestPrediction () [virtual]

Save the number of epochs at which the error is minimal.

Implements AutomaticParameterTuner.

Definition at line 447 of file BlendingNN.cpp.

void BlendingNN::train ()

Start the training process. The training goal is to blend the current train predictions.

Definition at line 457 of file BlendingNN.cpp.

    void BlendingNN::train()
    {
        cout<<"Start train blending NN"<<endl;

        m_epochs = 0;
        addEpochParameter ( &m_epochs, "epoch" );

        cout<<"(min|max. epochs: "<<m_minTuninigEpochs<<"|"<<m_maxTuninigEpochs<<")"<<endl;
        expSearcher ( m_minTuninigEpochs, m_maxTuninigEpochs, 3, 1, 0.8, true, false );

        // do retraining
        m_nn[m_nCross]->setNormalTrainStopping ( false );
        m_nn[m_nCross]->setMaxEpochs ( m_epochsBest );
        int epochs = m_nn[m_nCross]->trainNN();

        // Save weights
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile;
        cout<<"Save:"<<name<<endl;
        int n = m_nn[m_nCross]->getNrWeights();
        cout<<"#weights:"<<n<<endl;
        REAL* w = m_nn[m_nCross]->getWeightPtr();

        fstream f ( name.c_str(), ios::out );
        f.write ( ( char* ) &m_nPredictors, sizeof ( int ) );
        f.write ( ( char* ) &m_nClass, sizeof ( int ) );
        f.write ( ( char* ) &m_nDomain, sizeof ( int ) );
        f.write ( ( char* ) &n, sizeof ( int ) );
        f.write ( ( char* ) w, sizeof ( REAL ) *n );
        f.write ( ( char* ) m_meanBlend, sizeof ( REAL ) *m_nPredictors*m_nClass*m_nDomain );
        f.write ( ( char* ) m_stdBlend, sizeof ( REAL ) *m_nPredictors*m_nClass*m_nDomain );
        f.close();
    }
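The weight file written here has a fixed layout that `loadWeights()` reads back in the same order: three `int` header fields (#predictors, #class, #domain), the weight count, the weights, then the mean and standard deviation arrays. A round trip over that layout can be sketched on generic streams as below. The names `BlendWeights`, `saveBlend` and `loadBlend` are hypothetical, and `float` stands in for `REAL`, whose actual type is not shown on this page. Because the streams are passed in, the caller chooses the open mode; for raw data like this, a file stream should be opened with `std::ios::binary`, since text mode translates newline bytes on some platforms.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

struct BlendWeights
{
    int nPredictors = 0, nClass = 0, nDomain = 0;
    std::vector<float> weights, mean, stddev;
};

static void writeRaw(std::ostream& out, const void* p, std::size_t bytes)
{
    out.write(static_cast<const char*>(p), static_cast<std::streamsize>(bytes));
}

static void readRaw(std::istream& in, void* p, std::size_t bytes)
{
    in.read(static_cast<char*>(p), static_cast<std::streamsize>(bytes));
}

// Write header, weight count, weights, mean and stddev in that order.
void saveBlend(std::ostream& out, const BlendWeights& b)
{
    const std::size_t m = static_cast<std::size_t>(b.nPredictors) * b.nClass * b.nDomain;
    assert(b.mean.size() == m && b.stddev.size() == m);
    const int n = static_cast<int>(b.weights.size());
    writeRaw(out, &b.nPredictors, sizeof(int));
    writeRaw(out, &b.nClass, sizeof(int));
    writeRaw(out, &b.nDomain, sizeof(int));
    writeRaw(out, &n, sizeof(int));
    writeRaw(out, b.weights.data(), sizeof(float) * b.weights.size());
    writeRaw(out, b.mean.data(), sizeof(float) * m);
    writeRaw(out, b.stddev.data(), sizeof(float) * m);
}

// Read the same layout back; returns false on a short or invalid file.
bool loadBlend(std::istream& in, BlendWeights& b)
{
    int n = 0;
    readRaw(in, &b.nPredictors, sizeof(int));
    readRaw(in, &b.nClass, sizeof(int));
    readRaw(in, &b.nDomain, sizeof(int));
    readRaw(in, &n, sizeof(int));
    if (!in || n < 0 || b.nPredictors < 0 || b.nClass < 0 || b.nDomain < 0)
        return false;
    const std::size_t m = static_cast<std::size_t>(b.nPredictors) * b.nClass * b.nDomain;
    b.weights.resize(static_cast<std::size_t>(n));
    b.mean.resize(m);
    b.stddev.resize(m);
    readRaw(in, b.weights.data(), sizeof(float) * b.weights.size());
    readRaw(in, b.mean.data(), sizeof(float) * m);
    readRaw(in, b.stddev.data(), sizeof(float) * m);
    return static_cast<bool>(in);
}
```

The format stores raw `int` and floating-point bytes, so a file is only portable between builds that agree on the size of `REAL` and on byte order.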

The documentation for this class was generated from the following files:

- ELF/BlendingNN.h

- ELF/BlendingNN.cpp

1.5.8