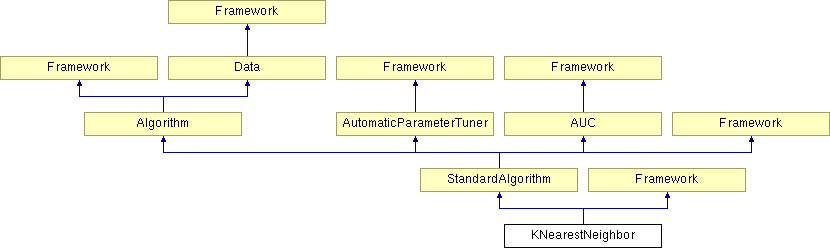

KNearestNeighbor Class Reference

#include <KNearestNeighbor.h>

Public Member Functions | |

| KNearestNeighbor () | |

| ~KNearestNeighbor () | |

| virtual void | modelInit () |

| virtual void | modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) |

| virtual void | predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) |

| virtual void | readSpecificMaps () |

| virtual void | saveWeights (int cross) |

| virtual void | loadWeights (int cross) |

| virtual void | loadMetaWeights (int cross) |

Static Public Member Functions | |

| static string | templateGenerator (int id, string preEffect, int nameID, bool blendStop) |

Private Attributes | |

| REAL ** | m_targetActualPtr |

| int * | m_lengthActual |

| bool | m_isLoadWeights |

| REAL ** | m_xNormalized |

| REAL * | m_allCorrelations |

| REAL * | m_allCorrelationsTransposed |

| REAL * | m_predictionAllOutputs |

| REAL * | m_predictionAllOutputsAllTargets |

| double | m_scale |

| double | m_scale2 |

| double | m_offset |

| double | m_globalOffset |

| double | m_power |

| int | m_k |

| double | m_euclideanPower |

| double | m_euclideanLimit |

| int | m_evaluationBlockSize |

| bool | m_enableAdvancedKNN |

| bool | m_dataDenormalization |

| bool | m_takeEuclideanDistance |

| bool | m_optimizeEuclideanPower |

Detailed Description

k-nearest neighbor model for real-valued prediction based on a distance measure in feature spaceThe class is an online k-NN prediction Algorithm This means that no precalcuated distances are stored

Pearson correlations is a matrix-matrix product (heavy load on processor with MKL) Euclidean distance is a matrix-matrix product (heavy load on processor with MKL)

Tunable parameters are the neighborhood size k and some other optional params

Definition at line 22 of file KNearestNeighbor.h.

Constructor & Destructor Documentation

| KNearestNeighbor::KNearestNeighbor | ( | ) |

Constructor

Definition at line 8 of file KNearestNeighbor.cpp.

00009 { 00010 cout<<"KNearestNeighbor"<<endl; 00011 // init member vars 00012 m_targetActualPtr = 0; 00013 m_lengthActual = 0; 00014 m_isLoadWeights = 0; 00015 m_xNormalized = 0; 00016 m_predictionAllOutputs = 0; 00017 m_predictionAllOutputsAllTargets = 0; 00018 m_scale = 0; 00019 m_scale2 = 0; 00020 m_offset = 0; 00021 m_globalOffset = 0; 00022 m_power = 0; 00023 m_k = 0; 00024 m_euclideanPower = 1.0; 00025 m_euclideanLimit = 0.01; 00026 m_evaluationBlockSize = 0; 00027 m_enableAdvancedKNN = 0; 00028 m_dataDenormalization = 0; 00029 m_takeEuclideanDistance = 0; 00030 m_optimizeEuclideanPower = 0; 00031 }

| KNearestNeighbor::~KNearestNeighbor | ( | ) |

Destructor

Definition at line 36 of file KNearestNeighbor.cpp.

00037 { 00038 cout<<"descructor KNearestNeighbor"<<endl; 00039 00040 if ( m_xNormalized ) 00041 { 00042 for ( int i=0;i<m_nCross+1;i++ ) 00043 if ( m_xNormalized[i] ) 00044 delete[] m_xNormalized[i]; 00045 delete[] m_xNormalized; 00046 } 00047 m_xNormalized = 0; 00048 if ( m_targetActualPtr ) 00049 delete[] m_targetActualPtr; 00050 m_targetActualPtr = 0; 00051 if ( m_lengthActual ) 00052 delete[] m_lengthActual; 00053 m_lengthActual = 0; 00054 }

Member Function Documentation

| void KNearestNeighbor::loadWeights | ( | int | cross | ) | [virtual] |

Load the weights and all other parameters and make the model ready to predict

Implements StandardAlgorithm.

Definition at line 536 of file KNearestNeighbor.cpp.

00537 { 00538 char buf[1024]; 00539 sprintf ( buf,"%02d",cross ); 00540 string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf; 00541 cout<<"Load:"<<name<<endl; 00542 fstream f ( name.c_str(), ios::in ); 00543 if ( f.is_open() == false ) 00544 assert ( false ); 00545 f.read ( ( char* ) &m_nTrain, sizeof ( int ) ); 00546 f.read ( ( char* ) &m_nFeatures, sizeof ( int ) ); 00547 f.read ( ( char* ) &m_nClass, sizeof ( int ) ); 00548 f.read ( ( char* ) &m_nDomain, sizeof ( int ) ); 00549 00550 m_xNormalized = new REAL*[m_nCross+1]; 00551 m_targetActualPtr = new REAL*[m_nCross+1]; 00552 m_lengthActual = new int[m_nCross+1]; 00553 for ( int i=0;i<m_nCross+1;i++ ) 00554 { 00555 m_xNormalized[i] = 0; 00556 m_targetActualPtr[i] = 0; 00557 m_lengthActual[i] = 0; 00558 } 00559 00560 f.read ( ( char* ) &m_lengthActual[cross], sizeof ( int ) ); 00561 00562 // the train prototype matrix 00563 m_xNormalized[cross] = new REAL[m_lengthActual[cross]*m_nFeatures]; 00564 m_targetActualPtr[cross] = new REAL[m_lengthActual[cross]*m_nClass*m_nDomain]; 00565 00566 f.read ( ( char* ) m_xNormalized[cross], sizeof ( REAL ) *m_lengthActual[cross]*m_nFeatures ); 00567 f.read ( ( char* ) m_targetActualPtr[cross], sizeof ( REAL ) *m_lengthActual[cross]*m_nClass*m_nDomain ); 00568 f.read ( ( char* ) &m_scale, sizeof ( double ) ); 00569 f.read ( ( char* ) &m_scale2, sizeof ( double ) ); 00570 f.read ( ( char* ) &m_offset, sizeof ( double ) ); 00571 f.read ( ( char* ) &m_globalOffset, sizeof ( double ) ); 00572 f.read ( ( char* ) &m_k, sizeof ( int ) ); 00573 f.read ( ( char* ) &m_euclideanPower, sizeof ( double ) ); 00574 f.read ( ( char* ) &m_euclideanLimit, sizeof ( double ) ); 00575 f.read ( ( char* ) &m_maxSwing, sizeof ( double ) ); 00576 f.close(); 00577 00578 m_isLoadWeights = 1; 00579 }

| void KNearestNeighbor::modelInit | ( | ) | [virtual] |

Init the KNN model

Implements StandardAlgorithm.

Definition at line 84 of file KNearestNeighbor.cpp.

00085 { 00086 // init / add tunable parameters 00087 00088 // if advanced KNN is used 00089 if ( m_enableAdvancedKNN ) 00090 { 00091 paramDoubleValues.push_back ( &m_globalOffset ); 00092 paramDoubleNames.push_back ( "globalOffset" ); 00093 paramDoubleValues.push_back ( &m_scale ); 00094 paramDoubleNames.push_back ( "scale" ); 00095 paramDoubleValues.push_back ( &m_scale2 ); 00096 paramDoubleNames.push_back ( "scale2" ); 00097 paramDoubleValues.push_back ( &m_offset ); 00098 paramDoubleNames.push_back ( "offset" ); 00099 paramDoubleValues.push_back ( &m_power ); 00100 paramDoubleNames.push_back ( "power" ); 00101 } 00102 00103 // standard value k in k-NN, k-best neighbors 00104 paramIntValues.push_back ( &m_k ); 00105 paramIntNames.push_back ( "k" ); 00106 00107 if ( m_takeEuclideanDistance && m_optimizeEuclideanPower ) 00108 { 00109 paramDoubleValues.push_back ( &m_euclideanPower ); 00110 paramDoubleNames.push_back ( "euclideanPower" ); 00111 paramDoubleValues.push_back ( &m_euclideanLimit ); 00112 paramDoubleNames.push_back ( "euclideanLimit" ); 00113 } 00114 00115 // init pointers 00116 if ( m_xNormalized == 0 ) 00117 { 00118 m_xNormalized = new REAL*[m_nCross+1]; 00119 m_targetActualPtr = new REAL*[m_nCross+1]; 00120 m_lengthActual = new int[m_nCross+1]; 00121 for ( int i=0;i<m_nCross+1;i++ ) 00122 { 00123 m_xNormalized[i] = 0; 00124 m_targetActualPtr[i] = 0; 00125 m_lengthActual[i] = m_trainSize[i]; 00126 } 00127 } 00128 }

| void KNearestNeighbor::modelUpdate | ( | REAL * | input, | |

| REAL * | target, | |||

| uint | nSamples, | |||

| uint | crossRun | |||

| ) | [virtual] |

Make a model update, set the new cross validation set or set the whole training set for retraining

KNN prediction model has no training phase KNN finds prototypes in the train dataset based on a similarity measure

Here, pearson correlation are used as distance measure This is equivalent to dot products of z-scores

- Parameters:

-

input Pointer to input (can be cross validation set, or whole training set) (rows x nFeatures) target The targets (can be cross validation targets) nSamples The sample size (rows) in input crossRun The cross validation run (for training)

Implements StandardAlgorithm.

Definition at line 452 of file KNearestNeighbor.cpp.

00453 { 00454 m_targetActualPtr[crossRun] = target; 00455 m_lengthActual[crossRun] = nSamples; 00456 00457 // allocate new memory 00458 if ( m_xNormalized[crossRun] == 0 ) 00459 m_xNormalized[crossRun] = new REAL[nSamples*m_nFeatures]; 00460 00461 // calculate z-score for current train set 00462 for ( int i=0;i<nSamples;i++ ) 00463 { 00464 REAL* ptr0 = m_xNormalized[crossRun] + i*m_nFeatures; 00465 REAL* ptr1 = input + i*m_nFeatures; 00466 00467 // mean, std 00468 REAL mean = 0.0, std = 0.0, denormalized; 00469 for ( int j=0;j<m_nFeatures;j++ ) 00470 { 00471 denormalized = ptr1[j]; 00472 if ( m_dataDenormalization ) 00473 denormalized = denormalized * m_std[j] + m_mean[j]; 00474 mean += denormalized; 00475 } 00476 mean = mean / ( REAL ) m_nFeatures; 00477 if ( m_takeEuclideanDistance ) 00478 mean = 0.0; 00479 for ( int j=0;j<m_nFeatures;j++ ) 00480 { 00481 denormalized = ptr1[j]; 00482 if ( m_dataDenormalization ) 00483 denormalized = denormalized * m_std[j] + m_mean[j]; 00484 std += ( denormalized - mean ) * ( denormalized - mean ); 00485 } 00486 if ( std == 0.0 ) 00487 std = 1.0; 00488 else 00489 std = sqrt ( std / ( REAL ) ( m_nFeatures-1 ) ); 00490 if ( m_takeEuclideanDistance ) 00491 std = 1.0; 00492 for ( int j=0;j<m_nFeatures;j++ ) 00493 { 00494 denormalized = ptr1[j]; 00495 if ( m_dataDenormalization ) 00496 denormalized = denormalized * m_std[j] + m_mean[j]; 00497 ptr0[j] = ( denormalized - mean ) / std; 00498 } 00499 } 00500 }

| void KNearestNeighbor::predictAllOutputs | ( | REAL * | rawInputs, | |

| REAL * | outputs, | |||

| uint | nSamples, | |||

| uint | crossRun | |||

| ) | [virtual] |

Prediction for outside use, predicts outputs based on raw input values

- Parameters:

-

rawInputs The input feature, without normalization (raw) outputs The output value (prediction of target) nSamples The input size (number of rows) crossRun Number of cross validation run (in training)

Implements StandardAlgorithm.

Definition at line 138 of file KNearestNeighbor.cpp.

00139 { 00140 int lengthActual = m_lengthActual[crossRun]; 00141 REAL* targetActualPtr = m_targetActualPtr[crossRun]; 00142 00143 // init outputs 00144 for ( int i=0;i<nSamples;i++ ) 00145 for ( int j=0;j<m_nClass*m_nDomain;j++ ) 00146 outputs[i*m_nClass*m_nDomain + j] = 0.0; 00147 00148 REAL* allCorrelations = new REAL[m_evaluationBlockSize * lengthActual]; 00149 REAL* allCorrelationsTransposed = new REAL[m_evaluationBlockSize * lengthActual]; 00150 REAL* euclTmp = new REAL[m_nFeatures]; 00151 REAL* squaredSumInputs = new REAL[m_evaluationBlockSize]; 00152 REAL* squaredSumData = new REAL[lengthActual]; 00153 00154 // divide the prediction into blocks 00155 int sampleOffset = 0; 00156 bool breakLoop = true; 00157 while ( breakLoop ) 00158 { 00159 int blockSize = nSamples - sampleOffset; 00160 if ( blockSize >= m_evaluationBlockSize ) 00161 blockSize = m_evaluationBlockSize; 00162 if ( sampleOffset + blockSize == nSamples ) 00163 breakLoop = false; 00164 00165 // z-score of input 00166 REAL* zScores = new REAL[blockSize*m_nFeatures]; 00167 for ( int i=0;i<blockSize;i++ ) 00168 { 00169 REAL* ptr0 = zScores + i*m_nFeatures; 00170 REAL* ptr1 = rawInputs + ( i+sampleOffset ) *m_nFeatures; 00171 00172 REAL mean = 0.0, std = 0.0, denormalized; 00173 for ( int j=0;j<m_nFeatures;j++ ) 00174 { 00175 denormalized = ptr1[j]; 00176 if ( m_dataDenormalization ) 00177 denormalized = denormalized * m_std[j] + m_mean[j]; 00178 mean += denormalized; 00179 } 00180 mean /= ( REAL ) m_nFeatures; 00181 if ( m_takeEuclideanDistance ) 00182 mean = 0.0; 00183 for ( int j=0;j<m_nFeatures;j++ ) 00184 { 00185 denormalized = ptr1[j]; 00186 if ( m_dataDenormalization ) 00187 denormalized = denormalized * m_std[j] + m_mean[j]; 00188 std += ( denormalized - mean ) * ( denormalized - mean ); 00189 } 00190 std = sqrt ( std / ( REAL ) ( m_nFeatures-1 ) ); 00191 if ( m_takeEuclideanDistance ) 00192 std = 1.0; 00193 for ( int j=0;j<m_nFeatures;j++ ) 00194 { 00195 denormalized = ptr1[j]; 00196 if ( m_dataDenormalization ) 00197 denormalized = denormalized * m_std[j] + m_mean[j]; 00198 ptr0[j] = ( denormalized - mean ) / std; 00199 } 00200 } 00201 00202 // calc all euclidean distances 00203 if ( m_takeEuclideanDistance ) 00204 { 00205 // this code is slower 00206 /*for(int i=0;i<blockSize;i++) 00207 { 00208 REAL* corrPtr = allCorrelationsTransposed + i*lengthActual; 00209 for(int j=0;j<lengthActual;j++) 00210 { 00211 REAL eucl = 0.0; 00212 REAL* ptr0 = m_xNormalized[crossRun] + j*m_nFeatures; // data 00213 REAL* ptr1 = zScores + i*m_nFeatures; // inputs 00214 for(int k=0;k<m_nFeatures;k++) 00215 eucl += (ptr0[k]-ptr1[k])*(ptr0[k]-ptr1[k]); 00216 //corrPtr[j] = 1.0 / (sqrt(eucl) + 1e-9); // <-- THIS IS ORIGINAL 00217 corrPtr[j] = eucl; 00218 } 00219 for(int j=0;j<lengthActual;j++) 00220 corrPtr[j] += 1e-9; 00221 V_POWC(lengthActual, corrPtr, m_euclideanPower, corrPtr); // power=-0.5 results in: 1/sqrt(eucl) 00222 } 00223 */ 00224 00225 // speedup calculation by using BLAS in x'y: ||x-y||^2 = sum(x.^2) - 2 * x'y + sum(y.^2) 00226 for ( uint i=0;i<blockSize;i++ ) 00227 { 00228 double sum = 0.0; 00229 REAL *ptr = zScores + i*m_nFeatures; 00230 for ( uint k=0;k<m_nFeatures;k++ ) 00231 sum += ptr[k] * ptr[k]; 00232 squaredSumInputs[i] = sum; // inputs feature squared sum 00233 } 00234 for ( uint i=0;i<lengthActual;i++ ) 00235 { 00236 double sum = 0.0; 00237 REAL *ptr = m_xNormalized[crossRun] + i*m_nFeatures; 00238 for ( uint k=0;k<m_nFeatures;k++ ) 00239 sum += ptr[k] * ptr[k]; 00240 squaredSumData[i] = sum; // data feature squared sun 00241 } 00242 00243 // allCorrelations = zScores * m_xNormalized[crossRun]' 00244 CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, lengthActual, blockSize, m_nFeatures, 1.0, m_xNormalized[crossRun], m_nFeatures, zScores, m_nFeatures, 0.0, allCorrelations, blockSize ); 00245 00246 // transpose to get linear column access 00247 // searching is much faster with linear access: ptr[j] 00248 for ( int i=0;i<lengthActual;i++ ) 00249 for ( int j=0;j<blockSize;j++ ) 00250 allCorrelationsTransposed[i + j*lengthActual] = allCorrelations[i*blockSize + j]; 00251 00252 for ( int i=0;i<blockSize;i++ ) 00253 { 00254 //if(m_evaluationBlockSize < blockSize) 00255 //{ 00256 // cout<<"m_block:"<<m_evaluationBlockSize<<" blockSize:"<<blockSize<<endl; 00257 // assert(false); 00258 //} 00259 REAL* corrPtr = allCorrelationsTransposed + i*lengthActual; 00260 REAL sqSum = squaredSumInputs[i]; 00261 00262 for ( int j=0;j<lengthActual;j++ ) 00263 { 00264 corrPtr[j] = squaredSumData[j] + sqSum - 2.0 * corrPtr[j]; 00265 //corrPtr[j] = corrPtr[j]*corrPtr[j] + m_euclideanLimit; 00266 } 00267 } 00268 00269 V_SQRT ( blockSize*lengthActual, allCorrelationsTransposed, allCorrelationsTransposed ); 00270 for ( uint i=0;i<blockSize*lengthActual;i++ ) 00271 allCorrelationsTransposed[i] += m_euclideanLimit; 00272 V_INV ( blockSize*lengthActual, allCorrelationsTransposed, allCorrelationsTransposed ); 00273 V_POWC ( blockSize*lengthActual, allCorrelationsTransposed, m_euclideanPower, allCorrelationsTransposed ); 00274 } 00275 else 00276 { 00277 // calc exact pearson: product of z-scores 00278 // this is a matrix-matrix multiplication and therefore fast 00279 CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, lengthActual, blockSize, m_nFeatures, 1.0, m_xNormalized[crossRun], m_nFeatures, zScores, m_nFeatures, 0.0, allCorrelations, blockSize ); 00280 00281 REAL scale = 1.0 / ( REAL ) ( m_nFeatures ); 00282 V_MULC ( allCorrelations, scale, allCorrelations, blockSize * lengthActual ); 00283 IPPS_THRESHOLD ( allCorrelations, allCorrelations, blockSize * lengthActual, -1.0, ippCmpLess ); // clip negative 00284 IPPS_THRESHOLD ( allCorrelations, allCorrelations, blockSize * lengthActual, +1.0, ippCmpGreater ); // clip positive 00285 00286 // transpose to get linear column access 00287 // searching is much faster with linear access: ptr[j] 00288 for ( int i=0;i<lengthActual;i++ ) 00289 for ( int j=0;j<blockSize;j++ ) 00290 allCorrelationsTransposed[i + j*lengthActual] = allCorrelations[i*blockSize + j]; 00291 } 00292 00293 int* indexKBest = new int[m_k]; 00294 REAL* valueKBest = new REAL[m_k]; 00295 00296 // per input make a k-NN prediction 00297 for ( int i=0;i<blockSize;i++ ) 00298 { 00299 REAL* predictionPtr = outputs + sampleOffset*m_nClass*m_nDomain + i*m_nClass*m_nDomain; 00300 REAL* corrPtr = allCorrelationsTransposed + i*lengthActual; 00301 00302 REAL worstValue = 1e10; 00303 int worstIdx = -1; 00304 int topKLength = 0; 00305 00306 // find k-best (largest) correlations 00307 for ( int j=0;j<lengthActual;j++ ) 00308 { 00309 REAL c = corrPtr[j]; 00310 00311 // initially, fill top-k list 00312 if ( topKLength < m_k ) 00313 { 00314 indexKBest[topKLength] = j; 00315 valueKBest[topKLength] = c; 00316 if ( worstValue > c ) 00317 { 00318 worstValue = c; 00319 worstIdx = topKLength; 00320 } 00321 topKLength++; 00322 } 00323 else if ( c > worstValue ) // check for candidate 00324 { 00325 indexKBest[worstIdx] = j; 00326 valueKBest[worstIdx] = c; 00327 00328 // find the new worst value 00329 worstIdx = -1; 00330 worstValue = 1e10; 00331 for ( int k=0;k<m_k;k++ ) 00332 if ( worstValue > valueKBest[k] ) 00333 { 00334 worstValue = valueKBest[k]; 00335 worstIdx = k; 00336 } 00337 } 00338 } 00339 00340 // calculate k-nn: sum(ci * vi) / sum(|ci|) 00341 for ( int j=0;j<m_nClass*m_nDomain;j++ ) 00342 { 00343 REAL sumCV = 0.0, sumC = 0.0, v; 00344 for ( int k=0;k<topKLength;k++ ) 00345 { 00346 int idx = indexKBest[k]; 00347 REAL c = corrPtr[idx]; // * (m_takeEuclideanDistance? -1.0 : 1.0); 00348 if ( c > 1e6 && m_takeEuclideanDistance ) // reject train samples 00349 continue; 00350 REAL v = targetActualPtr[j + idx*m_nClass*m_nDomain]; 00351 00352 // sigmoid mapping function 00353 if ( m_enableAdvancedKNN ) 00354 { 00355 REAL sign = c>0.0? 1.0 : -1.0; 00356 c = pow ( fabs ( c ), ( float ) m_power ) * sign; 00357 c = m_scale2 * 1.0 / ( 1.0 + exp ( - ( m_scale * c - m_offset ) ) ) + m_globalOffset; 00358 } 00359 00360 sumCV += c * v; 00361 sumC += fabs ( c ); 00362 } 00363 v = sumCV / sumC; 00364 if ( isnan ( v ) || isinf ( v ) ) 00365 v = 0.0; 00366 predictionPtr[j] = v; 00367 } 00368 00369 /* 00370 // OLD (slower search of nearest neighbors) 00371 // calculate k-nn: sum(ci * vi) / sum(|ci|) 00372 REAL* predictionPtr = outputs + sampleOffset*m_nClass*m_nDomain + i*m_nClass*m_nDomain; 00373 REAL sumC = 0.0; 00374 int k = 0; 00375 while(k<m_k) 00376 { 00377 // find max. correlation 00378 int indMax = -1; 00379 REAL max = -1e10; 00380 REAL* corrPtr = allCorrelationsTransposed + i*lengthActual; 00381 for(int j=0;j<lengthActual;j++) 00382 { 00383 if(max < corrPtr[j]) 00384 { 00385 max = corrPtr[j]; 00386 indMax = j; 00387 } 00388 } 00389 00390 if(indMax == -1) 00391 break; 00392 00393 // sigmoid mapping function 00394 if(m_enableAdvancedKNN) 00395 { 00396 REAL sign = max>0.0? 1.0 : -1.0; 00397 max = pow(fabs(max), (float)m_power) * sign; 00398 max = m_scale2 * 1.0 / (1.0 + exp(- (m_scale * max - m_offset) ) ) + m_globalOffset; 00399 } 00400 00401 REAL sign = 1.0; 00402 //if(m_takeEuclideanDistance) 00403 // sign = -1.0; 00404 for(int j=0;j<m_nClass*m_nDomain;j++) 00405 predictionPtr[j] += max * targetActualPtr[j + indMax*m_nClass*m_nDomain] * sign; 00406 00407 // clear best 00408 corrPtr[indMax] = -1e10; 00409 00410 // denomiator: sum of |correlation| 00411 sumC += fabs(max); 00412 k++; 00413 } 00414 if(sumC > 1e-6) 00415 for(int j=0;j<m_nClass*m_nDomain;j++) 00416 predictionPtr[j] /= sumC; 00417 */ 00418 } 00419 00420 sampleOffset += blockSize; 00421 00422 delete[] indexKBest; 00423 delete[] valueKBest; 00424 00425 if ( zScores ) 00426 delete[] zScores; 00427 zScores = 0; 00428 } 00429 00430 delete[] allCorrelations; 00431 delete[] allCorrelationsTransposed; 00432 delete[] euclTmp; 00433 delete[] squaredSumInputs; 00434 delete[] squaredSumData; 00435 }

| void KNearestNeighbor::readSpecificMaps | ( | ) | [virtual] |

Read the Algorithm specific values from the description file

Implements StandardAlgorithm.

Definition at line 60 of file KNearestNeighbor.cpp.

00061 { 00062 cout<<"Read specific maps"<<endl; 00063 00064 // read dsc vars 00065 m_evaluationBlockSize = m_intMap["evaluationBlockSize"]; 00066 m_k = m_intMap["kInit"]; 00067 m_euclideanPower = m_doubleMap["euclideanPower"]; 00068 m_euclideanLimit = m_doubleMap["euclideanLimit"]; 00069 m_enableAdvancedKNN = m_boolMap["enableAdvancedKNN"]; 00070 m_takeEuclideanDistance = m_boolMap["takeEuclideanDistance"]; 00071 m_dataDenormalization = m_boolMap["dataDenormalization"]; 00072 m_optimizeEuclideanPower = m_boolMap["optimizeEuclideanPower"]; 00073 m_scale = m_doubleMap["initScale"]; 00074 m_scale2 = m_doubleMap["initScale2"]; 00075 m_offset = m_doubleMap["initOffset"]; 00076 m_globalOffset = m_doubleMap["initGlobalOffset"]; 00077 m_power = m_doubleMap["initPower"]; 00078 }

| void KNearestNeighbor::saveWeights | ( | int | cross | ) | [virtual] |

Save the weights and all other parameters for loading the complete prediction model

Implements StandardAlgorithm.

Definition at line 506 of file KNearestNeighbor.cpp.

00507 { 00508 char buf[1024]; 00509 sprintf ( buf,"%02d",cross ); 00510 string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf; 00511 if ( m_inRetraining ) 00512 cout<<"Save:"<<name<<endl; 00513 fstream f ( name.c_str(), ios::out ); 00514 f.write ( ( char* ) &m_nTrain, sizeof ( int ) ); 00515 f.write ( ( char* ) &m_nFeatures, sizeof ( int ) ); 00516 f.write ( ( char* ) &m_nClass, sizeof ( int ) ); 00517 f.write ( ( char* ) &m_nDomain, sizeof ( int ) ); 00518 f.write ( ( char* ) &m_lengthActual[cross], sizeof ( int ) ); 00519 f.write ( ( char* ) m_xNormalized[cross], sizeof ( REAL ) *m_lengthActual[cross]*m_nFeatures ); 00520 f.write ( ( char* ) m_targetActualPtr[cross], sizeof ( REAL ) *m_lengthActual[cross]*m_nClass*m_nDomain ); 00521 f.write ( ( char* ) &m_scale, sizeof ( double ) ); 00522 f.write ( ( char* ) &m_scale2, sizeof ( double ) ); 00523 f.write ( ( char* ) &m_offset, sizeof ( double ) ); 00524 f.write ( ( char* ) &m_globalOffset, sizeof ( double ) ); 00525 f.write ( ( char* ) &m_k, sizeof ( int ) ); 00526 f.write ( ( char* ) &m_euclideanPower, sizeof ( double ) ); 00527 f.write ( ( char* ) &m_euclideanLimit, sizeof ( double ) ); 00528 f.write ( ( char* ) &m_maxSwing, sizeof ( double ) ); 00529 f.close(); 00530 }

| string KNearestNeighbor::templateGenerator | ( | int | id, | |

| string | preEffect, | |||

| int | nameID, | |||

| bool | blendStop | |||

| ) | [static] |

Generates a template of the description file

- Returns:

- The template string

Definition at line 623 of file KNearestNeighbor.cpp.

00624 { 00625 stringstream s; 00626 s<<"ALGORITHM=KNearestNeighbor"<<endl; 00627 s<<"ID="<<id<<endl; 00628 s<<"TRAIN_ON_FULLPREDICTOR="<<preEffect<<endl; 00629 s<<"DISABLE=0"<<endl; 00630 s<<endl; 00631 s<<"[int]"<<endl; 00632 s<<"kInit=10"<<endl; 00633 s<<"maxTuninigEpochs=10"<<endl; 00634 s<<"evaluationBlockSize=1000"<<endl; 00635 s<<endl; 00636 s<<"[double]"<<endl; 00637 s<<"initMaxSwing=1.0"<<endl; 00638 s<<"initScale=5.0"<<endl; 00639 s<<"initScale2=1.0"<<endl; 00640 s<<"initOffset=2.0"<<endl; 00641 s<<"initGlobalOffset=-0.05"<<endl; 00642 s<<"initPower=1.0"<<endl; 00643 s<<"euclideanPower=1.0"<<endl; 00644 s<<"euclideanLimit=0.01"<<endl; 00645 s<<endl; 00646 s<<"[bool]"<<endl; 00647 s<<"takeEuclideanDistance=1"<<endl; 00648 s<<"dataDenormalization=0"<<endl; 00649 s<<"optimizeEuclideanPower=0"<<endl; 00650 s<<endl; 00651 s<<"enableAdvancedKNN=0"<<endl; 00652 s<<endl; 00653 s<<"enableClipping=1"<<endl; 00654 s<<"enableTuneSwing=0"<<endl; 00655 s<<endl; 00656 s<<"minimzeProbe="<< ( !blendStop ) <<endl; 00657 s<<"minimzeProbeClassificationError=0"<<endl; 00658 s<<"minimzeBlend="<<blendStop<<endl; 00659 s<<"minimzeBlendClassificationError=0"<<endl; 00660 s<<endl; 00661 s<<"[string]"<<endl; 00662 s<<"weightFile=KNearestNeighbor_"<<nameID<<"_weights.dat"<<endl; 00663 s<<"fullPrediction=KNearestNeighbor_"<<nameID<<".dat"<<endl; 00664 00665 return s.str(); 00666 }

The documentation for this class was generated from the following files:

1.5.8

1.5.8