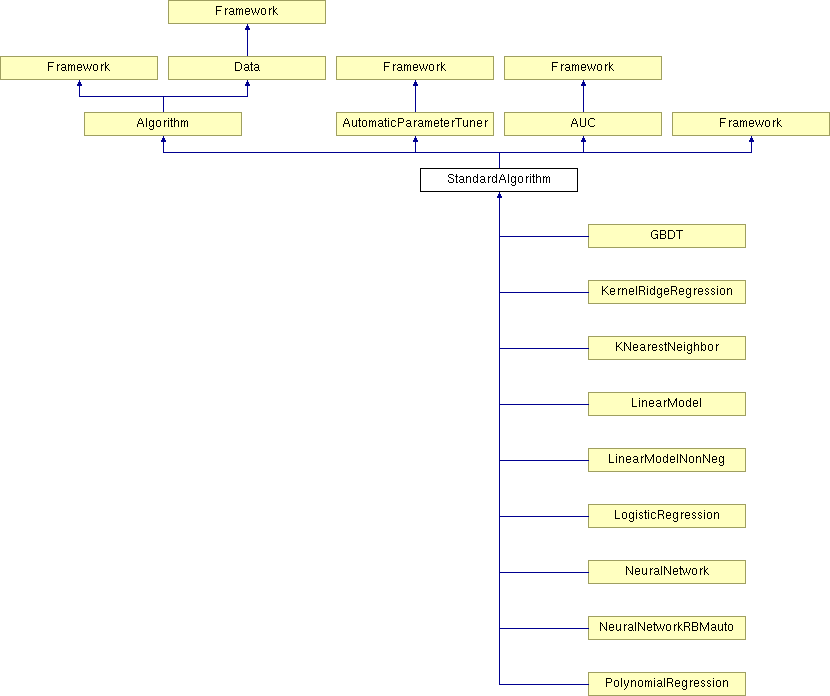

StandardAlgorithm Class Reference

#include <StandardAlgorithm.h>

Public Member Functions

| StandardAlgorithm () | |

| ~StandardAlgorithm () | |

| virtual double | calcRMSEonProbe () |

| virtual double | calcRMSEonBlend () |

| void | saveBestPrediction () |

| virtual void | setPredictionMode (int cross) |

| virtual double | train () |

| virtual void | predictMultipleOutputs (REAL *rawInput, REAL *effect, REAL *output, int *label, int nSamples, int crossRun) |

| virtual void | modelInit ()=0 |

| virtual void | modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun)=0 |

| virtual void | predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun)=0 |

| virtual void | readSpecificMaps ()=0 |

| virtual void | saveWeights (int cross)=0 |

| virtual void | loadWeights (int cross)=0 |

| virtual void | loadMetaWeights (int cross)=0 |

Protected Member Functions

| void | init () |

| void | readMaps () |

| void | calculateFullPrediction () |

| void | writeFullPrediction (int nSamples) |

Protected Attributes

| BlendStopping * | m_blendStop |

| vector< int * > | paramEpochValues |

| vector< string > | paramEpochNames |

| vector< double * > | paramDoubleValues |

| vector< string > | paramDoubleNames |

| vector< int * > | paramIntValues |

| vector< string > | paramIntNames |

| double | m_maxSwing |

| REAL * | m_crossValidationPrediction |

| REAL * | m_prediction |

| REAL * | m_predictionBest |

| REAL ** | m_predictionProbe |

| REAL * | m_singlePrediction |

| int * | m_labelPrediction |

| int * | m_wrongLabelCnt |

| REAL * | m_outOfBagEstimate |

| int * | m_outOfBagEstimateCnt |

| int | m_maxTuninigEpochs |

| int | m_minTuninigEpochs |

| bool | m_enableClipping |

| bool | m_enableTuneSwing |

| bool | m_minimzeProbe |

| bool | m_minimzeProbeClassificationError |

| bool | m_minimzeBlend |

| bool | m_minimzeBlendClassificationError |

| double | m_initMaxSwing |

| string | m_weightFile |

| string | m_fullPrediction |

Detailed Description

Base class for a standard algorithm. This is not a stand-alone class: other algorithms must be derived from it, since it unites the requirements common to all standard algorithms.

Definition at line 23 of file StandardAlgorithm.h.
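The derivation pattern described above can be sketched as follows. This is a minimal illustration, not the framework's code: `MiniStandardAlgorithm` and `MeanPredictor` are hypothetical names, the interface is reduced to three of the pure virtual functions, the signatures are simplified (no `crossRun` argument), and `REAL` is assumed to be a floating-point typedef.

```cpp
#include <cassert>
#include <cstddef>

typedef float REAL;  // assumption: REAL is a floating-point typedef in the framework

// Hypothetical, heavily reduced stand-in for the StandardAlgorithm interface.
class MiniStandardAlgorithm
{
public:
    virtual ~MiniStandardAlgorithm() {}
    virtual void modelInit() = 0;
    virtual void modelUpdate(const REAL* input, const REAL* target, std::size_t nSamples) = 0;
    virtual void predictAllOutputs(const REAL* rawInputs, REAL* outputs, std::size_t nSamples) = 0;
};

// Toy model: always predicts the mean of the training targets (one output per sample).
class MeanPredictor : public MiniStandardAlgorithm
{
public:
    MeanPredictor() : m_mean(0) {}

    void modelInit() { m_mean = 0; }

    // The inputs are ignored; only the targets determine the model.
    void modelUpdate(const REAL*, const REAL* target, std::size_t nSamples)
    {
        assert(nSamples > 0);
        double sum = 0.0;
        for (std::size_t i = 0; i < nSamples; i++)
            sum += target[i];
        m_mean = static_cast<REAL>(sum / nSamples);
    }

    void predictAllOutputs(const REAL*, REAL* outputs, std::size_t nSamples)
    {
        for (std::size_t i = 0; i < nSamples; i++)
            outputs[i] = m_mean;
    }

private:
    REAL m_mean;
};
```

A derived class only supplies the model-specific parts; in the real class, `train()` and `calcRMSEonProbe()` drive these hooks.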

Constructor & Destructor Documentation

| StandardAlgorithm::StandardAlgorithm | ( | ) |

Constructor

Definition at line 8 of file StandardAlgorithm.cpp.

    {
        cout<<"StandardAlgorithm"<<endl;
        // init member vars
        m_blendStop = 0;
        m_maxSwing = 0;
        m_crossValidationPrediction = 0;
        m_prediction = 0;
        m_predictionBest = 0;
        m_predictionProbe = 0;
        m_singlePrediction = 0;
        m_labelPrediction = 0;
        m_wrongLabelCnt = 0;
        m_maxTuninigEpochs = 0;
        m_minTuninigEpochs = 0;
        m_enableClipping = 0;
        m_enableTuneSwing = 0;
        m_minimzeProbe = 0;
        m_minimzeProbeClassificationError = 0;
        m_minimzeBlend = 0;
        m_minimzeBlendClassificationError = 0;
        m_initMaxSwing = 0;
        m_outOfBagEstimate = 0;
        m_outOfBagEstimateCnt = 0;

    }

| StandardAlgorithm::~StandardAlgorithm | ( | ) |

Destructor

Definition at line 38 of file StandardAlgorithm.cpp.

    {
        cout<<"descructor StandardAlgorithm"<<endl;

        if ( m_blendStop )
            delete m_blendStop;
        m_blendStop = 0;

        if ( m_prediction )
            delete[] m_prediction;
        m_prediction = 0;
        if ( m_predictionBest )
            delete[] m_predictionBest;
        m_predictionBest = 0;
        for ( int i=0;i<m_maxThreadsInCross;i++ )
        {
            if ( m_predictionProbe )
            {
                if ( m_predictionProbe[i] )
                    delete[] m_predictionProbe[i];
                m_predictionProbe[i] = 0;
            }
        }
        if ( m_predictionProbe )
            delete[] m_predictionProbe;
        m_predictionProbe = 0;
        if ( m_labelPrediction )
            delete[] m_labelPrediction;
        m_labelPrediction = 0;
        if ( m_singlePrediction )
            delete[] m_singlePrediction;
        m_singlePrediction = 0;

        if ( m_crossValidationPrediction )
            delete[] m_crossValidationPrediction;
        m_crossValidationPrediction = 0;
        if ( m_wrongLabelCnt )
            delete[] m_wrongLabelCnt;
        m_wrongLabelCnt = 0;
        if(m_outOfBagEstimate)
            delete[] m_outOfBagEstimate;
        m_outOfBagEstimate = 0;
        if(m_outOfBagEstimateCnt)
            delete[] m_outOfBagEstimateCnt;
        m_outOfBagEstimateCnt = 0;
    }

Member Function Documentation

| double StandardAlgorithm::calcRMSEonBlend | ( | ) | [virtual] |

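Calculate the RMSE of the ensemble (with a constant-1 prediction). If m_minimzeBlendClassificationError is set, the classification error of the blend is returned instead of the RMSE.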

Implements Algorithm.

Definition at line 523 of file StandardAlgorithm.cpp.

    {
        double rmse = calcRMSEonProbe();
        cout<<" [probe:"<<rmse<<"] ";
        double rmseBlend = m_blendStop->calcBlending();
        if ( m_minimzeBlendClassificationError )
            return m_blendStop->getClassificationError();
        return rmseBlend;
    }

| double StandardAlgorithm::calcRMSEonProbe | ( | ) | [virtual] |

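Calculate the RMSE on all probe sets with cross validation. Depending on the configuration, the returned value may instead be the classification error (m_minimzeProbeClassificationError), the MAE, or the AUC (m_errorFunction).

- Returns:

- RMSE on the cross-validation probe sets (= complete training set)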
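The two accumulated error measures (RMSE and MAE) can be sketched in isolation as below. This is a simplified illustration under stated assumptions: `ErrorMeasures` and `calcErrors` are hypothetical names, `REAL` is assumed to be `float`, and predictions and targets are assumed to be flat arrays of equal length (nSamples * nClass * nDomain values).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

typedef float REAL;  // assumption: REAL is a floating-point typedef in the framework

// Hypothetical result type holding both error measures.
struct ErrorMeasures
{
    double rmse;  // root mean squared error
    double mae;   // mean absolute error
};

// Accumulate squared and absolute errors over all values, then normalize.
ErrorMeasures calcErrors(const REAL* prediction, const REAL* target, std::size_t nValues)
{
    assert(nValues > 0);
    double sumSq = 0.0, sumAbs = 0.0;
    for (std::size_t i = 0; i < nValues; i++)
    {
        const double err = static_cast<double>(prediction[i]) - static_cast<double>(target[i]);
        sumSq += err * err;
        sumAbs += std::fabs(err);
    }
    ErrorMeasures e;
    e.rmse = std::sqrt(sumSq / static_cast<double>(nValues));
    e.mae = sumAbs / static_cast<double>(nValues);
    return e;
}
```

Accumulating in `double` while the data is stored as `REAL` mirrors the `double rmse` and `double mae` accumulators in the listing.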

Implements Algorithm.

Definition at line 233 of file StandardAlgorithm.cpp.

    {
        double rmse = 0.0, mae = 0.0;
        int nThreads = m_maxThreadsInCross;  // get #available threads
        for ( int d=0;d<m_nDomain;d++ )
            m_wrongLabelCnt[d] = 0;

        if(m_validationType == "ValidationSet")
        {
            // train the model on the train set
            modelUpdate ( m_trainOrig, m_trainTargetOrig, m_nTrain, 0 );

            // predict the validation set
            REAL* effect = new REAL[m_validSize * m_nClass * m_nDomain];
            for(uint i=0;i<m_validSize * m_nClass * m_nDomain;i++)
                effect[i] = 0.0;
            predictMultipleOutputs ( m_valid, effect, m_prediction, m_labelPrediction, m_validSize, 0 );
            delete[] effect;

            // calculate the error on the validation set
            for ( int i=0;i<m_validSize;i++)
            {
                // copy to blending vector and to internal prediction
                for ( int j=0;j<m_nClass*m_nDomain;j++ )
                {
                    REAL prediction = m_prediction[i*m_nClass*m_nDomain + j];
                    m_blendStop->m_newPrediction[j][i] = prediction;
                    m_prediction[i*m_nClass*m_nDomain + j] = prediction;

                    rmse += ( prediction - m_validTarget[i*m_nClass*m_nDomain + j] ) * ( prediction - m_validTarget[i*m_nClass*m_nDomain + j] );
                    mae += fabs ( prediction - m_validTarget[i*m_nClass*m_nDomain + j] );
                }

                // count wrong labeled examples
                if ( Framework::getDatasetType() )
                {
                    for ( int d=0;d<m_nDomain;d++ )
                        if ( m_labelPrediction[d+i*m_nDomain] != m_validLabel[d+i*m_nDomain] )
                            m_wrongLabelCnt[d]++;
                }
            }

            // print the classification error rate
            double classificationError = 1.0;
            if ( Framework::getDatasetType() )
            {
                int nWrong = 0;
                for ( int d=0;d<m_nDomain;d++ )
                {
                    nWrong += m_wrongLabelCnt[d];
                    //if(m_nDomain > 1)
                    //    cout<<"["<<(double)m_wrongLabelCnt[d]/(double)m_validSize<<"] ";
                }
                classificationError = ( double ) nWrong/ ( ( double ) m_validSize*m_nDomain );
                cout<<" [classErr:"<<100.0*classificationError<<"%] ";
            }
            if ( m_minimzeProbeClassificationError )
                return classificationError;

            rmse = sqrt ( rmse/ ( ( double ) m_validSize * ( double ) m_nClass * ( double ) m_nDomain ) );
            mae = mae/ ( ( double ) m_validSize * ( double ) m_nClass * ( double ) m_nDomain );

            if ( m_errorFunction=="MAE" )
                return mae;
            else if ( m_errorFunction=="AUC" && Framework::getDatasetType() )
            {
                cout<<"[rmse:"<<rmse<<"]"<<flush;

                // calc area under curve
                REAL* tmp = new REAL[m_validSize*m_nClass*m_nDomain];
                for ( int i=0;i<m_validSize;i++ )
                    for ( int j=0;j<m_nClass*m_nDomain;j++ )
                        tmp[i + j*m_validSize] = m_prediction[j + i*m_nClass*m_nDomain];
                REAL auc = getAUC ( tmp, m_validLabel, m_nClass, m_nDomain, m_validSize );
                delete[] tmp;
                return auc;
            }

            return rmse;
        }

        if(m_validationType == "Bagging")
        {
            for(int i=0;i<m_nTrain * m_nClass * m_nDomain;i++)
                m_outOfBagEstimate[i] = 0.0;
            for(int i=0;i<m_nTrain;i++)
                m_outOfBagEstimateCnt[i] = 0;
            for(int i=0;i<m_nTrain;i++)
                for(int j=0;j<m_nClass * m_nDomain;j++)
                    m_prediction[i*m_nClass*m_nDomain+j] = m_targetMean[j];  // some of the out-of-bag estimates are not predicted
        }

        for ( int i=0;i<m_nCross;i+=nThreads )  // all cross validation sets
        {
            // predict the probeset
            int* nSamples = new int[nThreads];
            int** labels = new int*[nThreads];
            for ( int j=0;j<nThreads;j++ )
            {
                nSamples[j] = m_probeSize[i+j];
                labels[j] = new int[nSamples[j]*m_nDomain];
            }

            if ( nThreads > 1 )
            {
                // parallel training of the cross-validation sets with OPENMP
                #pragma omp parallel for
                for ( int t=0;t<nThreads;t++ )
                {
                    cout<<"."<<flush;
                    if ( m_enableSaveMemory )
                        fillNCrossValidationSet ( i+t );
                    modelUpdate ( m_train[i+t], m_trainTargetResidual[i+t], m_trainSize[i+t], i+t );
                    predictMultipleOutputs ( m_probe[i+t], m_probeTargetEffect[i+t], m_predictionProbe[t], labels[t], nSamples[t], i+t );
                    if ( m_enableSaveMemory )
                        freeNCrossValidationSet ( i+t );
                }
            }
            else
            {
                cout<<"."<<flush;
                if ( m_enableSaveMemory )
                    fillNCrossValidationSet ( i );
                modelUpdate ( m_train[i], m_trainTargetResidual[i], m_trainSize[i], i );
                predictMultipleOutputs ( m_probe[i], m_probeTargetEffect[i], m_predictionProbe[0], labels[0], nSamples[0], i );
                if ( m_enableSaveMemory )
                    freeNCrossValidationSet ( i );
            }

            // merge the probe predictions
            if(m_validationType == "Bagging")
            {
                for ( int thread=0;thread<nThreads;thread++ )
                {
                    for ( int j=0;j<nSamples[thread];j++ )
                    {
                        int idx = m_probeIndex[i+thread][j];
                        for(int k=0;k<m_nClass * m_nDomain;k++)
                        {
                            REAL prediction = m_predictionProbe[thread][j*m_nClass*m_nDomain + k];
                            m_outOfBagEstimate[idx*m_nClass*m_nDomain + k] += prediction;
                        }
                        m_outOfBagEstimateCnt[idx]++;
                    }
                }
            }
            else
            {
                for ( int thread=0;thread<nThreads;thread++ )
                {
                    for ( int j=0;j<nSamples[thread];j++ )  // for all samples in this set
                    {
                        int idx = m_probeIndex[i+thread][j];

                        // copy to blending vector and to internal prediction
                        for ( int k=0;k<m_nClass*m_nDomain;k++ )
                        {
                            REAL prediction = m_predictionProbe[thread][j*m_nClass*m_nDomain + k];
                            m_blendStop->m_newPrediction[k][idx] = prediction;
                            m_prediction[idx*m_nClass*m_nDomain + k] = prediction;
                            rmse += ( prediction - m_probeTarget[i+thread][m_nClass*m_nDomain*j + k] ) * ( prediction - m_probeTarget[i+thread][m_nClass*m_nDomain*j + k] );
                            mae += fabs ( prediction - m_probeTarget[i+thread][m_nClass*m_nDomain*j + k] );
                        }

                        // count wrong labeled examples
                        if ( Framework::getDatasetType() )
                        {
                            for ( int d=0;d<m_nDomain;d++ )
                                if ( labels[thread][d+j*m_nDomain] != m_probeLabel[i+thread][d+j*m_nDomain] )
                                    m_wrongLabelCnt[d]++;
                        }
                    }
                }
            }

            // free memory
            for ( int j=0;j<nThreads;j++ )
            {
                if ( labels[j] )
                    delete[] labels[j];
                labels[j] = 0;
            }
            if ( nSamples )
                delete[] nSamples;
            nSamples = 0;
            if ( labels )
                delete[] labels;
            labels = 0;

        }

        if(m_validationType == "Bagging")
        {
            for(int i=0;i<m_nTrain;i++)
            {
                int c = m_outOfBagEstimateCnt[i];
                for(int j=0;j<m_nClass*m_nDomain;j++)
                    m_prediction[i*m_nClass*m_nDomain+j] = (c==0 ? m_targetMean[j] : (m_outOfBagEstimate[i*m_nClass*m_nDomain+j] / (REAL)c));

                for(int j=0;j<m_nClass*m_nDomain;j++)
                {
                    REAL prediction = m_prediction[i*m_nClass*m_nDomain+j];
                    m_blendStop->m_newPrediction[j][i] = prediction;
                    rmse += ( prediction - m_trainTargetOrig[m_nClass*m_nDomain*i + j] ) * ( prediction - m_trainTargetOrig[m_nClass*m_nDomain*i + j] );
                    mae += fabs ( prediction - m_trainTargetOrig[m_nClass*m_nDomain*i + j] );
                }

                // count wrong labeled examples
                if ( Framework::getDatasetType() )
                {
                    for(int j=0;j<m_nDomain;j++)
                    {
                        int indBest = -1;
                        REAL max = -1e10;
                        for(int k=0;k<m_nClass;k++)
                        {
                            if(max < m_prediction[i*m_nClass + j*m_nClass + k])
                            {
                                max = m_prediction[i*m_nClass + j*m_nClass + k];
                                indBest = k;
                            }
                        }
                        if(indBest != m_trainLabelOrig[i+j*m_nClass])
                            m_wrongLabelCnt[j]++;
                    }
                }
            }
        }

        // print the classification error rate
        double classificationError = 1.0;
        if ( Framework::getDatasetType() )
        {
            int nWrong = 0;
            for ( int d=0;d<m_nDomain;d++ )
            {
                nWrong += m_wrongLabelCnt[d];
                //if(m_nDomain > 1)
                //    cout<<"["<<(double)m_wrongLabelCnt[d]/(double)m_nTrain<<"] ";
            }
            classificationError = ( double ) nWrong/ ( ( double ) m_nTrain*m_nDomain );
            cout<<" [classErr:"<<100.0*classificationError<<"%] ";
        }
        if ( m_minimzeProbeClassificationError )
            return classificationError;

        rmse = sqrt ( rmse/ ( ( double ) m_nTrain * ( double ) m_nClass * ( double ) m_nDomain ) );
        mae = mae/ ( ( double ) m_nTrain * ( double ) m_nClass * ( double ) m_nDomain );

        if ( m_errorFunction=="MAE" )
            return mae;
        else if ( m_errorFunction=="AUC" && Framework::getDatasetType() )
        {
            cout<<"[rmse:"<<rmse<<"]"<<flush;

            // calc area under curve
            REAL* tmp = new REAL[m_nTrain*m_nClass*m_nDomain];
            for ( int i=0;i<m_nTrain;i++ )
                for ( int j=0;j<m_nClass*m_nDomain;j++ )
                    tmp[i + j*m_nTrain] = m_prediction[j + i*m_nClass*m_nDomain];
            REAL auc = getAUC ( tmp, m_trainLabelOrig, m_nClass, m_nDomain, m_nTrain );
            delete[] tmp;
            return auc;
        }

        return rmse;
    }
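The out-of-bag merging step in the "Bagging" branch can be sketched on its own as below. This is a reduced illustration with assumptions: `averageOutOfBag` is a hypothetical helper, `REAL` is assumed to be `float`, and `fallback` plays the role of the per-output target mean that the listing uses for samples that were never out of bag.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef float REAL;  // assumption: REAL is a floating-point typedef in the framework

// Turn summed out-of-bag predictions into per-sample averages.
// sums:     nSamples * nOut accumulated predictions (sample-major layout)
// counts:   how often each sample was out of bag
// fallback: nOut default values used when a sample was never out of bag
std::vector<REAL> averageOutOfBag(const std::vector<REAL>& sums,
                                  const std::vector<int>& counts,
                                  const std::vector<REAL>& fallback)
{
    const std::size_t nOut = fallback.size();
    assert(nOut > 0 && sums.size() == counts.size() * nOut);

    std::vector<REAL> result(sums.size());
    for (std::size_t i = 0; i < counts.size(); i++)
        for (std::size_t j = 0; j < nOut; j++)
            result[i * nOut + j] = (counts[i] == 0)
                ? fallback[j]
                : sums[i * nOut + j] / static_cast<REAL>(counts[i]);
    return result;
}
```

The fallback matters because a bootstrap sample can cover a training sample in every round, leaving it with no out-of-bag prediction at all.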

| void StandardAlgorithm::calculateFullPrediction | ( | ) | [protected] |

Carry out the steps to calculate the full prediction. This means writing the k-fold cross-validation prediction for the training set. Furthermore, if the validation type is "Retraining", the model is retrained here with all available data and the best meta-parameters.

Definition at line 641 of file StandardAlgorithm.cpp.

    {
        double rmse = 0.0;
        cout<<endl<<"Calculate FullPrediction (write the prediction of the trainingset with cross validation)"<<endl<<endl;

        // re-calculate the blending weights (necessary for minimize only probe)
        if ( m_minimzeProbe )
        {
            double rmseBlend = m_blendStop->calcBlending();
            m_blendStop->saveTmpBestWeights();
            cout<<"rmseBlend:"<<rmseBlend<<endl;
        }

        // save linear blending weights
        m_blendStop->saveBlendingWeights ( m_datasetPath + "/" + m_tempPath, true );
        cout<<endl;

        memcpy ( m_prediction, m_predictionBest, sizeof ( REAL ) * (m_validationType == "ValidationSet"?m_validSize:m_nTrain) * m_nClass * m_nDomain );
        writeFullPrediction(m_validationType == "ValidationSet"?m_validSize:m_nTrain);

        m_inRetraining = true;

        if ( m_validationType == "Retraining" )
        {
            cout<<"Validation type: Retraining"<<endl;
            cout<<"Update model on whole training set"<<endl<<endl;
            time_t retrainTime = time ( 0 );

            // retrain the model with whole trainingset (disable cross validation)
            if ( m_enableSaveMemory )
                fillNCrossValidationSet ( m_nCross );

            // tmp variables, used for bagging
            REAL* trainOrig = 0;
            REAL* targetOrig = 0;
            REAL* targetEffectOrig = 0;
            REAL* targetResidualOrig = 0;
            int* labelOrig = 0;

            if ( m_enableBagging )
            {
                cout<<"Save orig data, create boostrap sample for retraining"<<endl;
                trainOrig = m_train[m_nCross];
                targetOrig = m_trainTarget[m_nCross];
                targetEffectOrig = m_trainTargetEffect[m_nCross];
                targetResidualOrig = m_trainTargetResidual[m_nCross];
                labelOrig = m_trainLabel[m_nCross];
                doBootstrapSampling ( 0, m_train[m_nCross], m_trainTarget[m_nCross], m_trainTargetEffect[m_nCross], m_trainTargetResidual[m_nCross], m_trainLabel[m_nCross] );  // bootstrap sample
                //doBootstrapSampling(0, m_train[m_nCross], m_trainTarget[m_nCross], m_trainTargetEffect[m_nCross], m_trainTargetResidual[m_nCross], m_trainLabel[m_nCross], m_nTrain * 0.8);
            }

            modelUpdate ( m_train[m_nCross], m_trainTargetResidual[m_nCross], m_nTrain, m_nCross );
            saveWeights ( m_nCross );

            // calc retrain rmse
            cout<<"Calculate retrain RMSE (on trainset)"<<endl;
            rmse = 0.0;
            memset ( m_prediction, 0, sizeof ( REAL ) *m_nTrain*m_nClass*m_nDomain );
            predictMultipleOutputs ( m_train[m_nCross], m_trainTargetEffect[m_nCross], m_prediction, m_labelPrediction, m_nTrain, m_nCross );
            for ( int i=0;i<m_nTrain*m_nClass*m_nDomain;i++ )
                rmse += ( m_prediction[i] - m_trainTarget[m_nCross][i] ) * ( m_prediction[i] - m_trainTarget[m_nCross][i] );
            rmse = sqrt ( rmse/ ( double ) ( m_nTrain * m_nClass * m_nDomain ) );
            cout<<"Train of this algorithm (RMSE after retraining): "<<rmse<<endl;

            if ( m_enableBagging )
            {
                cout<<"Restore orig data"<<endl;
                if ( m_train[m_nCross] )
                    delete[] m_train[m_nCross];
                if ( m_trainTarget[m_nCross] )
                    delete[] m_trainTarget[m_nCross];
                if ( m_trainTargetEffect[m_nCross] )
                    delete[] m_trainTargetEffect[m_nCross];
                if ( m_trainTargetResidual[m_nCross] )
                    delete[] m_trainTargetResidual[m_nCross];
                if ( m_trainLabel[m_nCross] )
                    delete[] m_trainLabel[m_nCross];
                m_train[m_nCross] = trainOrig;
                m_trainTarget[m_nCross] = targetOrig;
                m_trainTargetEffect[m_nCross] = targetEffectOrig;
                m_trainTargetResidual[m_nCross] = targetResidualOrig;
                m_trainLabel[m_nCross] = labelOrig;
            }

            if ( m_enableSaveMemory )
                freeNCrossValidationSet ( m_nCross );

            cout<<"Total retrain time:"<<time ( 0 )-retrainTime<<"[s]"<<endl;
        }
        /*else if(m_validationType == "CrossFoldMean" || m_validationType == "Bagging")
        {
            cout<<"Validation type: "<<m_validationType<<endl;
            for(int i=0;i<m_nCross;i++)
                saveWeights(i);
        }
        else
            assert(false);
        */

        // print summary
        cout<<endl<<"==========================================================================="<<endl;
        BlendStopping bb ( this, m_fullPrediction );
        bb.setRegularization ( m_blendingRegularization );
        if ( m_datasetName=="NETFLIX" && Framework::getAdditionalStartupParameter() >= 0 )
        {
            cout<<"Dataset:NETFLIX, slot:"<<Framework::getAdditionalStartupParameter() <<" ";
            char buf[512];
            sprintf ( buf,"p%d",Framework::getAdditionalStartupParameter() );
            string pName = string ( NETFLIX_SLOTDATA_ROOT_DIR ) + buf + "/trainPrediction.data";
            cout<<"pName:"<<pName<<endl;
            rmse = bb.calcBlending ( ( char* ) pName.c_str() );
        }
        else
            rmse = bb.calcBlending ( ( char* ) ( m_datasetPath + "/" + m_tempPath + "/trainPrediction.data" ).c_str() );
        bb.saveBlendingWeights ( m_datasetPath + "/" + m_tempPath );
        cout<<endl<<"BLEND RMSE OF ACTUAL FULLPREDICTION PATH:"<<rmse<<endl;
        cout<<"==========================================================================="<<endl<<endl;

    }
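When bagging is enabled, the retraining step works on a bootstrap sample of the training set. The body of `doBootstrapSampling` is not shown on this page, so the following is only a generic sketch of bootstrap sampling (drawing n indices with replacement), not the framework's implementation; `bootstrapIndices` and its seed parameter are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Draw nSamples indices uniformly with replacement from [0, nSamples).
// Some indices will typically repeat and others will be left out.
std::vector<std::size_t> bootstrapIndices(std::size_t nSamples, unsigned seed)
{
    assert(nSamples > 0);
    std::mt19937 rng(seed);  // fixed seed keeps the sample reproducible
    std::uniform_int_distribution<std::size_t> pick(0, nSamples - 1);

    std::vector<std::size_t> idx(nSamples);
    for (std::size_t i = 0; i < nSamples; i++)
        idx[i] = pick(rng);
    return idx;
}
```

The samples left out of such a draw are the out-of-bag samples that the "Bagging" validation type evaluates on.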

| void StandardAlgorithm::init | ( | ) | [protected] |

Read the values from the description (dsc) file and allocate the prediction buffers.

Definition at line 184 of file StandardAlgorithm.cpp.

    {
        cout<<"Init standard algorithm"<<endl;

        // read standard and specific values
        readMaps();
        readSpecificMaps();

        if ( m_blendStop == 0 )
        {
            // init blendStop data
            m_blendStop = new BlendStopping ( this, "tune" );
            m_blendStop->setRegularization ( m_blendingRegularization );

            m_wrongLabelCnt = new int[m_nDomain];
            m_singlePrediction = new REAL[m_nClass * m_nDomain];

            if(m_validationType == "ValidationSet")
            {
                m_prediction = new REAL[m_validSize * m_nClass * m_nDomain];
                m_predictionBest = new REAL[m_validSize * m_nClass * m_nDomain];
                m_labelPrediction = new int[m_validSize * m_nDomain];
                return;
            }

            // prediction on trainset for all classes
            m_prediction = new REAL[m_nTrain * m_nClass * m_nDomain];
            m_predictionBest = new REAL[m_nTrain * m_nClass * m_nDomain];
            m_predictionProbe = new REAL*[m_maxThreadsInCross];
            for ( int i=0;i<m_maxThreadsInCross;i++ )
                m_predictionProbe[i] = new REAL[m_nTrain * m_nClass * m_nDomain];
            m_labelPrediction = new int[m_nTrain * m_nDomain];

            // cross validation training
            m_crossValidationPrediction = new REAL[m_nTrain*m_nClass*m_nDomain];

            if(m_validationType == "Bagging")
            {
                m_outOfBagEstimate = new REAL[m_nTrain * m_nClass * m_nDomain];
                m_outOfBagEstimateCnt = new int[m_nTrain];
            }
        }
    }
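The buffers allocated here hold nSamples * nClass * nDomain values in one flat array. The indexing convention used throughout the listings on this page (for example `output[d*m_nClass + i*m_nDomain*m_nClass + j]`) can be sketched with two small helpers; `outputIndex` and `predictionBufferSize` are hypothetical names introduced only for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Flat index of (sample, domain, class) in a sample-major prediction buffer.
std::size_t outputIndex(std::size_t sample, std::size_t domain, std::size_t cls,
                        std::size_t nDomain, std::size_t nClass)
{
    assert(domain < nDomain && cls < nClass);
    return sample * nDomain * nClass + domain * nClass + cls;
}

// Number of values needed to hold the predictions for nSamples samples.
std::size_t predictionBufferSize(std::size_t nSamples, std::size_t nClass, std::size_t nDomain)
{
    return nSamples * nClass * nDomain;
}
```

With this layout, all outputs of one sample are contiguous, which is why the error loops can treat `nClass*nDomain` as a single inner dimension.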

| void StandardAlgorithm::predictMultipleOutputs | ( | REAL * rawInput, REAL * effect, REAL * output, int * labels, int nSamples, int crossRun | ) | [virtual] |

Predict multiple samples, based on an effect (the output of another Algorithm)

- Parameters:

- rawInput: Raw input vectors (features)
- effect: Result from a preprocessor (other Algorithm output)
- output: Outputs are stored here
- labels: Predicted labels are stored here
- nSamples: Number of samples to predict

Implements Algorithm.

Definition at line 554 of file StandardAlgorithm.cpp.

    {
        // model prediction
        predictAllOutputs ( rawInput, output, nSamples, crossRun );

        if ( m_enableTuneSwing )  // clip the output (clip swing)
        {
            IPPS_THRESHOLD ( output, output, nSamples*m_nClass*m_nDomain, -m_maxSwing, ippCmpLess );  // clip negative
            IPPS_THRESHOLD ( output, output, nSamples*m_nClass*m_nDomain, +m_maxSwing, ippCmpGreater );  // clip positive
        }

        // add the output from the preprocessor (=effect)
        V_ADD ( nSamples*m_nClass*m_nDomain, output, effect, output );

        // calc output labels (for classification dataset)
        if ( Framework::getDatasetType() )
        {
            // in all domains
            for ( int d=0;d<m_nDomain;d++ )
            {
                // calc output labels
                for ( int i=0;i<nSamples;i++ )
                {
                    // find max. output value
                    int indMax = -1;
                    REAL max = -1e10;
                    for ( int j=0;j<m_nClass;j++ )
                    {
                        if ( max < output[d*m_nClass + i*m_nDomain*m_nClass + j] )
                        {
                            max = output[d*m_nClass + i*m_nDomain*m_nClass + j];
                            indMax = j;
                        }
                    }
                    labels[d+i*m_nDomain] = indMax;
                }
            }
        }

        // clip final outputs
        if ( m_enableClipping )
        {
            IPPS_THRESHOLD ( output, output, nSamples*m_nClass*m_nDomain, m_negativeTarget, ippCmpLess );  // clip negative
            IPPS_THRESHOLD ( output, output, nSamples*m_nClass*m_nDomain, m_positiveTarget, ippCmpGreater );  // clip positive
        }

        // add small noise
        if ( m_addOutputNoise > 0.0 )
            for ( int i=0;i<nSamples*m_nClass*m_nDomain;i++ )
                output[i] += NumericalTools::getNormRandomNumber ( 0.0, m_addOutputNoise );

    }
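The first three stages of this function (swing clipping, adding the effect, picking the label with the largest output) can be sketched in portable C++ without the IPP and vector macros. This is a simplified illustration: `clipAddAndLabel` is a hypothetical name, `REAL` is assumed to be `float`, the swing clipping is applied unconditionally (the listing applies it only when m_enableTuneSwing is set), and the final clipping and noise steps are omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef float REAL;  // assumption: REAL is a floating-point typedef in the framework

// Clip raw model outputs to [-maxSwing, +maxSwing], add the preprocessor effect,
// then pick, per sample and domain, the class with the largest output.
void clipAddAndLabel(std::vector<REAL>& output, const std::vector<REAL>& effect,
                     std::vector<int>& labels, std::size_t nSamples,
                     std::size_t nClass, std::size_t nDomain, REAL maxSwing)
{
    const std::size_t n = nSamples * nClass * nDomain;
    assert(nClass > 0 && output.size() == n && effect.size() == n);

    for (std::size_t i = 0; i < n; i++)
    {
        REAL v = output[i];
        if (v < -maxSwing) v = -maxSwing;  // clip negative
        if (v > maxSwing) v = maxSwing;    // clip positive
        output[i] = v + effect[i];         // effect is added after clipping
    }

    labels.assign(nSamples * nDomain, 0);
    for (std::size_t i = 0; i < nSamples; i++)
        for (std::size_t d = 0; d < nDomain; d++)
        {
            const std::size_t base = i * nDomain * nClass + d * nClass;
            std::size_t best = 0;
            for (std::size_t j = 1; j < nClass; j++)
                if (output[base + j] > output[base + best])  // first maximum wins on ties
                    best = j;
            labels[d + i * nDomain] = static_cast<int>(best);
        }
}
```

Because the effect is added after the swing clipping, the clip bounds how far the model can move the prediction away from the preprocessor output, not the final value itself.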

| void StandardAlgorithm::readMaps | ( | ) | [protected] |

Read the standard values from the description file

Definition at line 165 of file StandardAlgorithm.cpp.

    {
        cout<<"Read dsc maps (standard values)"<<endl;
        m_minTuninigEpochs = m_intMap["minTuninigEpochs"];
        m_maxTuninigEpochs = m_intMap["maxTuninigEpochs"];
        m_initMaxSwing = m_doubleMap["initMaxSwing"];
        m_enableClipping = m_boolMap["enableClipping"];
        m_enableTuneSwing = m_boolMap["enableTuneSwing"];
        m_minimzeProbe = m_boolMap["minimzeProbe"];
        m_minimzeProbeClassificationError = m_boolMap["minimzeProbeClassificationError"];
        m_minimzeBlend = m_boolMap["minimzeBlend"];
        m_minimzeBlendClassificationError = m_boolMap["minimzeBlendClassificationError"];
        m_weightFile = m_stringMap["weightFile"];
        m_fullPrediction = m_stringMap["fullPrediction"];
    }
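The lookups above use `operator[]` with exact key strings, including the spellings "minimzeProbe" and "minTuninigEpochs". The types of `m_boolMap` and the other maps are not shown on this page; assuming they behave like `std::map`, a missing or differently spelled key would silently yield a default value rather than an error. The sketch below illustrates that behaviour with a hypothetical helper, `readFlagOrDefault`, which looks a key up without inserting it.

```cpp
#include <cassert>
#include <map>
#include <string>

// Look up a flag without modifying the map; return defaultValue if the key is absent.
bool readFlagOrDefault(const std::map<std::string, bool>& flags,
                       const std::string& key, bool defaultValue)
{
    assert(!key.empty());
    std::map<std::string, bool>::const_iterator it = flags.find(key);
    return it == flags.end() ? defaultValue : it->second;
}
```

By contrast, `flags["someKey"]` on a `std::map` inserts a value-initialized entry (`false` for `bool`) when the key is absent, so a description file that spells a key differently from the code would go unnoticed under that assumption.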

| void StandardAlgorithm::saveBestPrediction | ( | ) | [virtual] |

Save the best training prediction

Implements AutomaticParameterTuner.

Definition at line 505 of file StandardAlgorithm.cpp.

    {
        cout<<"[saveBest]";
        memcpy ( m_predictionBest, m_prediction, sizeof ( REAL ) * (m_validationType == "ValidationSet" ? m_validSize : m_nTrain) * m_nClass * m_nDomain );
        m_blendStop->saveTmpBestWeights();
        if(m_validationType == "ValidationSet")
            saveWeights(0);
        else
        {
            if ( m_validationType == "CrossFoldMean" || m_validationType == "Bagging" )
                for ( int i=0;i<m_nCross;i++ )
                    saveWeights ( i );
        }
    }

| void StandardAlgorithm::setPredictionMode | ( | int | cross | ) | [virtual] |

Set the prediction mode, make the model ready to predict unknown test samples

Implements Algorithm.

Definition at line 537 of file StandardAlgorithm.cpp.

    {
        cout<<"Set algorithm in prediction mode"<<endl;
        readMaps();
        readSpecificMaps();
        loadWeights ( cross );
    }

| double StandardAlgorithm::train | ( | ) | [virtual] |

Standard training

- Returns:

- The lowest cross-fold validation error

Implements Algorithm.

Definition at line 90 of file StandardAlgorithm.cpp.

    {
        cout<<"Start train StandardAlgorithm"<<endl;

        init();

        modelInit();

        double rmse = m_blendStop->calcBlending();
        cout<<endl<<"ERR Blend:"<<rmse<<endl;

        cout<<endl<<"============================ START TRAIN (param tuning) ============================="<<endl<<endl;
        cout<<"Parameters to tune:"<<endl;

        // automatically tune parameters
        m_maxSwing = m_initMaxSwing;
        for ( int i=0;i<paramEpochValues.size();i++ )
        {
            addEpochParameter ( paramEpochValues[i], paramEpochNames[i] );
            cout<<"[EPOCH] name:"<<paramEpochNames[i]<<" initValue:"<<*paramEpochValues[i]<<endl;
        }
        for ( int i=0;i<paramDoubleValues.size();i++ )
        {
            addDoubleParameter ( paramDoubleValues[i], paramDoubleNames[i] );
            cout<<"[REAL] name:"<<paramDoubleNames[i]<<" initValue:"<<*paramDoubleValues[i]<<endl;
        }
        for ( int i=0;i<paramIntValues.size();i++ )
        {
            addIntegerParameter ( paramIntValues[i], paramIntNames[i] );
            cout<<"[INT] name:"<<paramIntNames[i]<<" initValue:"<<*paramIntValues[i]<<endl;
        }
        if ( m_enableTuneSwing )
        {
            addDoubleParameter ( &m_maxSwing, "swing" );
            cout<<"[REAL] name:"<<"swing"<<" initValue:"<<m_maxSwing<<endl;
        }

        // when in multiple optimization loop
        if ( m_loadWeightsBeforeTraining )
            loadMetaWeights ( m_nCross );

        // start the structured searcher
        cout<<"(min|max. epochs: "<<m_minTuninigEpochs<<"|"<<m_maxTuninigEpochs<<")"<<endl;
        expSearcher ( m_minTuninigEpochs, m_maxTuninigEpochs, 3, 1, 0.8, m_minimzeProbe, m_minimzeBlend );

        // remove the parameters from the searchers
        for ( int i=0;i<paramEpochValues.size();i++ )
            removeEpochParameter ( paramEpochNames[i] );
        for ( int i=0;i<paramDoubleValues.size();i++ )
            removeDoubleParameter ( paramDoubleNames[i] );
        for ( int i=0;i<paramIntValues.size();i++ )
            removeIntegerParameter ( paramIntNames[i] );
        if ( m_enableTuneSwing )
            removeDoubleParameter ( "swing" );

        paramEpochValues.clear();
        paramEpochNames.clear();
        paramDoubleValues.clear();
        paramDoubleNames.clear();
        paramIntValues.clear();
        paramIntNames.clear();


        cout<<endl<<"============================ END auto-optimize ============================="<<endl<<endl;

        // calculate all train targets with cross validation
        calculateFullPrediction();

        return expSearchGetLowestError();
    }
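The tuning loop registers parameters by pointer and name, so the searcher can change a member such as `m_maxSwing` in place. The searcher itself (`expSearcher`, `addDoubleParameter`) is not shown on this page; the sketch below only illustrates the pointer-plus-name registration pattern with a hypothetical `MiniTuner` class and a made-up `scaleAll` step standing in for the search.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for the parameter tuner: it stores pointers to the
// caller's variables and modifies them in place.
class MiniTuner
{
public:
    void addDoubleParameter(double* value, const std::string& name)
    {
        assert(value != 0);
        m_values.push_back(value);
        m_names.push_back(name);
    }

    void removeDoubleParameter(const std::string& name)
    {
        for (std::size_t i = 0; i < m_names.size(); i++)
            if (m_names[i] == name)
            {
                m_names.erase(m_names.begin() + i);
                m_values.erase(m_values.begin() + i);
                return;
            }
    }

    // Made-up "search step": scale every registered parameter.
    void scaleAll(double factor)
    {
        for (std::size_t i = 0; i < m_values.size(); i++)
            *m_values[i] *= factor;
    }

    std::size_t size() const { return m_values.size(); }

private:
    std::vector<double*> m_values;
    std::vector<std::string> m_names;
};
```

Registering pointers means the tuned variables must outlive the registration, which is consistent with `train()` removing every parameter again once the search has finished.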

| void StandardAlgorithm::writeFullPrediction | ( | int | nSamples | ) | [protected] |

Write the training prediction (with n-fold cross validation)

Definition at line 611 of file StandardAlgorithm.cpp.

    {
        // calc train RMSE
        double rmse = 0.0, err;
        for ( int i=0;i<nSamples;i++ )
        {
            for ( int j=0;j<m_nClass*m_nDomain;j++ )
            {
                err = m_prediction[j+i*m_nClass*m_nDomain] - m_trainTargetOrig[j+i*m_nClass*m_nDomain];
                rmse += err*err;
            }
        }

        // write file
        string name = m_datasetPath + "/" + m_fullPredPath + "/" + m_fullPrediction;
        cout<<"Write full prediction: "<<name<<" (RMSE:"<<sqrt ( rmse/ ( double ) ( nSamples*m_nClass*m_nDomain ) ) <<")";
        fstream f;
        f.open ( name.c_str(),ios::out );
        f.write ( ( char* ) m_prediction, sizeof ( REAL ) *nSamples*m_nClass*m_nDomain );
        f.close();
        cout<<endl;
    }
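The prediction file is a raw dump of the `REAL` array with no header. A round trip of such a file can be sketched as follows; `writeRawPrediction` and `readRawPrediction` are hypothetical helpers, `REAL` is assumed to be `float`, and the reader must already know the number of values because the file does not store it.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

typedef float REAL;  // assumption: REAL is a floating-point typedef in the framework

// Write the values as raw bytes; returns false if the file could not be written.
bool writeRawPrediction(const std::string& fileName, const std::vector<REAL>& prediction)
{
    assert(!fileName.empty());
    std::ofstream f(fileName.c_str(), std::ios::out | std::ios::binary);
    if (!f)
        return false;
    f.write(reinterpret_cast<const char*>(prediction.data()),
            static_cast<std::streamsize>(prediction.size() * sizeof(REAL)));
    return f.good();
}

// Read nValues raw values back; returns an empty vector on failure.
std::vector<REAL> readRawPrediction(const std::string& fileName, std::size_t nValues)
{
    std::vector<REAL> values(nValues);
    std::ifstream f(fileName.c_str(), std::ios::in | std::ios::binary);
    if (!f)
        return std::vector<REAL>();
    f.read(reinterpret_cast<char*>(values.data()),
           static_cast<std::streamsize>(nValues * sizeof(REAL)));
    if (!f)
        return std::vector<REAL>();
    return values;
}
```

The sketch opens the stream with `std::ios::binary`, whereas the listing uses `ios::out` only. On platforms that translate line endings in text mode, the binary flag avoids altering bytes that happen to match a newline.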

The documentation for this class was generated from the following files:

- StandardAlgorithm.h
- StandardAlgorithm.cpp

1.5.8