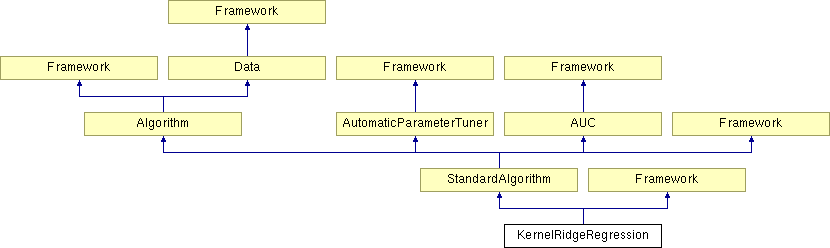

KernelRidgeRegression Class Reference

#include <KernelRidgeRegression.h>

Public Member Functions

| Type | Member |
| --- | --- |
| | KernelRidgeRegression () |
| | ~KernelRidgeRegression () |
| virtual void | modelInit () |
| virtual void | modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) |
| virtual void | predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) |
| virtual void | readSpecificMaps () |
| virtual void | saveWeights (int cross) |
| virtual void | loadWeights (int cross) |
| virtual void | loadMetaWeights (int cross) |

Static Public Member Functions

| Type | Member |
| --- | --- |
| static string | templateGenerator (int id, string preEffect, int nameID, bool blendStop) |

Private Attributes

| Type | Name |
| --- | --- |
| REAL ** | m_x |
| REAL * | m_trainMatrix |
| double | m_reg |
| double | m_polyScale |
| double | m_polyBiasPos |
| double | m_polyBiasNeg |
| double | m_polyPower |
| double | m_gaussSigma |
| double | m_tanhScale |
| double | m_tanhBiasPos |
| double | m_tanhBiasNeg |
| string | m_kernelType |

Detailed Description

Kernel ridge regression. Regression in a high-dimensional feature space, defined by the kernel. Tunable parameters are the regularization constant and some kernel constants.

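Supported kernels (the kernelType values handled in the listings on this page):

- linear ("Linear")
- polynomial ("Poly")
- Gaussian ("Gauss")
- hyperbolic tangent ("Tanh")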
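As read off the modelUpdate and predictAllOutputs listings on this page, the model can be summarized as follows. The symbols are shorthand introduced here, not names from the source: $\lambda$ stands for m_reg, $n$ for nSamples, $s$ for the scale of the selected kernel, $b = b^{+} - b^{-}$ for its bias (the BiasPos value minus the BiasNeg value), $p$ for m_polyPower and $\sigma$ for m_gaussSigma.

$$
\begin{aligned}
k_{\mathrm{Linear}}(x, y) &= x^{\top} y \\
k_{\mathrm{Poly}}(x, y) &= \operatorname{sign}(u)\,|u|^{p}, \qquad u = s\, x^{\top} y + b \\
k_{\mathrm{Gauss}}(x, y) &= \exp\!\left(-\frac{\lVert x - y \rVert^{2}}{\sigma^{2}}\right) \\
k_{\mathrm{Tanh}}(x, y) &= \tanh\!\left(s\, x^{\top} y + b\right)
\end{aligned}
$$

Training builds the Gram matrix $K_{ij} = k(x_i, x_j)$ over the $n$ training rows and solves, by Cholesky factorization, for the weight matrix $A$ (one column per output):

$$
(K + n \lambda I)\, A = Y
$$

A prediction for a new input $x$ is then the kernel-weighted sum over the training rows, where $A_i$ is the $i$-th row of $A$:

$$
\hat{y}(x) = \sum_{i=1}^{n} k(x, x_i)\, A_i
$$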

Definition at line 19 of file KernelRidgeRegression.h.

Constructor & Destructor Documentation

KernelRidgeRegression::KernelRidgeRegression ()

Constructor

Definition at line 8 of file KernelRidgeRegression.cpp.

    {
        cout<<"KernelRidgeRegression"<<endl;
        // init member vars
        m_x = 0;
        m_reg = 0;
        m_trainMatrix = 0;
    }

KernelRidgeRegression::~KernelRidgeRegression ()

Destructor

Definition at line 20 of file KernelRidgeRegression.cpp.

    {
        cout<<"descructor KernelRidgeRegression"<<endl;
        for ( uint i=0;i<m_nCross+1;i++ )
        {
            if ( m_x )
            {
                if ( m_x[i] )
                    delete[] m_x[i];
                m_x[i] = 0;
            }
        }
        if ( m_x )
            delete[] m_x;
        m_x = 0;

        if ( m_trainMatrix )
            delete[] m_trainMatrix;
        m_trainMatrix = 0;
    }

Member Function Documentation

void KernelRidgeRegression::loadWeights (int cross) [virtual]

Load the weights and all other parameters, and make the model ready to predict.

Implements StandardAlgorithm.

Definition at line 446 of file KernelRidgeRegression.cpp.

    {
        char buf[1024];
        sprintf ( buf,"%02d",cross );
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf;
        cout<<"Load:"<<name<<endl;
        fstream f ( name.c_str(), ios::in );
        if ( f.is_open() == false )
            assert ( false );
        f.read ( ( char* ) &m_nTrain, sizeof ( int ) );
        f.read ( ( char* ) &m_nFeatures, sizeof ( int ) );
        f.read ( ( char* ) &m_nClass, sizeof ( int ) );
        f.read ( ( char* ) &m_nDomain, sizeof ( int ) );

        m_x = new REAL*[m_nCross+1];
        for ( int i=0;i<m_nCross+1;i++ )
            m_x[i] = 0;
        m_x[cross] = new REAL[m_nTrain * m_nClass * m_nDomain];

        m_trainMatrix = new REAL[m_nTrain*m_nFeatures];

        f.read ( ( char* ) m_x[cross], sizeof ( REAL ) *m_nTrain*m_nClass*m_nDomain );
        f.read ( ( char* ) m_trainMatrix, sizeof ( REAL ) *m_nTrain*m_nFeatures );
        f.read ( ( char* ) &m_maxSwing, sizeof ( double ) );
        f.read ( ( char* ) &m_reg, sizeof ( double ) );
        f.read ( ( char* ) &m_polyScale, sizeof ( double ) );
        f.read ( ( char* ) &m_polyBiasPos, sizeof ( double ) );
        f.read ( ( char* ) &m_polyBiasNeg, sizeof ( double ) );
        f.read ( ( char* ) &m_polyPower, sizeof ( double ) );
        f.read ( ( char* ) &m_gaussSigma, sizeof ( double ) );
        f.read ( ( char* ) &m_tanhScale, sizeof ( double ) );
        f.read ( ( char* ) &m_tanhBiasPos, sizeof ( double ) );
        f.read ( ( char* ) &m_tanhBiasNeg, sizeof ( double ) );
        f.close();
    }

void KernelRidgeRegression::modelInit () [virtual]

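Initialize the kernel ridge regression model: register the tunable parameters (the regularization constant and the constants of the selected kernel) and allocate memory for the weights.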

Implements StandardAlgorithm.

Definition at line 66 of file KernelRidgeRegression.cpp.

    {
        // add the tunable parameter
        paramDoubleValues.push_back ( &m_reg );
        paramDoubleNames.push_back ( "reg" );

        if ( m_kernelType == "Poly" )
        {
            paramDoubleValues.push_back ( &m_polyScale );
            paramDoubleNames.push_back ( "polyScale" );
            if ( m_polyBiasPos != 0.0 )
            {
                paramDoubleValues.push_back ( &m_polyBiasPos );
                paramDoubleNames.push_back ( "polyBiasPos" );
            }
            if ( m_polyBiasNeg != 0.0 )
            {
                paramDoubleValues.push_back ( &m_polyBiasNeg );
                paramDoubleNames.push_back ( "polyBiasNeg" );
            }
            paramDoubleValues.push_back ( &m_polyPower );
            paramDoubleNames.push_back ( "polyPower" );
        }

        if ( m_kernelType == "Gauss" )
        {
            paramDoubleValues.push_back ( &m_gaussSigma );
            paramDoubleNames.push_back ( "gaussSigma" );
        }

        if ( m_kernelType == "Tanh" )
        {
            paramDoubleValues.push_back ( &m_tanhScale );
            paramDoubleNames.push_back ( "tanhScale" );
            if ( m_tanhBiasPos != 0.0 )
            {
                paramDoubleValues.push_back ( &m_tanhBiasPos );
                paramDoubleNames.push_back ( "tanhBiasPos" );
            }
            if ( m_tanhBiasNeg != 0.0 )
            {
                paramDoubleValues.push_back ( &m_tanhBiasNeg );
                paramDoubleNames.push_back ( "tanhBiasNeg" );
            }
        }

        // alloc mem for weights
        if ( m_x == 0 )
        {
            m_x = new REAL*[m_nCross+1];
            for ( uint i=0;i<m_nCross+1;i++ )
                m_x[i] = new REAL[m_nTrain * m_nClass * m_nDomain];
        }
    }

void KernelRidgeRegression::modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) [virtual]

Update the model: train on a new cross-validation set, or on the whole training set for retraining.

Parameters:

- input: Pointer to input (can be cross validation set, or whole training set) (rows x nFeatures)
- target: The targets (can be cross validation targets)
- nSamples: The sample size (rows) in input
- crossRun: The cross validation run (for training)

Implements StandardAlgorithm.

Definition at line 269 of file KernelRidgeRegression.cpp.

    {
        // gram matrix
        REAL* K = new REAL[nSamples*nSamples];
        REAL* y = new REAL[nSamples*m_nClass*m_nDomain];

        int lda = nSamples, nrhs = m_nClass*m_nDomain, ldb = nSamples, info = -1, maxStabilizeEpochs = 3, stabilizeEpoch = 0;

        // do Cholesky factorization until it becomes stable
        while ( info != 0 && stabilizeEpoch < maxStabilizeEpochs )
        {
            if ( m_kernelType == "Linear" )
            {
                CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, nSamples, m_nFeatures, 1.0, input, m_nFeatures, input, m_nFeatures, 0.0, K, nSamples );
            }
            else if ( m_kernelType == "Poly" )
            {
                CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, nSamples, m_nFeatures, 1.0, input, m_nFeatures, input, m_nFeatures, 0.0, K, nSamples );

                V_MULCI ( m_polyScale, K, nSamples*nSamples );
                V_ADDCI ( m_polyBiasPos - m_polyBiasNeg, K, nSamples*nSamples );

                // slower, but memory efficient
                for ( uint i=0;i<nSamples*nSamples;i++ )
                {
                    if ( K[i]>=0.0 )
                        K[i] = pow ( K[i], m_polyPower );
                    else
                        K[i] = -pow ( -K[i], m_polyPower );
                }
            }
            else if ( m_kernelType == "Gauss" )
            {
                // this code is slower, but correct
                /*for(uint i=0;i<nSamples;i++)
                {
                    REAL *ptr0 = input + i*m_nFeatures;
                    for(uint j=0;j<nSamples;j++)
                    {
                        REAL *ptr1 = input + j*m_nFeatures;

                        double sum = 0.0;
                        for(uint k=0;k<m_nFeatures;k++)
                            sum += (ptr0[k] - ptr1[k]) * (ptr0[k] - ptr1[k]);

                        K[i*nSamples+j] = exp(-sum/(m_gaussSigma*m_gaussSigma));
                    }
                }*/
                // speedup calculation by using BLAS in x'y: ||x-y||^2 = sum(x.^2) - 2 * x'y + sum(y.^2)
                REAL* squaredSum = new REAL[nSamples];
                for ( uint i=0;i<nSamples;i++ )
                {
                    double sum = 0.0;
                    REAL *ptr = input + i*m_nFeatures;
                    for ( uint k=0;k<m_nFeatures;k++ )
                        sum += ptr[k]*ptr[k];
                    squaredSum[i] = sum;
                }

                CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, nSamples, m_nFeatures, 1.0, input, m_nFeatures, input, m_nFeatures, 0.0, K, nSamples );

                for ( uint i=0;i<nSamples;i++ )
                {
                    REAL *ptr = K + i*nSamples, sqSum = squaredSum[i];
                    for ( uint j=0;j<nSamples;j++ )
                        ptr[j] = sqSum + squaredSum[j] - 2.0 * ptr[j];  // sum = sum_k (ptr0[k] - ptr1[k]) * (ptr0[k] - ptr1[k])
                }

                REAL c0 = - 1.0 / ( m_gaussSigma * m_gaussSigma );
                for ( uint i=0;i<nSamples*nSamples;i++ )
                    K[i] *= c0;

                // block-wise apply of the exp-function
                uint blockSize = 10000000;
                assert ( nSamples*nSamples < 4200000000 );
                for ( uint i=0;i<nSamples*nSamples && i<4200000000;i+=blockSize )
                {
                    int nElem = nSamples*nSamples - i;
                    if ( nSamples*nSamples - i > blockSize )
                        nElem = blockSize;
                    V_EXP ( K+i, nElem );
                }

                delete[] squaredSum;
            }
            else if ( m_kernelType == "Tanh" )
            {
                CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, nSamples, m_nFeatures, 1.0, input, m_nFeatures, input, m_nFeatures, 0.0, K, nSamples );

                V_MULCI ( m_tanhScale, K, nSamples*nSamples );
                V_ADDCI ( m_tanhBiasPos - m_tanhBiasNeg, K, nSamples*nSamples );
                V_TANH ( nSamples*nSamples, K, K );
            }
            else
                assert ( false );

            for ( uint i=0;i<nSamples;i++ )
                K[i+i*nSamples] += ( REAL ) nSamples * m_reg;

            // copy targets to a tmp matrix
            for ( uint i=0;i<nrhs;i++ )
                for ( uint j=0;j<nSamples;j++ )
                    y[j+i*nSamples] = target[i+j*nrhs];  // copy to colMajor

            //Cholesky factorization of a symmetric (Hermitian) positive-definite matrix
            LAPACK_POTRF ( "U", &lda, K, &lda, &info );

            // no stabilizing in cross-fold training
            if ( crossRun != m_nCross )
                info = 0;

            if ( info != 0 )
            {
                m_reg *= 1.1;
                cout<<"[retraining] Stabilize reg:"<<m_reg<<" (info:"<<info<<")"<<endl;
                stabilizeEpoch++;
            }
        }

        // Solves a system of linear equations with a Cholesky-factored symmetric (Hermitian) positive-definite matrix
        LAPACK_POTRS ( "U", &lda, &nrhs, K, &lda, y, &ldb, &info );  // Y=solution vector

        if ( info != 0 )
            cout<<"error: equation solver failed to converge: "<<info<<endl;

        for ( uint i=0;i<nSamples;i++ )
            for ( uint j=0;j<m_nClass*m_nDomain;j++ )
                m_x[crossRun][j+i*m_nClass*m_nDomain] = y[i+j*nSamples];  // copy to rowMajor

        delete[] y;
        delete[] K;
    }
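The training step in the listing above (build the Gram matrix, add nSamples * m_reg to the diagonal, factor with Cholesky, then solve) can be illustrated without BLAS or LAPACK. The following is a minimal sketch, not the class's implementation: it assumes a linear kernel, a single output column and double precision, and the names choleskyFactor, choleskySolve and fitLinearKrr are hypothetical helpers introduced only for this example. The retry loop mirrors the listing's stabilization step, which multiplies the regularization by 1.1 after a failed factorization, for at most three attempts.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// In-place Cholesky factorization A = L * L^T of a symmetric n x n matrix
// (row-major). Only the lower triangle is read and overwritten with L.
// Returns false on a non-positive pivot, i.e. A is not positive definite.
bool choleskyFactor(std::vector<double>& a, std::size_t n)
{
    for (std::size_t j = 0; j < n; ++j) {
        double d = a[j * n + j];
        for (std::size_t k = 0; k < j; ++k)
            d -= a[j * n + k] * a[j * n + k];
        if (!(d > 0.0))
            return false;
        a[j * n + j] = std::sqrt(d);
        for (std::size_t i = j + 1; i < n; ++i) {
            double s = a[i * n + j];
            for (std::size_t k = 0; k < j; ++k)
                s -= a[i * n + k] * a[j * n + k];
            a[i * n + j] = s / a[j * n + j];
        }
    }
    return true;
}

// Solve (L * L^T) x = b, with L stored in the lower triangle of l.
std::vector<double> choleskySolve(const std::vector<double>& l, std::size_t n,
                                  std::vector<double> b)
{
    for (std::size_t i = 0; i < n; ++i) {          // forward: L z = b
        double s = b[i];
        for (std::size_t k = 0; k < i; ++k)
            s -= l[i * n + k] * b[k];
        b[i] = s / l[i * n + i];
    }
    for (std::size_t i = n; i-- > 0;) {            // backward: L^T x = z
        double s = b[i];
        for (std::size_t k = i + 1; k < n; ++k)
            s -= l[k * n + i] * b[k];
        b[i] = s / l[i * n + i];
    }
    return b;
}

// Fit weights alpha for a linear kernel: (X X^T + n * reg * I) alpha = y.
// x is n x d row-major. reg is increased by 10% after each failed
// factorization (at most three attempts); an empty vector signals failure.
std::vector<double> fitLinearKrr(const std::vector<double>& x, std::size_t n,
                                 std::size_t d, const std::vector<double>& y,
                                 double& reg)
{
    assert(x.size() == n * d && y.size() == n);
    const int maxStabilizeEpochs = 3;
    for (int epoch = 0; epoch < maxStabilizeEpochs; ++epoch) {
        std::vector<double> k(n * n, 0.0);
        for (std::size_t i = 0; i < n; ++i)
            for (std::size_t j = 0; j < n; ++j)
                for (std::size_t f = 0; f < d; ++f)
                    k[i * n + j] += x[i * d + f] * x[j * d + f];
        for (std::size_t i = 0; i < n; ++i)
            k[i * n + i] += static_cast<double>(n) * reg;
        if (choleskyFactor(k, n))
            return choleskySolve(k, n, y);
        reg *= 1.1;  // stabilize and retry
    }
    return {};
}
```

For two one-dimensional samples x = {1, 2} with targets y = {1, 2} and reg = 0.5, the regularized Gram matrix is [[2, 2], [2, 5]] and the sketch returns the weights (1/6, 1/3). Unlike this sketch, the listing only retries when retraining on the full set (crossRun equal to m_nCross) and still calls the solver after the last failed attempt.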

void KernelRidgeRegression::predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) [virtual]

Prediction for outside use: predicts outputs from raw input values.

Parameters:

- rawInputs: The input features, without normalization (raw)
- outputs: The output values (prediction of target)
- nSamples: The input size (number of rows)
- crossRun: Number of the cross validation run (in training)

Implements StandardAlgorithm.

Definition at line 129 of file KernelRidgeRegression.cpp.

    {
        REAL *K, *A;
        uint m, n = m_nFeatures;

        // predict mode
        if ( Framework::getFrameworkMode() == 1 )
        {
            A = m_trainMatrix;
            K = new REAL[nSamples*m_nTrain];
            m = m_nTrain;
        }
        else  // train mode
        {
            A = m_validationType=="ValidationSet" ? m_trainOrig : m_train[crossRun];
            K = new REAL[nSamples*m_trainSize[crossRun]];
            m = m_trainSize[crossRun];
        }

        if ( m_kernelType == "Linear" )
        {
            CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, m, n, 1.0, rawInputs, n, A, n, 0.0, K, m );  // K = rawInputs*A'
        }
        else if ( m_kernelType == "Poly" )
        {
            CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, m, n, 1.0, rawInputs, n, A, n, 0.0, K, m );  // K = rawInputs*A'

            V_MULCI ( m_polyScale, K, m*nSamples );
            V_ADDCI ( m_polyBiasPos - m_polyBiasNeg, K, m*nSamples );

            for ( uint i=0;i<m*nSamples;i++ )
            {
                if ( K[i]>=0.0 )
                    K[i] = pow ( K[i], m_polyPower );
                else
                    K[i] = -pow ( -K[i], m_polyPower );
            }
        }
        else if ( m_kernelType == "Gauss" )
        {
            // this code is slower, but correct
            /*for(uint i=0;i<nSamples;i++)
            {
                REAL *ptr0 = rawInputs + i*m_nFeatures;
                for(uint j=0;j<m;j++)
                {
                    REAL *ptr1 = A + j*m_nFeatures;

                    double sum = 0.0;
                    for(uint k=0;k<m_nFeatures;k++)
                        sum += (ptr0[k] - ptr1[k]) * (ptr0[k] - ptr1[k]);

                    K[i*m+j] = exp(-sum/(m_gaussSigma*m_gaussSigma));
                }
            }*/
            // speedup calculation by using BLAS in x'y: ||x-y||^2 = sum(x.^2) - 2 * x'y + sum(y.^2)
            REAL* squaredSumInputs = new REAL[nSamples];
            REAL* squaredSumData = new REAL[m];
            for ( uint i=0;i<nSamples;i++ )
            {
                double sum = 0.0;
                REAL *ptr = rawInputs + i*m_nFeatures;
                for ( uint k=0;k<m_nFeatures;k++ )
                    sum += ptr[k]*ptr[k];
                squaredSumInputs[i] = sum;  // input feature squared sum
            }
            for ( uint i=0;i<m;i++ )
            {
                double sum = 0.0;
                REAL *ptr = A + i*m_nFeatures;
                for ( uint k=0;k<m_nFeatures;k++ )
                    sum += ptr[k]*ptr[k];
                squaredSumData[i] = sum;  // data feature squared sum
            }

            CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, m, n, 1.0, rawInputs, n, A, n, 0.0, K, m );  // K = rawInputs*A'

            for ( uint i=0;i<nSamples;i++ )
            {
                REAL* ptr = K + i*m, sqSumIn = squaredSumInputs[i];
                for ( uint j=0;j<m;j++ )
                    ptr[j] = squaredSumData[j] + sqSumIn - 2.0 * ptr[j];
            }

            REAL c0 = - 1.0 / ( m_gaussSigma * m_gaussSigma );
            for ( uint i=0;i<m*nSamples;i++ )
                K[i] *= c0;

            // block-wise apply of the exp-function (due to limits in the V_EXP)
            uint blockSize = 10000000;
            assert ( m*nSamples < 4200000000 );
            for ( uint i=0;i<m*nSamples && i<4200000000;i+=blockSize )
            {
                int nElem = m*nSamples - i;
                if ( m*nSamples - i > blockSize )
                    nElem = blockSize;
                V_EXP ( K+i, nElem );
            }

            delete[] squaredSumInputs;
            delete[] squaredSumData;
        }
        else if ( m_kernelType == "Tanh" )
        {
            CBLAS_GEMM ( CblasRowMajor, CblasNoTrans, CblasTrans, nSamples, m, n, 1.0, rawInputs, n, A, n, 0.0, K, m );  // K = rawInputs*A'
            V_MULCI ( m_tanhScale, K, m*nSamples );
            V_ADDCI ( m_tanhBiasPos - m_tanhBiasNeg, K, m*nSamples );
            //for(int i=0;i<m*nSamples;i++)
            //    cout<<K[i]<<" "<<flush;
            V_TANH ( m*nSamples, K, K );
        }
        else
            assert ( false );

        for ( uint i=0;i<nSamples;i++ )
        {
            for ( uint j=0;j<m_nClass*m_nDomain;j++ )
            {
                double sum = 0.0;
                REAL* ptr = K + i*m;
                for ( uint k=0;k<m;k++ )
                    sum += ptr[k] * m_x[crossRun][k*m_nClass*m_nDomain + j];
                if ( isnan ( sum ) || isinf ( sum ) )
                    sum = 0.0;
                outputs[i*m_nClass*m_nDomain + j] = sum;
            }
        }

        delete[] K;
    }
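The Gaussian branch of the listing above relies on the identity ||x - y||^2 = ||x||^2 + ||y||^2 - 2 x'y, so that all dot products come from one matrix product. The sketch below compares that route with the pair-by-pair evaluation kept in the commented-out code, and then forms predictions as kernel-weighted sums. It is an illustration under assumptions rather than the class's code: plain loops stand in for CBLAS_GEMM and V_EXP, double replaces REAL, a single output column is used, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Reference version: k(a_i, b_j) = exp(-||a_i - b_j||^2 / sigma^2),
// evaluated pair by pair. a is na x d, b is nb x d, both row-major.
std::vector<double> gaussKernelDirect(const std::vector<double>& a, std::size_t na,
                                      const std::vector<double>& b, std::size_t nb,
                                      std::size_t d, double sigma)
{
    assert(a.size() == na * d && b.size() == nb * d && sigma != 0.0);
    std::vector<double> k(na * nb);
    for (std::size_t i = 0; i < na; ++i)
        for (std::size_t j = 0; j < nb; ++j) {
            double sum = 0.0;
            for (std::size_t f = 0; f < d; ++f) {
                const double diff = a[i * d + f] - b[j * d + f];
                sum += diff * diff;
            }
            k[i * nb + j] = std::exp(-sum / (sigma * sigma));
        }
    return k;
}

// Squared Euclidean norm of every row of an n x d row-major matrix.
std::vector<double> squaredNorms(const std::vector<double>& x, std::size_t n,
                                 std::size_t d)
{
    std::vector<double> sq(n, 0.0);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t f = 0; f < d; ++f)
            sq[i] += x[i * d + f] * x[i * d + f];
    return sq;
}

// Expanded version: ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a'b.
std::vector<double> gaussKernelExpanded(const std::vector<double>& a, std::size_t na,
                                        const std::vector<double>& b, std::size_t nb,
                                        std::size_t d, double sigma)
{
    assert(a.size() == na * d && b.size() == nb * d && sigma != 0.0);
    const std::vector<double> sqA = squaredNorms(a, na, d);
    const std::vector<double> sqB = squaredNorms(b, nb, d);
    std::vector<double> k(na * nb);
    for (std::size_t i = 0; i < na; ++i)
        for (std::size_t j = 0; j < nb; ++j) {
            double dot = 0.0;  // the listing obtains all dot products from one GEMM call
            for (std::size_t f = 0; f < d; ++f)
                dot += a[i * d + f] * b[j * d + f];
            const double dist2 = sqA[i] + sqB[j] - 2.0 * dot;
            k[i * nb + j] = std::exp(-dist2 / (sigma * sigma));
        }
    return k;
}

// outputs[i] = sum_j k[i][j] * alpha[j]; as in the listing, a non-finite
// sum is replaced by 0.
std::vector<double> predictFromKernel(const std::vector<double>& k, std::size_t na,
                                      std::size_t nb, const std::vector<double>& alpha)
{
    assert(k.size() == na * nb && alpha.size() == nb);
    std::vector<double> out(na, 0.0);
    for (std::size_t i = 0; i < na; ++i) {
        double sum = 0.0;
        for (std::size_t j = 0; j < nb; ++j)
            sum += k[i * nb + j] * alpha[j];
        out[i] = std::isfinite(sum) ? sum : 0.0;
    }
    return out;
}
```

The two kernel routines agree up to floating-point rounding. The expanded form can produce a slightly negative squared distance for nearly identical rows because of cancellation; the listing does not clamp such values, and neither does this sketch.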

void KernelRidgeRegression::readSpecificMaps () [virtual]

Read the algorithm-specific values from the description file.

Implements StandardAlgorithm.

Definition at line 45 of file KernelRidgeRegression.cpp.

    {
        m_reg = m_doubleMap["initReg"];
        m_kernelType = m_stringMap["kernelType"];

        m_polyScale = m_doubleMap["polyScaleInit"];
        m_polyBiasPos = m_doubleMap["polyBiasPosInit"];
        m_polyBiasNeg = m_doubleMap["polyBiasNegInit"];
        m_polyPower = m_doubleMap["polyPowerInit"];

        m_gaussSigma = m_doubleMap["gaussSigmaInit"];

        m_tanhScale = m_doubleMap["tanhScaleInit"];
        m_tanhBiasPos = m_doubleMap["tanhBiasPosInit"];
        m_tanhBiasNeg = m_doubleMap["tanhBiasNegInit"];
    }

void KernelRidgeRegression::saveWeights (int cross) [virtual]

Save the weights and all other parameters needed to load the complete prediction model.

Implements StandardAlgorithm.

Definition at line 406 of file KernelRidgeRegression.cpp.

    {
        char buf[1024];
        sprintf ( buf,"%02d",cross );
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf;
        if ( m_inRetraining )
            cout<<"Save:"<<name<<endl;
        fstream f ( name.c_str(), ios::out );
        f.write ( ( char* ) &m_nTrain, sizeof ( int ) );
        f.write ( ( char* ) &m_nFeatures, sizeof ( int ) );
        f.write ( ( char* ) &m_nClass, sizeof ( int ) );
        f.write ( ( char* ) &m_nDomain, sizeof ( int ) );
        f.write ( ( char* ) m_x[cross], sizeof ( REAL ) *m_nTrain*m_nClass*m_nDomain );
        if(m_validationType == "ValidationSet")
            f.write ( ( char* ) m_trainOrig, sizeof ( REAL ) *m_nTrain*m_nFeatures );
        else
        {
            if(m_enableSaveMemory && m_train[cross]==0)
                fillNCrossValidationSet (cross);
            f.write ( ( char* ) m_train[cross], sizeof ( REAL ) *m_nTrain*m_nFeatures );
            if ( m_enableSaveMemory && m_train[cross]==0)
                freeNCrossValidationSet (cross);
        }
        f.write ( ( char* ) &m_maxSwing, sizeof ( double ) );
        f.write ( ( char* ) &m_reg, sizeof ( double ) );
        f.write ( ( char* ) &m_polyScale, sizeof ( double ) );
        f.write ( ( char* ) &m_polyBiasPos, sizeof ( double ) );
        f.write ( ( char* ) &m_polyBiasNeg, sizeof ( double ) );
        f.write ( ( char* ) &m_polyPower, sizeof ( double ) );
        f.write ( ( char* ) &m_gaussSigma, sizeof ( double ) );
        f.write ( ( char* ) &m_tanhScale, sizeof ( double ) );
        f.write ( ( char* ) &m_tanhBiasPos, sizeof ( double ) );
        f.write ( ( char* ) &m_tanhBiasNeg, sizeof ( double ) );
        f.close();
    }
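saveWeights and loadWeights agree on the file layout only by writing and reading the fields in the same order: four int header fields, the weight array, the training matrix, then the double-valued parameters. The sketch below shows that pattern in a reduced form, under stated assumptions: KrrHeader, writeRaw, readRaw, writeModel and readModel are hypothetical names, float stands in for REAL (whose definition is not shown on this page), and the training matrix and all parameters except the regularization constant are left out for brevity. Two choices differ from the listings and are not taken from the source: the round trip uses a stream opened with std::ios::binary, which avoids newline translation on platforms that perform it, and read failures are reported through a return value instead of assert.

```cpp
#include <cassert>
#include <cstddef>
#include <ios>
#include <istream>
#include <ostream>
#include <sstream>   // only needed for an in-memory round trip
#include <vector>

// Hypothetical stand-in for the four integer header fields of the weight file.
struct KrrHeader {
    int nTrain;
    int nFeatures;
    int nClass;
    int nDomain;
};

// Write the raw bytes of one trivially copyable value.
template <typename T>
void writeRaw(std::ostream& out, const T& value)
{
    out.write(reinterpret_cast<const char*>(&value), sizeof(T));
}

// Read one value; the target is only modified if the read succeeded.
template <typename T>
bool readRaw(std::istream& in, T& value)
{
    T tmp{};
    in.read(reinterpret_cast<char*>(&tmp), sizeof(T));
    if (!in)
        return false;
    value = tmp;
    return true;
}

// Field order: header, weights (nTrain * nClass * nDomain values), reg.
void writeModel(std::ostream& out, const KrrHeader& h,
                const std::vector<float>& weights, double reg)
{
    assert(h.nTrain >= 0 && h.nClass >= 0 && h.nDomain >= 0);
    assert(weights.size() == static_cast<std::size_t>(h.nTrain) * h.nClass * h.nDomain);
    writeRaw(out, h.nTrain);
    writeRaw(out, h.nFeatures);
    writeRaw(out, h.nClass);
    writeRaw(out, h.nDomain);
    out.write(reinterpret_cast<const char*>(weights.data()),
              static_cast<std::streamsize>(weights.size() * sizeof(float)));
    writeRaw(out, reg);
}

// Reads the fields back in the same order; returns false on a short or
// inconsistent file and leaves the outputs untouched in that case.
bool readModel(std::istream& in, KrrHeader& h, std::vector<float>& weights, double& reg)
{
    KrrHeader tmp = {0, 0, 0, 0};
    if (!readRaw(in, tmp.nTrain) || !readRaw(in, tmp.nFeatures) ||
        !readRaw(in, tmp.nClass) || !readRaw(in, tmp.nDomain))
        return false;
    if (tmp.nTrain < 0 || tmp.nFeatures < 0 || tmp.nClass < 0 || tmp.nDomain < 0)
        return false;
    const std::size_t count = static_cast<std::size_t>(tmp.nTrain) * tmp.nClass * tmp.nDomain;
    std::vector<float> w(count);
    in.read(reinterpret_cast<char*>(w.data()),
            static_cast<std::streamsize>(count * sizeof(float)));
    double r = 0.0;
    if (!in || !readRaw(in, r))
        return false;
    h = tmp;
    weights.swap(w);
    reg = r;
    return true;
}
```

Writing a model into a std::stringstream opened with std::ios::in | std::ios::out | std::ios::binary and reading it back with readModel returns the same header, weights and regularization value. A file written this way is only portable between builds that share the same sizes and byte order for int, float and double, which also applies to the listings above.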

string KernelRidgeRegression::templateGenerator (int id, string preEffect, int nameID, bool blendStop) [static]

Generates a template of the description file

Returns: The template string

Definition at line 522 of file KernelRidgeRegression.cpp.

    {
        stringstream s;
        s<<"ALGORITHM=KernelRidgeRegression"<<endl;
        s<<"ID="<<id<<endl;
        s<<"TRAIN_ON_FULLPREDICTOR="<<preEffect<<endl;
        s<<"DISABLE=0"<<endl;
        s<<endl;
        s<<"[int]"<<endl;
        s<<"maxTuninigEpochs=20"<<endl;
        s<<endl;
        s<<"[double]"<<endl;
        s<<"initMaxSwing=1.0"<<endl;
        s<<"initReg=0.1"<<endl;
        s<<"polyScaleInit=1.0"<<endl;
        s<<"polyBiasPosInit=10.0"<<endl;
        s<<"polyBiasNegInit=0.01"<<endl;
        s<<"polyPowerInit=6.0"<<endl;
        s<<"gaussSigmaInit=5.0"<<endl;
        s<<"tanhScaleInit=1.0"<<endl;
        s<<"tanhBiasPosInit=0.1"<<endl;
        s<<"tanhBiasNegInit=0.1"<<endl;
        s<<"[bool]"<<endl;
        s<<"enableClipping=1"<<endl;
        s<<"enableTuneSwing=0"<<endl;
        s<<endl;
        s<<"minimzeProbe="<< ( !blendStop ) <<endl;
        s<<"minimzeProbeClassificationError=0"<<endl;
        s<<"minimzeBlend="<<blendStop<<endl;
        s<<"minimzeBlendClassificationError=0"<<endl;
        s<<endl;
        s<<"[string]"<<endl;
        s<<"kernelType=Gauss"<<endl;
        s<<"weightFile="<<"KernelRidgeRegression_"<<nameID<<"_weights.dat"<<endl;
        s<<"fullPrediction=KernelRidgeRegression_"<<nameID<<".dat"<<endl;

        return s.str();
    }
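To make the result concrete, this is the text the listing above would produce for one set of arguments. The argument values are chosen here purely for illustration and do not come from the source: id = 1, an empty preEffect string, nameID = 1 and blendStop = false. The key spellings (maxTuninigEpochs, minimzeProbe and so on) are reproduced exactly as they appear in the listing.

```
ALGORITHM=KernelRidgeRegression
ID=1
TRAIN_ON_FULLPREDICTOR=
DISABLE=0

[int]
maxTuninigEpochs=20

[double]
initMaxSwing=1.0
initReg=0.1
polyScaleInit=1.0
polyBiasPosInit=10.0
polyBiasNegInit=0.01
polyPowerInit=6.0
gaussSigmaInit=5.0
tanhScaleInit=1.0
tanhBiasPosInit=0.1
tanhBiasNegInit=0.1
[bool]
enableClipping=1
enableTuneSwing=0

minimzeProbe=1
minimzeProbeClassificationError=0
minimzeBlend=0
minimzeBlendClassificationError=0

[string]
kernelType=Gauss
weightFile=KernelRidgeRegression_1_weights.dat
fullPrediction=KernelRidgeRegression_1.dat
```

The keys initReg, kernelType and the various Init constants are the ones readSpecificMaps looks up, so a description file generated this way selects the Gaussian kernel with an initial regularization of 0.1.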

The documentation for this class was generated from the following files:

- KernelRidgeRegression.h
- KernelRidgeRegression.cpp
