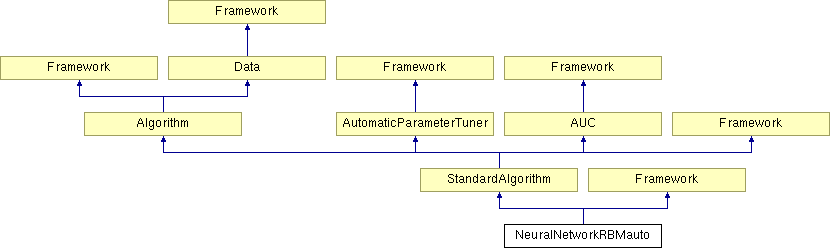

NeuralNetworkRBMauto Class Reference

#include <NeuralNetworkRBMauto.h>

Public Member Functions

| Type | Name |
| --- | --- |
| | NeuralNetworkRBMauto () |
| | ~NeuralNetworkRBMauto () |
| virtual void | modelInit () |
| virtual void | modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) |
| virtual void | predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) |
| virtual void | readSpecificMaps () |
| virtual void | saveWeights (int cross) |
| virtual void | loadWeights (int cross) |
| virtual void | loadMetaWeights (int cross) |

Static Public Member Functions

| Type | Name |
| --- | --- |
| static string | templateGenerator (int id, string preEffect, int nameID, bool blendStop) |

Private Attributes

| Type | Name |
| --- | --- |
| REAL ** | m_inputs |
| NNRBM ** | m_nn |
| int | m_epoch |
| bool * | m_isFirstEpoch |
| int | m_nrLayerAuto |
| int | m_nrLayerNetHidden |
| int | m_batchSize |
| int | m_tuningEpochsRBMFinetuning |
| int | m_tuningEpochsOutputNet |
| double | m_offsetOutputs |
| double | m_scaleOutputs |
| double | m_initWeightFactor |
| double | m_learnrate |
| double | m_learnrateAutoencoderFinetuning |
| double | m_learnrateMinimum |
| double | m_learnrateSubtractionValueAfterEverySample |
| double | m_learnrateSubtractionValueAfterEveryEpoch |
| double | m_momentum |
| double | m_weightDecay |
| double | m_minUpdateErrorBound |
| double | m_etaPosRPROP |
| double | m_etaNegRPROP |
| double | m_minUpdateRPROP |
| double | m_maxUpdateRPROP |
| bool | m_enableL1Regularization |
| bool | m_enableErrorFunctionMAE |
| bool | m_enableRPROP |
| bool | m_useBLASforTraining |
| string | m_neuronsPerLayerAuto |
| string | m_neuronsPerLayerNetHidden |

Detailed Description

EXPERIMENTAL ALGORITHM! Neural network with an autoencoder for real-valued prediction. The autoencoder weights are pretrained by an RBM (restricted Boltzmann machine); the pretraining is intended to give a good starting point for the subsequent backpropagation optimization (fine-tuning).

The algorithm makes use of the NNRBM class.

Definition at line 21 of file NeuralNetworkRBMauto.h.

Constructor & Destructor Documentation

NeuralNetworkRBMauto::NeuralNetworkRBMauto ()

Constructor

Definition at line 8 of file NeuralNetworkRBMauto.cpp.

    {
        cout<<"NeuralNetworkRBMauto"<<endl;
        // init member vars
        m_inputs = 0;
        m_nn = 0;
        m_epoch = 0;
        m_nrLayerAuto = 0;
        m_nrLayerNetHidden = 0;
        m_batchSize = 0;
        m_offsetOutputs = 0;
        m_scaleOutputs = 0;
        m_initWeightFactor = 0;
        m_learnrate = 0;
        m_learnrateMinimum = 0;
        m_learnrateSubtractionValueAfterEverySample = 0;
        m_learnrateSubtractionValueAfterEveryEpoch = 0;
        m_momentum = 0;
        m_weightDecay = 0;
        m_minUpdateErrorBound = 0;
        m_etaPosRPROP = 0;
        m_etaNegRPROP = 0;
        m_minUpdateRPROP = 0;
        m_maxUpdateRPROP = 0;
        m_enableRPROP = 0;
        m_useBLASforTraining = 0;
        m_enableL1Regularization = 0;
        m_enableErrorFunctionMAE = 0;
        m_isFirstEpoch = 0;
        m_tuningEpochsRBMFinetuning = 0;
        m_tuningEpochsOutputNet = 0;
        m_learnrateAutoencoderFinetuning = 0;
    }

NeuralNetworkRBMauto::~NeuralNetworkRBMauto ()

Destructor

Definition at line 45 of file NeuralNetworkRBMauto.cpp.

    {
        cout<<"destructor NeuralNetworkRBMauto"<<endl;

        // m_nn is still 0 if neither modelInit() nor loadWeights() was called
        if ( m_nn )
        {
            for ( int i=0;i<m_nCross+1;i++ )
            {
                if ( m_nn[i] )
                    delete m_nn[i];
                m_nn[i] = 0;
            }
            delete[] m_nn;
        }
        m_nn = 0;

        if ( m_isFirstEpoch )
            delete[] m_isFirstEpoch;
        m_isFirstEpoch = 0;
    }
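The destructor above releases an array of `m_nCross+1` network pointers by hand. As a minimal sketch of the same ownership pattern using standard containers, the following may be useful; `Net` and `NetPool` are hypothetical names introduced only for illustration and are not part of this class or of NNRBM.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for NNRBM; the real class is not shown on this page.
struct Net
{
    int id = 0;
};

// Owns one optional network per cross-validation run plus one for retraining.
class NetPool
{
public:
    explicit NetPool ( int nCross )
    {
        assert ( nCross >= 0 );
        // nCross + 1 empty slots, mirroring the m_nCross+1 array above
        m_nets.resize ( static_cast<std::size_t> ( nCross ) + 1 );
    }

    // Create the network in slot i on first use and return it.
    Net& get ( std::size_t i )
    {
        if ( !m_nets.at ( i ) )
            m_nets[i].reset ( new Net() );
        return *m_nets[i];
    }

    std::size_t slots() const { return m_nets.size(); }
    bool isAllocated ( std::size_t i ) const { return m_nets.at ( i ) != nullptr; }

private:
    // Each unique_ptr frees its network automatically; no destructor is needed.
    std::vector<std::unique_ptr<Net> > m_nets;
};
```

With this layout, an object whose slots were never filled is destroyed safely, because an empty `unique_ptr` has nothing to release.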

Member Function Documentation

void NeuralNetworkRBMauto::loadWeights (int cross) [virtual]

Load the weights and all other parameters and make the model ready to predict

Implements StandardAlgorithm.

Definition at line 325 of file NeuralNetworkRBMauto.cpp.

    {
        // load weights
        char buf[1024];
        sprintf ( buf,"%02d",cross );
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf;
        cout<<"Load:"<<name<<endl;
        // binary mode: the file holds raw int, REAL and double values
        fstream f ( name.c_str(), ios::in | ios::binary );
        if ( f.is_open() == false )
            assert ( false );
        f.read ( ( char* ) &m_nTrain, sizeof ( int ) );
        f.read ( ( char* ) &m_nFeatures, sizeof ( int ) );
        f.read ( ( char* ) &m_nClass, sizeof ( int ) );
        f.read ( ( char* ) &m_nDomain, sizeof ( int ) );

        // set up NNs (only the last one is used)
        m_nn = new NNRBM*[m_nCross+1];
        for ( int i=0;i<m_nCross+1;i++ )
            m_nn[i] = 0;
        m_nn[cross] = new NNRBM();
        m_nn[cross]->setNrTargets ( m_nClass*m_nDomain );
        m_nn[cross]->setNrInputs ( m_nFeatures );
        m_nn[cross]->setNrExamplesTrain ( 0 );
        m_nn[cross]->setNrExamplesProbe ( 0 );
        m_nn[cross]->setTrainInputs ( 0 );
        m_nn[cross]->setTrainTargets ( 0 );
        m_nn[cross]->setProbeInputs ( 0 );
        m_nn[cross]->setProbeTargets ( 0 );

        // learn parameters
        m_nn[cross]->setInitWeightFactor ( m_initWeightFactor );
        m_nn[cross]->setLearnrate ( m_learnrate );
        m_nn[cross]->setLearnrateMinimum ( m_learnrateMinimum );
        m_nn[cross]->setLearnrateSubtractionValueAfterEverySample ( m_learnrateSubtractionValueAfterEverySample );
        m_nn[cross]->setLearnrateSubtractionValueAfterEveryEpoch ( m_learnrateSubtractionValueAfterEveryEpoch );
        m_nn[cross]->setMomentum ( m_momentum );
        m_nn[cross]->setWeightDecay ( m_weightDecay );
        m_nn[cross]->setMinUpdateErrorBound ( m_minUpdateErrorBound );
        m_nn[cross]->setBatchSize ( m_batchSize );
        m_nn[cross]->setMaxEpochs ( m_maxTuninigEpochs );
        m_nn[cross]->setL1Regularization ( m_enableL1Regularization );
        m_nn[cross]->enableErrorFunctionMAE ( m_enableErrorFunctionMAE );

        // set net inner structure
        int nrLayer = m_nrLayerAuto + m_nrLayerNetHidden;
        int* neuronsPerLayerAuto = Data::splitStringToIntegerList ( m_neuronsPerLayerAuto, ',' );
        int* neuronsPerLayerNetHidden = Data::splitStringToIntegerList ( m_neuronsPerLayerNetHidden, ',' );
        // sized as in modelInit(); layerType is written up to index nrLayer+1
        int* neurPerLayer = new int[nrLayer+1];
        int* layerType = new int[nrLayer+2];
        for ( int j=0;j<m_nrLayerAuto;j++ )
        {
            neurPerLayer[j] = neuronsPerLayerAuto[j];
            layerType[j] = 0;  // sig
        }
        layerType[m_nrLayerAuto] = 1;  // linear (code 1, as in modelInit())
        for ( int j=0;j<m_nrLayerNetHidden;j++ )
        {
            neurPerLayer[j+m_nrLayerAuto] = neuronsPerLayerNetHidden[j];
            layerType[j+m_nrLayerAuto+1] = 2;  // tanh
        }
        layerType[m_nrLayerNetHidden+m_nrLayerAuto+1] = 2;  // tanh

        m_nn[cross]->enableRPROP ( m_enableRPROP );
        m_nn[cross]->setNNStructure ( nrLayer+1, neurPerLayer, false, layerType );

        m_nn[cross]->setRPROPPosNeg ( m_etaPosRPROP, m_etaNegRPROP );
        m_nn[cross]->setRPROPMinMaxUpdate ( m_minUpdateRPROP, m_maxUpdateRPROP );
        m_nn[cross]->setScaleOffset ( m_scaleOutputs, m_offsetOutputs );
        m_nn[cross]->setNormalTrainStopping ( true );
        m_nn[cross]->enableRPROP ( m_enableRPROP );
        m_nn[cross]->useBLASforTraining ( m_useBLASforTraining );
        m_nn[cross]->initNNWeights ( m_randSeed );

        delete[] neuronsPerLayerAuto;
        delete[] neuronsPerLayerNetHidden;
        delete[] neurPerLayer;
        delete[] layerType;

        int n = 0;
        f.read ( ( char* ) &n, sizeof ( int ) );

        REAL* w = new REAL[n];

        f.read ( ( char* ) w, sizeof ( REAL ) *n );
        f.read ( ( char* ) &m_maxSwing, sizeof ( double ) );
        f.close();

        // init the NN weights
        m_nn[cross]->setWeights ( w );

        if ( w )
            delete[] w;
        w = 0;
    }
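The listing builds the weight file name from the dataset path, the temp path, the weight file name and the two-digit cross-validation index. A minimal sketch of that naming scheme as a free function follows; `weightFileName` is a hypothetical helper, and it uses `snprintf` with a small buffer instead of `sprintf` purely as an illustration choice.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Build "<datasetPath>/<tempPath>/<weightFile>.<NN>", where NN is the
// cross-validation index printed with at least two digits ("%02d").
std::string weightFileName ( const std::string& datasetPath,
                             const std::string& tempPath,
                             const std::string& weightFile,
                             int cross )
{
    assert ( cross >= 0 );
    char buf[16];
    // snprintf never writes more than sizeof(buf) bytes into buf.
    std::snprintf ( buf, sizeof ( buf ), "%02d", cross );
    return datasetPath + "/" + tempPath + "/" + weightFile + "." + buf;
}
```

For instance, `weightFileName ( "data", "tmp", "w.dat", 3 )` yields `data/tmp/w.dat.03`; the path components here are made-up values.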

void NeuralNetworkRBMauto::modelInit () [virtual]

Init the NN model

Implements StandardAlgorithm.

Definition at line 104 of file NeuralNetworkRBMauto.cpp.

    {
        // add tunable parameters
        m_epoch = 0;
        paramEpochValues.push_back ( &m_epoch );
        paramEpochNames.push_back ( "epoch" );

        // set up NNs
        // nCross + 1 (for retraining)
        if ( m_nn == 0 )
        {
            m_nn = new NNRBM*[m_nCross+1];
            for ( int i=0;i<m_nCross+1;i++ )
                m_nn[i] = 0;
        }
        for ( int i=0;i<m_nCross+1;i++ )
        {
            cout<<"Create a Neural Network ("<<i+1<<"/"<<m_nCross+1<<")"<<endl;
            if ( m_nn[i] == 0 )
                m_nn[i] = new NNRBM();
            m_nn[i]->setNrTargets ( m_nClass*m_nDomain );
            m_nn[i]->setNrInputs ( m_nFeatures );
            m_nn[i]->setNrExamplesTrain ( 0 );
            m_nn[i]->setNrExamplesProbe ( 0 );
            m_nn[i]->setTrainInputs ( 0 );
            m_nn[i]->setTrainTargets ( 0 );
            m_nn[i]->setProbeInputs ( 0 );
            m_nn[i]->setProbeTargets ( 0 );
            m_nn[i]->setGlobalEpochs ( 0 );

            // learn parameters
            m_nn[i]->setInitWeightFactor ( m_initWeightFactor );
            m_nn[i]->setLearnrate ( m_learnrate );
            m_nn[i]->setLearnrateMinimum ( m_learnrateMinimum );
            m_nn[i]->setLearnrateSubtractionValueAfterEverySample ( m_learnrateSubtractionValueAfterEverySample );
            m_nn[i]->setLearnrateSubtractionValueAfterEveryEpoch ( m_learnrateSubtractionValueAfterEveryEpoch );
            m_nn[i]->setMomentum ( m_momentum );
            m_nn[i]->setWeightDecay ( m_weightDecay );
            m_nn[i]->setMinUpdateErrorBound ( m_minUpdateErrorBound );
            m_nn[i]->setBatchSize ( m_batchSize );
            m_nn[i]->setMaxEpochs ( m_maxTuninigEpochs );
            m_nn[i]->setL1Regularization ( m_enableL1Regularization );
            m_nn[i]->enableErrorFunctionMAE ( m_enableErrorFunctionMAE );

            m_nn[i]->setRBMLearnParams ( m_doubleMap["rbmLearnrateWeights"], m_doubleMap["rbmLearnrateBiasVis"], m_doubleMap["rbmLearnrateBiasHid"], m_doubleMap["rbmWeightDecay"], m_doubleMap["rbmMaxEpochs"] );

            // set net inner structure
            int nrLayer = m_nrLayerAuto + m_nrLayerNetHidden;
            int* neuronsPerLayerAuto = Data::splitStringToIntegerList ( m_neuronsPerLayerAuto, ',' );
            int* neuronsPerLayerNetHidden = Data::splitStringToIntegerList ( m_neuronsPerLayerNetHidden, ',' );
            int* neurPerLayer = new int[nrLayer+1];
            int* layerType = new int[nrLayer+2];
            for ( int j=0;j<m_nrLayerAuto;j++ )
            {
                neurPerLayer[j] = neuronsPerLayerAuto[j];
                layerType[j] = 0;  // sig:0, linear:1, tanh:2, softmax:3
            }
            layerType[m_nrLayerAuto] = 1;  // sig:0, linear:1, tanh:2, softmax:3
            for ( int j=0;j<m_nrLayerNetHidden;j++ )
            {
                neurPerLayer[j+m_nrLayerAuto] = neuronsPerLayerNetHidden[j];
                layerType[j+m_nrLayerAuto+1] = 2;  // sig:0, linear:1, tanh:2, softmax:3
            }
            layerType[m_nrLayerNetHidden+m_nrLayerAuto+1] = 2;  // sig:0, linear:1, tanh:2, softmax:3

            m_nn[i]->enableRPROP ( m_enableRPROP );
            m_nn[i]->setNNStructure ( nrLayer+1, neurPerLayer, false, layerType );

            m_nn[i]->setScaleOffset ( m_scaleOutputs, m_offsetOutputs );
            m_nn[i]->setRPROPPosNeg ( m_etaPosRPROP, m_etaNegRPROP );
            m_nn[i]->setRPROPMinMaxUpdate ( m_minUpdateRPROP, m_maxUpdateRPROP );
            m_nn[i]->setNormalTrainStopping ( true );
            m_nn[i]->useBLASforTraining ( m_useBLASforTraining );
            m_nn[i]->initNNWeights ( m_randSeed );

            delete[] neuronsPerLayerAuto;
            delete[] neuronsPerLayerNetHidden;
            delete[] neurPerLayer;
            delete[] layerType;  // released here as in loadWeights()

            cout<<endl<<endl;
        }

        if ( m_isFirstEpoch == 0 )
            m_isFirstEpoch = new bool[m_nCross+1];
        for ( int i=0;i<m_nCross+1;i++ )
            m_isFirstEpoch[i] = false;
    }
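The layer-type array built in modelInit has `m_nrLayerAuto + m_nrLayerNetHidden + 2` entries: sigmoid for each autoencoder layer, one linear layer, then tanh for the hidden layers and the output layer. The sketch below reproduces only that layout with a `std::vector`; `buildLayerTypes` and the `LayerType` enum are hypothetical names, and the numeric codes are taken from the comments in the listing.

```cpp
#include <cassert>
#include <vector>

// Activation codes as given in the comments of modelInit().
enum LayerType { SIGMOID = 0, LINEAR = 1, TANH = 2, SOFTMAX = 3 };

// Return the activation code of every layer, in network order.
std::vector<int> buildLayerTypes ( int nrLayerAuto, int nrLayerNetHidden )
{
    assert ( nrLayerAuto >= 0 && nrLayerNetHidden >= 0 );
    std::vector<int> layerType;
    for ( int j = 0; j < nrLayerAuto; j++ )
        layerType.push_back ( SIGMOID );  // autoencoder layers
    layerType.push_back ( LINEAR );       // linear layer after the autoencoder layers
    for ( int j = 0; j < nrLayerNetHidden; j++ )
        layerType.push_back ( TANH );     // hidden layers of the output net
    layerType.push_back ( TANH );         // output layer
    return layerType;
}
```

Because the vector grows as entries are appended, its size always matches the number of entries written, which avoids having to keep a separate allocation size in sync with the loops.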

void NeuralNetworkRBMauto::modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) [virtual]

Make a model update, set the new cross validation set or set the whole training set for retraining

Parameters:

- input: Pointer to input (can be cross validation set, or whole training set) (rows x nFeatures)
- target: The targets (can be cross validation targets)
- nSamples: The sample size (rows) in input
- crossRun: The cross validation run (for training)

Implements StandardAlgorithm.

Definition at line 216 of file NeuralNetworkRBMauto.cpp.

    {
        m_nn[crossRun]->setTrainInputs ( input );
        m_nn[crossRun]->setTrainTargets ( target );
        m_nn[crossRun]->setNrExamplesTrain ( nSamples );

        if ( crossRun < m_nCross )
        {
            if ( m_isFirstEpoch[crossRun] == false )
            {
                m_isFirstEpoch[crossRun] = true;
                // Autoencoder: RBM pretraining + finetuning
                m_nn[crossRun]->rbmPretraining ( input, target, nSamples, m_nDomain*m_nClass, m_nrLayerAuto+1, crossRun, m_tuningEpochsRBMFinetuning, m_learnrateAutoencoderFinetuning );
            }
            else
            {
                // one gradient descent step (one epoch)
                if ( m_epoch < m_tuningEpochsOutputNet )
                    //if(m_epoch > m_tuningEpochsOutputNet)
                    //if(m_epoch%10 == 0)
                {
                    bool* updateLayer = new bool[m_nrLayerAuto + m_nrLayerNetHidden + 3];
                    for ( int i=0;i<m_nrLayerAuto;i++ )
                        updateLayer[i] = false;  // sigmoid
                    updateLayer[m_nrLayerAuto] = false;  // linear
                    for ( int i=0;i<m_nrLayerNetHidden;i++ )
                        updateLayer[m_nrLayerAuto+1+i] = true;  // tanh
                    updateLayer[m_nrLayerAuto+m_nrLayerNetHidden+1] = true;  // tanh

                    cout<<"[OutNet]";
                    m_nn[crossRun]->trainOneEpoch ( updateLayer );
                    delete[] updateLayer;
                }
                else
                {
                    /*bool* updateLayer = new bool[m_nrLayerAuto + m_nrLayerNetHidden + 3];
                    for(int i=0;i<m_nrLayerAuto;i++)
                        updateLayer[i] = true;  // sigmoid
                    updateLayer[m_nrLayerAuto] = true;  // linear
                    for(int i=0;i<m_nrLayerNetHidden;i++)
                        updateLayer[m_nrLayerAuto+1+i] = false;  // tanh
                    updateLayer[m_nrLayerAuto+m_nrLayerNetHidden+1] = false;  // tanh

                    cout<<"[In-Net]";
                    m_nn[crossRun]->trainOneEpoch(updateLayer);

                    delete[] updateLayer;
                    */
                    m_nn[crossRun]->trainOneEpoch ( 0 );
                }

                if ( crossRun == m_nCross - 1 )
                    m_nn[crossRun]->printLearnrate();
            }
        }
        else
        {
            cout<<endl<<"Tune: Training of full trainset "<<endl;

            if ( m_isFirstEpoch[crossRun] == false )
            {
                m_isFirstEpoch[crossRun] = true;
                // Autoencoder: RBM pretraining + finetuning
                m_nn[crossRun]->rbmPretraining ( input, target, nSamples, m_nDomain*m_nClass, m_nrLayerAuto+1, crossRun, m_tuningEpochsRBMFinetuning, m_learnrateAutoencoderFinetuning );
            }

            // retraining with fix number of epochs
            m_nn[crossRun]->setNormalTrainStopping ( false );
            int maxEpochs = m_epochParamBest[0];
            if ( maxEpochs == 0 )
                maxEpochs = 1;  // train at least one epoch
            cout<<"Best #epochs (on cross validation): "<<maxEpochs<<endl;
            m_nn[crossRun]->setMaxEpochs ( maxEpochs );

            // train the net
            int epochs = m_nn[crossRun]->trainNN();
            cout<<endl;
        }
    }
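For a cross-validation run, the listing distinguishes three cases: the first call runs the RBM pretraining, later calls train only the output net while `m_epoch < m_tuningEpochsOutputNet`, and after that all layers are trained. The sketch below restates that decision and the frozen-layer mask in isolation; `Phase`, `choosePhase` and `outputNetUpdateMask` are hypothetical names, and the retraining branch (`crossRun == m_nCross`) is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Phase { Pretrain, OutputNetOnly, AllLayers };

// Decide what one modelUpdate() call does for a cross-validation run.
// pretrainingDone corresponds to m_isFirstEpoch[crossRun] being true.
Phase choosePhase ( bool pretrainingDone, int epoch, int tuningEpochsOutputNet )
{
    if ( !pretrainingDone )
        return Phase::Pretrain;
    if ( epoch < tuningEpochsOutputNet )
        return Phase::OutputNetOnly;
    return Phase::AllLayers;
}

// Mask for the OutputNetOnly phase: true = layer is updated, false = frozen.
// The autoencoder layers and the linear layer stay frozen.
std::vector<bool> outputNetUpdateMask ( int nrLayerAuto, int nrLayerNetHidden )
{
    assert ( nrLayerAuto >= 0 && nrLayerNetHidden >= 0 );
    const std::size_t total = static_cast<std::size_t> ( nrLayerAuto + nrLayerNetHidden + 2 );
    std::vector<bool> mask ( total, true );
    for ( int i = 0; i <= nrLayerAuto; i++ )
        mask[static_cast<std::size_t> ( i )] = false;
    return mask;
}
```

The mask here has exactly as many entries as the listing assigns (`m_nrLayerAuto + m_nrLayerNetHidden + 2`); the listing allocates one more element than it sets.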

void NeuralNetworkRBMauto::predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) [virtual]

Prediction for outside use, predicts outputs based on raw input values

Parameters:

- rawInputs: The input feature, without normalization (raw)
- outputs: The output value (prediction of target)
- nSamples: The input size (number of rows)
- crossRun: Number of cross validation run (in training)

Implements StandardAlgorithm.

Definition at line 200 of file NeuralNetworkRBMauto.cpp.

    {
        // predict all samples
        for ( uint i=0;i<nSamples;i++ )  // uint index matches the type of nSamples
            m_nn[crossRun]->predictSingleInput ( rawInputs + i*m_nFeatures, outputs + i*m_nClass*m_nDomain );
    }
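The loop treats both buffers as row-major matrices: sample `i` starts at `rawInputs + i*m_nFeatures` and its prediction is written at `outputs + i*m_nClass*m_nDomain`. A minimal sketch of that indexing with a pluggable per-sample predictor follows; `predictAll` and `PredictOne` are hypothetical names, and `REAL` is assumed to be `float` here because its definition is not shown on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>

typedef float REAL;  // assumption: the real typedef is not shown on this page

// Hypothetical per-sample predictor: reads one input row, writes one output row.
typedef std::function<void ( const REAL* in, REAL* out )> PredictOne;

// Apply predictOne to every row of a row-major input matrix.
void predictAll ( const REAL* rawInputs, REAL* outputs, std::size_t nSamples,
                  std::size_t nFeatures, std::size_t nOutputs,
                  const PredictOne& predictOne )
{
    assert ( nSamples == 0 || ( rawInputs && outputs ) );
    for ( std::size_t i = 0; i < nSamples; i++ )
        predictOne ( rawInputs + i * nFeatures,  // start of input row i
                     outputs + i * nOutputs );   // start of output row i
}
```

In the class above, `nOutputs` would correspond to `m_nClass*m_nDomain` and the predictor to `predictSingleInput` of the network selected by `crossRun`.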

void NeuralNetworkRBMauto::readSpecificMaps () [virtual]

Read the algorithm-specific values from the description file

Implements StandardAlgorithm.

Definition at line 66 of file NeuralNetworkRBMauto.cpp.

    {
        cout<<"Read specific maps"<<endl;

        // read dsc vars
        m_nrLayerAuto = m_intMap["nrLayerAuto"];
        m_nrLayerNetHidden = m_intMap["nrLayerNetHidden"];

        m_batchSize = m_intMap["batchSize"];
        m_tuningEpochsRBMFinetuning = m_intMap["tuningEpochsRBMFinetuning"];
        m_tuningEpochsOutputNet = m_intMap["tuningEpochsOutputNet"];
        m_offsetOutputs = m_doubleMap["offsetOutputs"];
        m_scaleOutputs = m_doubleMap["scaleOutputs"];
        m_initWeightFactor = m_doubleMap["initWeightFactor"];
        m_learnrate = m_doubleMap["learnrate"];
        m_learnrateAutoencoderFinetuning = m_doubleMap["learnrateAutoencoderFinetuning"];
        m_learnrateMinimum = m_doubleMap["learnrateMinimum"];
        m_learnrateSubtractionValueAfterEverySample = m_doubleMap["learnrateSubtractionValueAfterEverySample"];
        m_learnrateSubtractionValueAfterEveryEpoch = m_doubleMap["learnrateSubtractionValueAfterEveryEpoch"];
        m_momentum = m_doubleMap["momentum"];
        m_weightDecay = m_doubleMap["weightDecay"];
        m_minUpdateErrorBound = m_doubleMap["minUpdateErrorBound"];
        m_etaPosRPROP = m_doubleMap["etaPosRPROP"];
        m_etaNegRPROP = m_doubleMap["etaNegRPROP"];
        m_minUpdateRPROP = m_doubleMap["minUpdateRPROP"];
        m_maxUpdateRPROP = m_doubleMap["maxUpdateRPROP"];
        m_enableL1Regularization = m_boolMap["enableL1Regularization"];
        m_enableErrorFunctionMAE = m_boolMap["enableErrorFunctionMAE"];
        m_enableRPROP = m_boolMap["enableRPROP"];
        m_useBLASforTraining = m_boolMap["useBLASforTraining"];
        m_neuronsPerLayerAuto = m_stringMap["neuronsPerLayerAuto"];
        m_neuronsPerLayerNetHidden = m_stringMap["neuronsPerLayerNetHidden"];
    }
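The types of `m_intMap`, `m_doubleMap`, `m_boolMap` and `m_stringMap` are not shown on this page. If they are `std::map` instances keyed by `std::string` (an assumption), then `operator[]` inserts a value-initialized entry for a missing key, so a description file that lacks, say, `nrLayerAuto` would silently yield 0. A minimal sketch of a stricter lookup, with `requireKey` as a hypothetical helper, could look like this:

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

// Return the value stored under key, or throw if the key is missing.
// Unlike operator[], this never inserts a default entry into the map.
template <typename T>
const T& requireKey ( const std::map<std::string, T>& m, const std::string& key )
{
    assert ( !key.empty() );
    typename std::map<std::string, T>::const_iterator it = m.find ( key );
    if ( it == m.end() )
        throw std::runtime_error ( "missing description key: " + key );
    return it->second;
}
```

A call such as `requireKey ( intMap, "batchSize" )` then either returns the configured value or reports the missing key by name, and the map itself is left unchanged.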

void NeuralNetworkRBMauto::saveWeights (int cross) [virtual]

Save the weights and all other parameters needed to load the complete prediction model

Implements StandardAlgorithm.

Definition at line 300 of file NeuralNetworkRBMauto.cpp.

    {
        char buf[1024];
        sprintf ( buf,"%02d",cross );
        string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf;
        if ( m_inRetraining )
            cout<<"Save:"<<name<<endl;
        int n = m_nn[cross]->getNrWeights();
        REAL* w = m_nn[cross]->getWeightPtr();

        // binary mode: the file holds raw int, REAL and double values
        fstream f ( name.c_str(), ios::out | ios::binary );
        f.write ( ( char* ) &m_nTrain, sizeof ( int ) );
        f.write ( ( char* ) &m_nFeatures, sizeof ( int ) );
        f.write ( ( char* ) &m_nClass, sizeof ( int ) );
        f.write ( ( char* ) &m_nDomain, sizeof ( int ) );
        f.write ( ( char* ) &n, sizeof ( int ) );
        f.write ( ( char* ) w, sizeof ( REAL ) *n );
        f.write ( ( char* ) &m_maxSwing, sizeof ( double ) );
        f.close();
    }
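saveWeights and loadWeights agree on a fixed field order: four `int` header values, the weight count, the weights themselves, and `m_maxSwing` as a `double`. The sketch below round-trips that layout through generic streams and checks the stream state after reading; `WeightFile`, `writeWeights` and `readWeights` are hypothetical names, `REAL` is assumed to be `float`, and the format remains tied to the byte order and type sizes of the machine that wrote it.

```cpp
#include <cassert>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

typedef float REAL;  // assumption: the real typedef is not shown on this page

struct WeightFile
{
    int nTrain = 0, nFeatures = 0, nClass = 0, nDomain = 0;
    std::vector<REAL> weights;
    double maxSwing = 0.0;
};

// Write the fields in the order used by saveWeights().
bool writeWeights ( std::ostream& out, const WeightFile& wf )
{
    const int n = static_cast<int> ( wf.weights.size() );
    out.write ( reinterpret_cast<const char*> ( &wf.nTrain ), sizeof ( int ) );
    out.write ( reinterpret_cast<const char*> ( &wf.nFeatures ), sizeof ( int ) );
    out.write ( reinterpret_cast<const char*> ( &wf.nClass ), sizeof ( int ) );
    out.write ( reinterpret_cast<const char*> ( &wf.nDomain ), sizeof ( int ) );
    out.write ( reinterpret_cast<const char*> ( &n ), sizeof ( int ) );
    out.write ( reinterpret_cast<const char*> ( wf.weights.data() ), sizeof ( REAL ) * n );
    out.write ( reinterpret_cast<const char*> ( &wf.maxSwing ), sizeof ( double ) );
    return out.good();
}

// Read the same layout back; returns false on a short or invalid stream.
bool readWeights ( std::istream& in, WeightFile& wf )
{
    int n = 0;
    in.read ( reinterpret_cast<char*> ( &wf.nTrain ), sizeof ( int ) );
    in.read ( reinterpret_cast<char*> ( &wf.nFeatures ), sizeof ( int ) );
    in.read ( reinterpret_cast<char*> ( &wf.nClass ), sizeof ( int ) );
    in.read ( reinterpret_cast<char*> ( &wf.nDomain ), sizeof ( int ) );
    in.read ( reinterpret_cast<char*> ( &n ), sizeof ( int ) );
    if ( !in || n < 0 )
        return false;  // header missing or weight count corrupt
    wf.weights.resize ( n );
    in.read ( reinterpret_cast<char*> ( wf.weights.data() ), sizeof ( REAL ) * n );
    in.read ( reinterpret_cast<char*> ( &wf.maxSwing ), sizeof ( double ) );
    return !in.fail();
}
```

The functions take `std::ostream` and `std::istream` so that they work with a file stream opened in binary mode as well as with an in-memory `std::stringstream`, which is convenient for testing the round trip.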

string NeuralNetworkRBMauto::templateGenerator (int id, string preEffect, int nameID, bool blendStop) [static]

Generates a template of the description file

Returns:

- The template string

Definition at line 435 of file NeuralNetworkRBMauto.cpp.

    {
        stringstream s;
        s<<"ALGORITHM=NeuralNetworkRBMauto"<<endl;
        s<<"ID="<<id<<endl;
        s<<"TRAIN_ON_FULLPREDICTOR="<<preEffect<<endl;
        s<<"DISABLE=0"<<endl;
        s<<endl;
        s<<"[int]"<<endl;
        s<<"nrLayer=3"<<endl;
        s<<"batchSize=1"<<endl;
        s<<"minTuninigEpochs=30"<<endl;
        s<<"maxTuninigEpochs=100"<<endl;
        s<<endl;
        s<<"[double]"<<endl;
        s<<"initMaxSwing=1.0"<<endl;
        s<<endl;
        s<<"offsetOutputs=0.0"<<endl;
        s<<"scaleOutputs=1.2"<<endl;
        s<<endl;
        s<<"etaPosRPROP=1.005"<<endl;
        s<<"etaNegRPROP=0.99"<<endl;
        s<<"minUpdateRPROP=1e-8"<<endl;
        s<<"maxUpdateRPROP=1e-2"<<endl;
        s<<endl;
        s<<"initWeightFactor=1.0"<<endl;
        s<<"learnrate=1e-3"<<endl;
        s<<"learnrateMinimum=1e-5"<<endl;
        s<<"learnrateSubtractionValueAfterEverySample=0.0"<<endl;
        s<<"learnrateSubtractionValueAfterEveryEpoch=0.0"<<endl;
        s<<"momentum=0.0"<<endl;
        s<<"weightDecay=0.0"<<endl;
        s<<"minUpdateErrorBound=1e-6"<<endl;
        s<<endl;
        s<<"[bool]"<<endl;
        s<<"enableErrorFunctionMAE=0"<<endl;
        s<<"enableL1Regularization=0"<<endl;
        s<<"enableClipping=1"<<endl;
        s<<"enableTuneSwing=0"<<endl;
        s<<"useBLASforTraining=1"<<endl;
        s<<"enableRPROP=0"<<endl;
        s<<endl;
        s<<"minimzeProbe="<< ( !blendStop ) <<endl;
        s<<"minimzeProbeClassificationError=0"<<endl;
        s<<"minimzeBlend="<<blendStop<<endl;
        s<<"minimzeBlendClassificationError=0"<<endl;
        s<<endl;
        s<<"[string]"<<endl;
        s<<"neuronsPerLayer=30,20,40,30,100,-1"<<endl;
        s<<"weightFile=NeuralNetworkRBMauto_"<<nameID<<"_weights.dat"<<endl;
        s<<"fullPrediction=NeuralNetworkRBMauto_"<<nameID<<".dat"<<endl;

        return s.str();
    }
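The template stores the layer sizes as a comma-separated integer list (`30,20,40,30,100,-1`), and modelInit and loadWeights parse lists of this kind with `Data::splitStringToIntegerList`, whose implementation is not shown on this page. The following is a minimal sketch of such parsing, not the library routine itself; `splitToInts` is a hypothetical name and it returns a `std::vector` rather than a raw array.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Split text at delimiter and convert every non-empty token to int.
// std::stoi throws std::invalid_argument for tokens that are not numbers.
std::vector<int> splitToInts ( const std::string& text, char delimiter )
{
    assert ( delimiter != '\0' );
    std::vector<int> values;
    std::stringstream stream ( text );
    std::string token;
    while ( std::getline ( stream, token, delimiter ) )
    {
        if ( !token.empty() )
            values.push_back ( std::stoi ( token ) );
    }
    return values;
}
```

Returning a vector means the caller does not have to `delete[]` the result, which the listings above do for the arrays returned by the library function.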

The documentation for this class was generated from the following files:

- NeuralNetworkRBMauto.h
- NeuralNetworkRBMauto.cpp