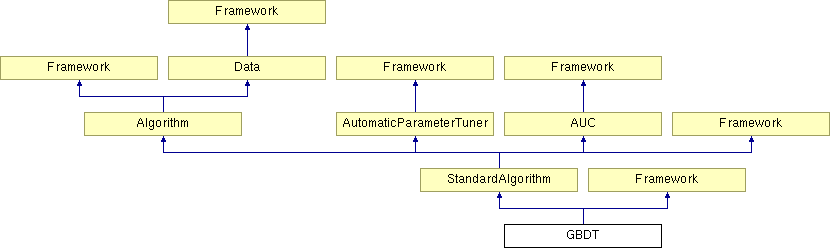

GBDT Class Reference

#include <GBDT.h>

Public Member Functions | |

| GBDT () | |

| ~GBDT () | |

| virtual void | modelInit () |

| virtual void | modelUpdate (REAL *input, REAL *target, uint nSamples, uint crossRun) |

| virtual void | predictAllOutputs (REAL *rawInputs, REAL *outputs, uint nSamples, uint crossRun) |

| virtual void | readSpecificMaps () |

| virtual void | saveWeights (int cross) |

| virtual void | loadWeights (int cross) |

| virtual void | loadMetaWeights (int cross) |

Static Public Member Functions | |

| static string | templateGenerator (int id, string preEffect, int nameID, bool blendStop) |

Private Member Functions | |

| void | trainSingleTree (node *n, deque< nodeReduced > &largestNodes, REAL *input, REAL *inputTmp, REAL *inputTargetsSort, REAL *singleTarget, bool *usedFeatures, int nSamples, int *sortIndex, int *radixTmp0, REAL *radixTmp1) |

| void | cleanTree (node *n) |

| REAL | predictSingleTree (node *n, REAL *input) |

| void | saveTreeRecursive (node *n, fstream &f) |

| void | loadTreeRecursive (node *n, fstream &f) |

Private Attributes | |

| node *** | m_trees |

| int | m_epoch |

| REAL ** | m_globalMean |

| REAL ** | m_treeTargets |

| double ** | m_validationPredictions |

| int | m_featureSubspaceSize |

| int | m_maxTreeLeafes |

| bool | m_useOptSplitPoint |

| bool | m_calculateGlobalMean |

| double | m_lRate |

Detailed Description

Gradient boosted decision tree An extention to: Jerome H. Friedman 1999 "Stochastic Gradient Boosting"The tree is build in a greedy fashion, to minimize the RMSE of the prediction Greedy means that always the largest node gets splitted

Splits can be performed optimally (in terms of RMSE) or random value (uniform sample indices) Subspace size can be selected (number of features considered in a split)

Definition at line 42 of file GBDT.h.

Constructor & Destructor Documentation

| GBDT::GBDT | ( | ) |

Constructor

Definition at line 20 of file GBDT.cpp.

00021 { 00022 cout<<"GBDT"<<endl; 00023 // init member vars 00024 m_featureSubspaceSize = 0; 00025 m_maxTreeLeafes = 0; 00026 m_useOptSplitPoint = true; 00027 m_lRate = 0.0; 00028 m_globalMean = 0; 00029 m_calculateGlobalMean = true; 00030 m_treeTargets = 0; 00031 }

| GBDT::~GBDT | ( | ) |

Member Function Documentation

| void GBDT::cleanTree | ( | node * | n | ) | [private] |

Cleans the tree This should be done after training to remove unneccessary information

- Parameters:

-

n pointer too root node

Definition at line 319 of file GBDT.cpp.

00320 { 00321 if ( n->m_trainSamples ) 00322 { 00323 delete[] n->m_trainSamples; 00324 n->m_trainSamples = 0; 00325 } 00326 n->m_nSamples = 0; 00327 00328 if ( n->m_toSmallerEqual ) 00329 cleanTree ( n->m_toSmallerEqual ); 00330 if ( n->m_toLarger ) 00331 cleanTree ( n->m_toLarger ); 00332 }

| void GBDT::loadMetaWeights | ( | int | cross | ) | [virtual] |

nothing to do in a gradient-descent based algorithm

Implements StandardAlgorithm.

Definition at line 730 of file GBDT.cpp.

| void GBDT::loadTreeRecursive | ( | node * | n, | |

| fstream & | f | |||

| ) | [private] |

Load the tree and all nodes

- Parameters:

-

n current node f referece to the fstream object

Definition at line 741 of file GBDT.cpp.

00742 { 00743 f.read ( ( char* ) n, sizeof ( node ) ); 00744 00745 if ( n->m_toSmallerEqual ) 00746 { 00747 n->m_toSmallerEqual = new node; 00748 loadTreeRecursive ( n->m_toSmallerEqual, f ); 00749 } 00750 if ( n->m_toLarger ) 00751 { 00752 n->m_toLarger = new node; 00753 loadTreeRecursive ( n->m_toLarger, f ); 00754 } 00755 }

| void GBDT::loadWeights | ( | int | cross | ) | [virtual] |

Load the weights and all other parameters and make the model ready to predict

Implements StandardAlgorithm.

Definition at line 693 of file GBDT.cpp.

00694 { 00695 char buf[1024]; 00696 sprintf ( buf,"%02d",cross ); 00697 string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf; 00698 cout<<"Load:"<<name<<endl; 00699 fstream f ( name.c_str(), ios::in ); 00700 if ( f.is_open() == false ) 00701 assert ( false ); 00702 00703 // load learnrate 00704 f.read ( ( char* ) &m_lRate, sizeof ( double ) ); 00705 00706 // load number of epochs 00707 f.read ( ( char* ) &m_epoch, sizeof ( int ) ); 00708 00709 // load global means 00710 m_globalMean = new REAL*[m_nCross+1]; 00711 m_globalMean[cross] = new REAL[m_nClass*m_nDomain]; 00712 f.read ( ( char* ) m_globalMean[cross], sizeof ( REAL ) *m_nClass*m_nDomain ); 00713 00714 // allocate and load the trees 00715 m_trees = new node**[m_nCross+1]; 00716 m_trees[cross] = new node*[m_nClass*m_nDomain]; 00717 for ( uint i=0;i<m_nClass*m_nDomain;i++ ) 00718 { 00719 m_trees[cross][i] = new node[m_epoch+1]; 00720 for ( uint j=0;j<m_epoch+1;j++ ) 00721 loadTreeRecursive ( & ( m_trees[cross][i][j] ), f ); 00722 } 00723 00724 f.close(); 00725 }

| void GBDT::modelInit | ( | ) | [virtual] |

Init the GBDT Model

Implements StandardAlgorithm.

Definition at line 58 of file GBDT.cpp.

00059 { 00060 // add tunable parameters 00061 m_epoch = 0; 00062 paramEpochValues.push_back ( &m_epoch ); 00063 paramEpochNames.push_back ( "epoch" ); 00064 00065 if ( m_featureSubspaceSize > m_nFeatures ) 00066 m_featureSubspaceSize = m_nFeatures; 00067 00068 // global mean per target and cross run 00069 m_globalMean = new REAL*[m_nCross+1]; 00070 for ( int i=0;i<m_nCross+1;i++ ) 00071 { 00072 m_globalMean[i] = new REAL[m_nClass*m_nDomain]; 00073 for ( int j=0;j<m_nClass*m_nDomain;j++ ) 00074 m_globalMean[i][j] = 0.0; 00075 } 00076 00077 // used during training to speedup 00078 m_treeTargets = new REAL*[m_nCross+1]; 00079 for ( int i=0;i<m_nCross;i++ ) 00080 m_treeTargets[i] = new REAL[m_trainSize[i]*m_nClass*m_nDomain]; 00081 m_treeTargets[m_nCross] = new REAL[m_trainSize[m_nCross]*m_nClass*m_nDomain]; 00082 00083 // allocate the trees 00084 m_trees = new node**[m_nCross+1]; 00085 for ( int i=0;i<m_nCross+1;i++ ) 00086 { 00087 m_trees[i] = new node*[m_nClass*m_nDomain]; 00088 for ( uint j=0;j<m_nClass*m_nDomain;j++ ) 00089 { 00090 m_trees[i][j] = new node[m_maxTuninigEpochs]; 00091 for ( uint k=0;k<m_maxTuninigEpochs;k++ ) 00092 { 00093 m_trees[i][j][k].m_featureNr = -1; 00094 m_trees[i][j][k].m_value = 1e10; 00095 m_trees[i][j][k].m_toSmallerEqual = 0; 00096 m_trees[i][j][k].m_toLarger = 0; 00097 m_trees[i][j][k].m_trainSamples = 0; 00098 m_trees[i][j][k].m_nSamples = -1; 00099 } 00100 } 00101 } 00102 00103 // allocate mem for predictions of the probe set 00104 if(m_validationType == "ValidationSet") 00105 { 00106 m_validationPredictions = new double*[1]; 00107 m_validationPredictions[0] = new double[m_nClass*m_nDomain*m_validSize]; 00108 for(int i=0;i<m_nClass*m_nDomain*m_validSize;i++) 00109 m_validationPredictions[0][i] = 0.0; 00110 } 00111 else 00112 { 00113 m_validationPredictions = new double*[m_nCross]; 00114 for(int i=0;i<m_nCross;i++) 00115 { 00116 m_validationPredictions[i] = new double[m_nClass*m_nDomain*m_probeSize[i]]; 00117 for(int j=0;j<m_nClass*m_nDomain*m_probeSize[i];j++) 00118 m_validationPredictions[i][j] = 0.0; 00119 } 00120 } 00121 // fix randomness 00122 srand ( Framework::getRandomSeed() ); 00123 }

| void GBDT::modelUpdate | ( | REAL * | input, | |

| REAL * | target, | |||

| uint | nSamples, | |||

| uint | crossRun | |||

| ) | [virtual] |

Make a model update, set the new cross validation set or set the whole training set for retraining

- Parameters:

-

input Pointer to input (can be cross validation set, or whole training set) (rows x nFeatures) target The targets (can be cross validation targets) nSamples The sample size (rows) in input crossRun The cross validation run (for training)

Implements StandardAlgorithm.

Definition at line 204 of file GBDT.cpp.

00205 { 00206 REAL* singleTarget = new REAL[nSamples]; 00207 REAL* inputTmp = new REAL[nSamples*m_featureSubspaceSize]; 00208 REAL* inputTargetsSort = new REAL[nSamples*m_featureSubspaceSize]; 00209 bool* usedFeatures = new bool[m_nFeatures]; 00210 int* sortIndex = new int[nSamples]; 00211 int* radixTmp0 = new int[nSamples]; 00212 REAL* radixTmp1 = new REAL[nSamples]; 00213 00214 if ( crossRun == m_nCross && m_validationType != "ValidationSet") 00215 m_epoch = 0; 00216 00217 uint loopEnd = 1; 00218 if(crossRun==m_nCross && m_validationType != "ValidationSet") 00219 loopEnd = m_epochParamBest[0]+1; 00220 00221 for ( uint boostLoop=0;boostLoop < loopEnd;boostLoop++ ) 00222 { 00223 time_t t0 = time ( 0 ); 00224 if ( crossRun == m_nCross && m_validationType != "ValidationSet" ) 00225 cout<<"epoch:"<<m_epoch<<" "<<flush; 00226 00227 // train the model here 00228 for ( uint i=0;i<m_nClass*m_nDomain;i++ ) // for each target 00229 { 00230 // calc the global mean 00231 if ( m_epoch == 0 ) 00232 { 00233 double sum = 0.0; 00234 if ( m_calculateGlobalMean ) 00235 { 00236 for ( uint j=0;j<nSamples;j++ ) 00237 sum += target[j*m_nClass*m_nDomain+i]; 00238 sum /= ( double ) nSamples; 00239 } 00240 m_globalMean[crossRun][i] = sum; 00241 cout<<"globalMean:"<<sum<<" "<<flush; 00242 00243 for ( uint j=0;j<nSamples;j++ ) 00244 m_treeTargets[crossRun][i*nSamples+j] = target[j*m_nClass*m_nDomain+i] - sum; 00245 } 00246 00247 for ( uint j=0;j<nSamples;j++ ) 00248 singleTarget[j] = m_treeTargets[crossRun][i*nSamples+j]; 00249 00250 deque<nodeReduced> largestNodes; 00251 for ( uint j=0;j<m_nFeatures;j++ ) 00252 usedFeatures[j] = false; 00253 00254 // root node has all examples in the training list 00255 m_trees[crossRun][i][m_epoch].m_trainSamples = new int[nSamples]; 00256 m_trees[crossRun][i][m_epoch].m_nSamples = nSamples; 00257 int* ptr = m_trees[crossRun][i][m_epoch].m_trainSamples; 00258 for ( uint j=0;j<nSamples;j++ ) 00259 ptr[j] = j; 00260 00261 nodeReduced firstNode; 00262 firstNode.m_node = & ( m_trees[crossRun][i][m_epoch] ); 00263 firstNode.m_size = nSamples; 00264 largestNodes.push_back ( firstNode ); 00265 push_heap ( largestNodes.begin(), largestNodes.end(), compareNodeReduced ); 00266 00267 // train the tree loop wise 00268 // call trainSingleTree recursive for the largest node 00269 for ( int j=0;j<m_maxTreeLeafes;j++ ) 00270 { 00271 node* largestNode = largestNodes[0].m_node; 00272 trainSingleTree ( largestNode, largestNodes, input, inputTmp, inputTargetsSort, singleTarget, usedFeatures, nSamples, sortIndex, radixTmp0, radixTmp1 ); 00273 } 00274 00275 //cout<<"["<<(int)largestNodes.size()<<"|"<<flush; 00276 00277 // delete the train lists per node, they are not necessary for prediction 00278 cleanTree ( & ( m_trees[crossRun][i][m_epoch] ) ); 00279 00280 // update the targets/residuals and calc train error 00281 double trainRMSE = 0.0; 00282 //fstream f("tmp/a0.txt",ios::out); 00283 for ( uint j=0;j<nSamples;j++ ) 00284 { 00285 REAL p = predictSingleTree ( & ( m_trees[crossRun][i][m_epoch] ), input+j*m_nFeatures ); 00286 //f<<p<<endl; 00287 m_treeTargets[crossRun][i*nSamples+j] -= m_lRate * p; 00288 double err = m_treeTargets[crossRun][i*nSamples+j]; 00289 trainRMSE += err * err; 00290 } 00291 00292 if ( crossRun == m_nCross ) 00293 cout<<"tRMSE["<<i<<"]:"<<sqrt ( trainRMSE/ ( double ) nSamples ) <<" "<<flush; 00294 //f.close(); 00295 } 00296 00297 if ( crossRun == m_nCross && boostLoop < m_epochParamBest[0] && m_validationType != "ValidationSet") 00298 { 00299 cout<<time ( 0 )-t0<<"[s]"<<endl; 00300 m_epoch++; 00301 } 00302 } 00303 delete[] usedFeatures; 00304 delete[] singleTarget; 00305 delete[] inputTmp; 00306 delete[] inputTargetsSort; 00307 delete[] sortIndex; 00308 delete[] radixTmp0; 00309 delete[] radixTmp1; 00310 00311 }

| void GBDT::predictAllOutputs | ( | REAL * | rawInputs, | |

| REAL * | outputs, | |||

| uint | nSamples, | |||

| uint | crossRun | |||

| ) | [virtual] |

Prediction for outside use, predicts outputs based on raw input values

- Parameters:

-

rawInputs The input feature, without normalization (raw) outputs The output value (prediction of target) nSamples The input size (number of rows) crossRun Number of cross validation run (in training)

Implements StandardAlgorithm.

Definition at line 133 of file GBDT.cpp.

00134 { 00135 bool mode = Framework::getFrameworkMode(); 00136 // predict all values in: REAL* outputs 00137 for ( uint i=0;i<nSamples;i++ ) 00138 { 00139 REAL* ptr = rawInputs + i * m_nFeatures; 00140 00141 // all REAL targets 00142 for ( uint j=0;j<m_nClass*m_nDomain;j++ ) 00143 { 00144 if((crossRun >= m_nCross && m_validationType != "ValidationSet") || mode) 00145 { 00146 double sum = m_globalMean[crossRun][j]; 00147 // for every boosting epoch : CORRECT, but slower 00148 for ( int k=0;k<m_epoch+1;k++ ) 00149 { 00150 REAL v = predictSingleTree ( & ( m_trees[crossRun][j][k] ), ptr ); 00151 sum += m_lRate * v; // this is gradient boosting 00152 } 00153 outputs[i*m_nClass*m_nDomain + j] = sum; 00154 } 00155 else 00156 { 00157 // prediction from previous trees 00158 double sum = m_validationPredictions[crossRun][i*m_nClass*m_nDomain + j]; 00159 double v = predictSingleTree ( & ( m_trees[crossRun][j][m_epoch] ), ptr ); 00160 sum += m_lRate * v; // this is gradient boosting 00161 m_validationPredictions[crossRun][i*m_nClass*m_nDomain + j] = sum; 00162 outputs[i*m_nClass*m_nDomain + j] = sum + (double)m_globalMean[crossRun][j]; 00163 } 00164 } 00165 } 00166 }

| REAL GBDT::predictSingleTree | ( | node * | n, | |

| REAL * | input | |||

| ) | [private] |

Make a prediction from a tree

- Parameters:

-

n The pointer to the root node input input feature

- Returns:

- prediction value

Definition at line 175 of file GBDT.cpp.

00176 { 00177 // here, on a leaf: predict the constant value 00178 if ( n->m_toSmallerEqual == 0 && n->m_toLarger == 0 ) 00179 return n->m_value; 00180 00181 int nr = n->m_featureNr; 00182 /*if(nr < 0 || nr >= m_nFeatures) 00183 { 00184 cout<<endl<<"Feature nr: "<<nr<<" (max:"<<m_nFeatures<<")"<<endl; 00185 assert(false); 00186 }*/ 00187 REAL thresh = n->m_value; 00188 REAL feature = input[nr]; 00189 00190 if ( feature <= thresh ) 00191 return predictSingleTree ( n->m_toSmallerEqual, input ); 00192 return predictSingleTree ( n->m_toLarger, input ); 00193 }

| void GBDT::readSpecificMaps | ( | ) | [virtual] |

Read the Algorithm specific values from the description file

Implements StandardAlgorithm.

Definition at line 45 of file GBDT.cpp.

00046 { 00047 m_featureSubspaceSize = m_intMap["featureSubspaceSize"]; 00048 m_maxTreeLeafes = m_intMap["maxTreeLeafes"]; 00049 m_useOptSplitPoint = m_boolMap["useOptSplitPoint"]; 00050 m_calculateGlobalMean = m_boolMap["calculateGlobalMean"]; 00051 m_lRate = m_doubleMap["lRate"]; 00052 }

| void GBDT::saveTreeRecursive | ( | node * | n, | |

| fstream & | f | |||

| ) | [private] |

Save the tree and all nodes

- Parameters:

-

n current node f referece to the fstream object

Definition at line 680 of file GBDT.cpp.

00681 { 00682 f.write ( ( char* ) n, sizeof ( node ) ); 00683 if ( n->m_toSmallerEqual ) 00684 saveTreeRecursive ( n->m_toSmallerEqual, f ); 00685 if ( n->m_toLarger ) 00686 saveTreeRecursive ( n->m_toLarger, f ); 00687 }

| void GBDT::saveWeights | ( | int | cross | ) | [virtual] |

Save the weights and all other parameters for load the complete prediction model

Implements StandardAlgorithm.

Definition at line 648 of file GBDT.cpp.

00649 { 00650 char buf[1024]; 00651 sprintf ( buf,"%02d",cross ); 00652 string name = m_datasetPath + "/" + m_tempPath + "/" + m_weightFile + "." + buf; 00653 if ( m_inRetraining ) 00654 cout<<"Save:"<<name<<endl; 00655 fstream f ( name.c_str(), ios::out ); 00656 00657 // save learnrate 00658 f.write ( ( char* ) &m_lRate, sizeof ( double ) ); 00659 00660 // save number of epochs 00661 f.write ( ( char* ) &m_epoch, sizeof ( int ) ); 00662 00663 // save global means 00664 f.write ( ( char* ) m_globalMean[cross], sizeof ( REAL ) *m_nClass*m_nDomain ); 00665 00666 // save trees 00667 for ( int i=0;i<m_nClass*m_nDomain;i++ ) 00668 for ( int j=0;j<m_epoch+1;j++ ) 00669 saveTreeRecursive ( & ( m_trees[cross][i][j] ), f ); 00670 00671 f.close(); 00672 }

| string GBDT::templateGenerator | ( | int | id, | |

| string | preEffect, | |||

| int | nameID, | |||

| bool | blendStop | |||

| ) | [static] |

Generates a template of the description file

- Returns:

- The template string

Definition at line 762 of file GBDT.cpp.

00763 { 00764 stringstream s; 00765 s<<"ALGORITHM=GBDT"<<endl; 00766 s<<"ID="<<id<<endl; 00767 s<<"TRAIN_ON_FULLPREDICTOR="<<preEffect<<endl; 00768 s<<"DISABLE=0"<<endl; 00769 s<<endl; 00770 s<<"[int]"<<endl; 00771 s<<"minTuninigEpochs=20"<<endl; 00772 s<<"maxTuninigEpochs=100"<<endl; 00773 s<<"featureSubspaceSize=10"<<endl; 00774 s<<"maxTreeLeafs=100"<<endl; 00775 s<<endl; 00776 s<<"[double]"<<endl; 00777 s<<"initMaxSwing=1.0"<<endl; 00778 s<<"lRate=0.1"<<endl; 00779 s<<endl; 00780 s<<"[bool]"<<endl; 00781 s<<"calculateGlobalMean=1"<<endl; 00782 s<<"useOptSplitPoint=1"<<endl; 00783 s<<"enableClipping=0"<<endl; 00784 s<<"enableTuneSwing=0"<<endl; 00785 s<<endl; 00786 s<<"minimzeProbe="<< ( !blendStop ) <<endl; 00787 s<<"minimzeProbeClassificationError=0"<<endl; 00788 s<<"minimzeBlend="<<blendStop<<endl; 00789 s<<"minimzeBlendClassificationError=0"<<endl; 00790 s<<endl; 00791 s<<"[string]"<<endl; 00792 s<<"weightFile="<<"GBDT_"<<nameID<<"_weights.dat"<<endl; 00793 s<<"fullPrediction=GBDT_"<<nameID<<".dat"<<endl; 00794 00795 return s.str(); 00796 }

| void GBDT::trainSingleTree | ( | node * | n, | |

| deque< nodeReduced > & | largestNodes, | |||

| REAL * | input, | |||

| REAL * | inputTmp, | |||

| REAL * | inputTargetsSort, | |||

| REAL * | singleTarget, | |||

| bool * | usedFeatures, | |||

| int | nSamples, | |||

| int * | sortIndex, | |||

| int * | radixTmp0, | |||

| REAL * | radixTmp1 | |||

| ) | [private] |

Train a single tree with determine split criteria etc.

- Parameters:

-

n current node largestNodes reference to the largest nodes deque input input feature inputTmp input feature array (tmp usage) inputTargetsSort sorted targets (tmp usage) singleTarget one target usedFeatures feature mask, used for random feature subspace nSamples number of samples sortIndex randomized indices

Definition at line 347 of file GBDT.cpp.

00348 { 00349 // break criteria: tree size limit or too less training samples 00350 int nS = largestNodes.size(); 00351 if ( nS >= m_maxTreeLeafes || n->m_nSamples <= 1 ) 00352 return; 00353 00354 // delete the current node (is currently the largest element in the heap) 00355 if ( largestNodes.size() > 0 ) 00356 { 00357 //largestNodes.pop_front(); 00358 pop_heap ( largestNodes.begin(),largestNodes.end(),compareNodeReduced ); 00359 largestNodes.pop_back(); 00360 } 00361 00362 // the number of training samples in this node 00363 int nNodeSamples = n->m_nSamples; 00364 00365 // this tmp array is used to fast drawing a random subset 00366 int *randFeatureIDs = new int[m_featureSubspaceSize]; 00367 if ( m_featureSubspaceSize < m_nFeatures ) // select a random feature subset 00368 { 00369 for ( uint i=0;i<m_featureSubspaceSize;i++ ) 00370 { 00371 uint idx = rand() % m_nFeatures; 00372 while ( usedFeatures[idx] ) 00373 idx = rand() % m_nFeatures; 00374 randFeatureIDs[i] = idx; 00375 usedFeatures[idx] = true; 00376 } 00377 } 00378 else // take all features 00379 for ( uint i=0;i<m_featureSubspaceSize;i++ ) 00380 randFeatureIDs[i] = i; 00381 00382 // precalc sums and squared sums of targets 00383 double sumTarget = 0.0, sum2Target = 0.0; 00384 for ( uint j=0;j<nNodeSamples;j++ ) 00385 { 00386 REAL v = singleTarget[n->m_trainSamples[j]]; 00387 sumTarget += v; 00388 sum2Target += v*v; 00389 } 00390 00391 int bestFeature = -1, bestFeaturePos = -1; 00392 double bestFeatureRMSE = 1e10; 00393 REAL bestFeatureLow = 1e10, bestFeatureHi = 1e10; 00394 REAL optFeatureSplitValue = 1e10; 00395 00396 // search optimal split point in all tmp input features 00397 for ( int i=0;i<m_featureSubspaceSize;i++ ) 00398 { 00399 // search the optimal split value, which reduce the RMSE the most 00400 REAL optimalSplitValue = 0.0; 00401 double rmseBest = 1e10; 00402 REAL meanLowBest = 1e10, meanHiBest = 1e10; 00403 int bestPos = -1; 00404 double sumLow = 0.0, sum2Low = 0.0, sumHi = sumTarget, sum2Hi = sum2Target, cntLow = 0.0, cntHi = nNodeSamples; 00405 REAL* ptrInput = inputTmp + i * nNodeSamples; 00406 REAL* ptrTarget = inputTargetsSort + i * nNodeSamples; 00407 00408 // copy 00409 int nr = randFeatureIDs[i]; 00410 for ( int j=0;j<nNodeSamples;j++ ) 00411 ptrInput[j] = input[nr+n->m_trainSamples[j]*m_nFeatures]; 00412 00413 if ( m_useOptSplitPoint == false ) // random threshold value 00414 { 00415 for ( int j=0;j<nNodeSamples;j++ ) 00416 ptrTarget[j] = singleTarget[n->m_trainSamples[j]]; 00417 00418 REAL* ptrInput = inputTmp + i * nNodeSamples; 00419 bestPos = rand() % nNodeSamples; 00420 optimalSplitValue = ptrInput[bestPos]; 00421 sumLow = 0.0; 00422 sum2Low = 0.0; 00423 cntLow = 0.0; 00424 sumHi = 0.0; 00425 sum2Hi = 0.0; 00426 cntHi = 0.0; 00427 for ( int j=0;j<nNodeSamples;j++ ) 00428 { 00429 REAL v = ptrInput[j]; 00430 REAL t = ptrTarget[j]; 00431 if ( ptrInput[j] <= optimalSplitValue ) 00432 { 00433 sumLow += t; 00434 sum2Low += t*t; 00435 cntLow += 1.0; 00436 } 00437 else 00438 { 00439 sumHi += t; 00440 sum2Hi += t*t; 00441 cntHi += 1.0; 00442 } 00443 } 00444 rmseBest = ( sum2Low/cntLow - ( sumLow/cntLow ) * ( sumLow/cntLow ) ) *cntLow; 00445 rmseBest += ( sum2Hi/cntHi - ( sumHi/cntHi ) * ( sumHi/cntHi ) ) *cntHi; 00446 rmseBest = sqrt ( rmseBest/ ( cntLow+cntHi ) ); 00447 meanLowBest = sumLow/cntLow; 00448 meanHiBest = sumHi/cntHi; 00449 } 00450 else // search for the optimal threshold value, goal: best RMSE reduction split 00451 { 00452 // fast sort of the input dimension 00453 for ( int j=0;j<nNodeSamples;j++ ) 00454 sortIndex[j] = j; 00455 00456 //SORT_ASCEND_I ( ptrInput, sortIndex, nNodeSamples ); 00457 //for ( int j=0;j<nNodeSamples;j++ ) 00458 // ptrTarget[j] = singleTarget[n->m_trainSamples[sortIndex[j]]]; 00459 00460 /*vector<pair<REAL,int> > list(nNodeSamples); 00461 for(int j=0;j<nNodeSamples;j++) 00462 { 00463 list[j].first = ptrInput[j]; 00464 list[j].second = sortIndex[j]; 00465 } 00466 sort(list.begin(),list.end()); 00467 for(int j=0;j<nNodeSamples;j++) 00468 { 00469 ptrInput[j] = list[j].first; 00470 sortIndex[j] = list[j].second; 00471 } 00472 for ( int j=0;j<nNodeSamples;j++ ) 00473 ptrTarget[j] = singleTarget[n->m_trainSamples[sortIndex[j]]]; 00474 */ 00475 00476 SORT_ASCEND_RADIX_I ( ptrInput, sizeof(REAL), sortIndex, radixTmp0, nNodeSamples); 00477 for(int j=0;j<nNodeSamples;j++) 00478 radixTmp1[j] = ptrInput[j]; 00479 for(int j=0;j<nNodeSamples;j++) 00480 { 00481 int idx = sortIndex[j]; 00482 ptrInput[j] = radixTmp1[idx]; 00483 ptrTarget[j] = singleTarget[n->m_trainSamples[idx]]; 00484 } 00485 00486 int j = 0; 00487 while ( j < nNodeSamples-1 ) 00488 { 00489 REAL t = ptrTarget[j]; 00490 sumLow += t; 00491 sum2Low += t*t; 00492 sumHi -= t; 00493 sum2Hi -= t*t; 00494 cntLow += 1.0; 00495 cntHi -= 1.0; 00496 00497 REAL v0 = ptrInput[j], v1 = 1e10; 00498 if ( j < nNodeSamples -1 ) 00499 v1 = ptrInput[j+1]; 00500 if ( v0 == v1 ) // skip equal successors 00501 { 00502 j++; 00503 continue; 00504 } 00505 00506 double rmse = ( sum2Low/cntLow - ( sumLow/cntLow ) * ( sumLow/cntLow ) ) *cntLow; 00507 rmse += ( sum2Hi/cntHi - ( sumHi/cntHi ) * ( sumHi/cntHi ) ) *cntHi; 00508 rmse = sqrt ( rmse/ ( cntLow+cntHi ) ); 00509 00510 if ( rmse < rmseBest ) 00511 { 00512 optimalSplitValue = v0; 00513 rmseBest = rmse; 00514 bestPos = j+1; 00515 meanLowBest = sumLow/cntLow; 00516 meanHiBest = sumHi/cntHi; 00517 } 00518 00519 j++; 00520 } 00521 } 00522 00523 if ( rmseBest < bestFeatureRMSE ) 00524 { 00525 bestFeature = i; 00526 bestFeaturePos = bestPos; 00527 bestFeatureRMSE = rmseBest; 00528 optFeatureSplitValue = optimalSplitValue; 00529 bestFeatureLow = meanLowBest; 00530 bestFeatureHi = meanHiBest; 00531 } 00532 } 00533 00534 // unmark the selected inputs 00535 for ( uint i=0;i<m_nFeatures;i++ ) 00536 usedFeatures[i] = false; 00537 00538 n->m_featureNr = randFeatureIDs[bestFeature]; 00539 n->m_value = optFeatureSplitValue; 00540 00541 delete[] randFeatureIDs; 00542 00543 if ( n->m_featureNr < 0 || n->m_featureNr >= m_nFeatures ) 00544 { 00545 cout<<"f="<<n->m_featureNr<<endl; 00546 assert ( false ); 00547 } 00548 00549 // count the samples of the low node 00550 int cnt = 0; 00551 for ( int i=0;i<nNodeSamples;i++ ) 00552 { 00553 int nr = n->m_featureNr; 00554 if ( input[nr + n->m_trainSamples[i]*m_nFeatures] <= optFeatureSplitValue ) 00555 cnt++; 00556 } 00557 00558 int* lowList = new int[cnt]; 00559 int* hiList = new int[nNodeSamples-cnt]; 00560 if ( cnt == 0 ) 00561 lowList = 0; 00562 if ( nNodeSamples-cnt == 0 ) 00563 hiList = 0; 00564 00565 int lowCnt = 0, hiCnt = 0; 00566 double lowMean = 0.0, hiMean = 0.0; 00567 for ( int i=0;i<nNodeSamples;i++ ) 00568 { 00569 int nr = n->m_featureNr; 00570 if ( input[nr + n->m_trainSamples[i]*m_nFeatures] <= optFeatureSplitValue ) 00571 { 00572 lowList[lowCnt] = n->m_trainSamples[i]; 00573 lowMean += singleTarget[n->m_trainSamples[i]]; 00574 lowCnt++; 00575 } 00576 else 00577 { 00578 hiList[hiCnt] = n->m_trainSamples[i]; 00579 hiMean += singleTarget[n->m_trainSamples[i]]; 00580 hiCnt++; 00581 } 00582 } 00583 lowMean /= lowCnt; 00584 hiMean /= hiCnt; 00585 00586 if ( hiCnt+lowCnt != nNodeSamples || lowCnt != cnt ) 00587 assert ( false ); 00588 00589 //cout<<" #low:"<<lowCnt<<" #hi:"<<hiCnt<<" lowMean:"<<lowMean<<" highMean:"<<hiMean<<endl; 00590 00591 // break, if too less samples 00592 if ( lowCnt < 1 || hiCnt < 1 ) 00593 { 00594 n->m_featureNr = -1; 00595 n->m_value = lowCnt < 1 ? hiMean : lowMean; 00596 n->m_toSmallerEqual = 0; 00597 n->m_toLarger = 0; 00598 if ( n->m_trainSamples ) 00599 delete[] n->m_trainSamples; 00600 n->m_trainSamples = 0; 00601 n->m_nSamples = 0; 00602 00603 nodeReduced currentNode; 00604 currentNode.m_node = n; 00605 currentNode.m_size = 0; 00606 largestNodes.push_back ( currentNode ); 00607 push_heap ( largestNodes.begin(), largestNodes.end(), compareNodeReduced ); 00608 00609 return; 00610 } 00611 00612 // prepare first new node 00613 n->m_toSmallerEqual = new node; 00614 n->m_toSmallerEqual->m_featureNr = -1; 00615 n->m_toSmallerEqual->m_value = lowMean; 00616 n->m_toSmallerEqual->m_toSmallerEqual = 0; 00617 n->m_toSmallerEqual->m_toLarger = 0; 00618 n->m_toSmallerEqual->m_trainSamples = lowList; 00619 n->m_toSmallerEqual->m_nSamples = lowCnt; 00620 00621 // prepare second new node 00622 n->m_toLarger = new node; 00623 n->m_toLarger->m_featureNr = -1; 00624 n->m_toLarger->m_value = hiMean; 00625 n->m_toLarger->m_toSmallerEqual = 0; 00626 n->m_toLarger->m_toLarger = 0; 00627 n->m_toLarger->m_trainSamples = hiList; 00628 n->m_toLarger->m_nSamples = hiCnt; 00629 00630 // add the new two nodes to the heap 00631 nodeReduced lowNode, hiNode; 00632 lowNode.m_node = n->m_toSmallerEqual; 00633 lowNode.m_size = lowCnt; 00634 hiNode.m_node = n->m_toLarger; 00635 hiNode.m_size = hiCnt; 00636 00637 largestNodes.push_back ( lowNode ); 00638 push_heap ( largestNodes.begin(), largestNodes.end(), compareNodeReduced ); 00639 00640 largestNodes.push_back ( hiNode ); 00641 push_heap ( largestNodes.begin(), largestNodes.end(), compareNodeReduced ); 00642 }

The documentation for this class was generated from the following files:

1.5.8

1.5.8